Conversations lay the path to action, and a quick chat often unlocks timely answers that can save untold effort and energy. Moving forward then feels lighter and easier. Knowing where to begin helps overcome friction, and the more natural an interface to a complex system, the easier it is for anyone to get started.

In the past, we’ve talked about Why Conversational AI is Key For Enterprise. With the recent integration of ChatGPT into Marvis (our Virtual Network Assistant), the ability to summarize and answer questions across Juniper Networks’ entire public documentation footprint marks a quantum leap forward for operator experience and understanding.

Marvis’ capabilities now extend beyond natural language understanding (NLU) to include natural language generation (NLG). This allows IT teams at any experience level to ask Marvis questions in natural language and receive natural language responses. Marvis can now pivot from providing insights and interactive troubleshooting to solving an even wider and richer set of challenges with just the right amount of information delivered at the right time. Additionally, Marvis can now answer questions regarding “how,” “what” and “why.”

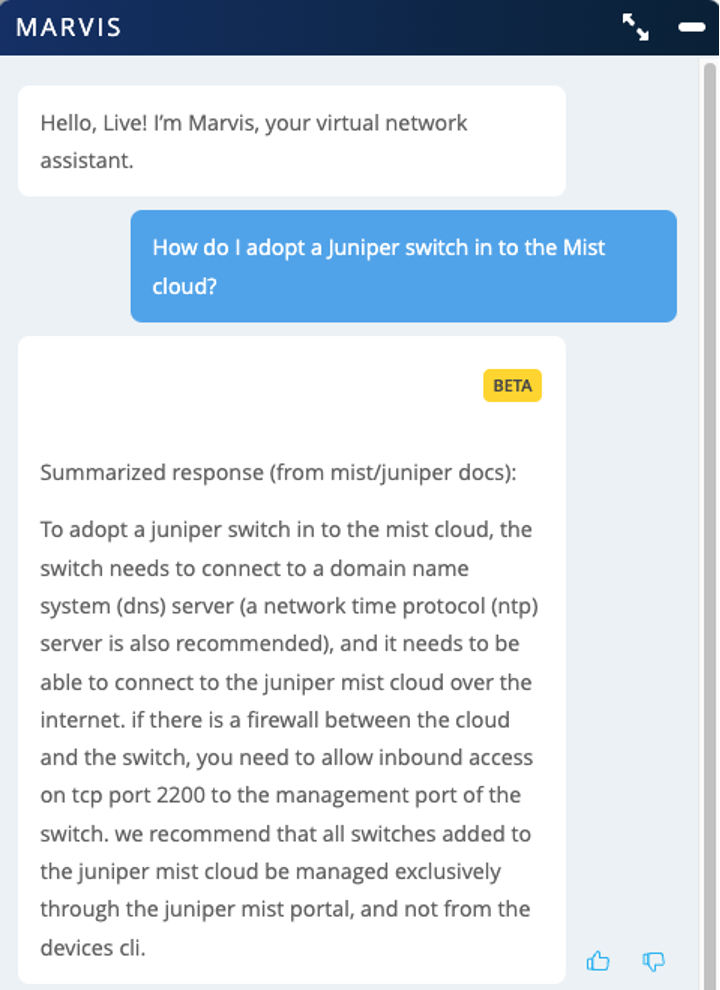

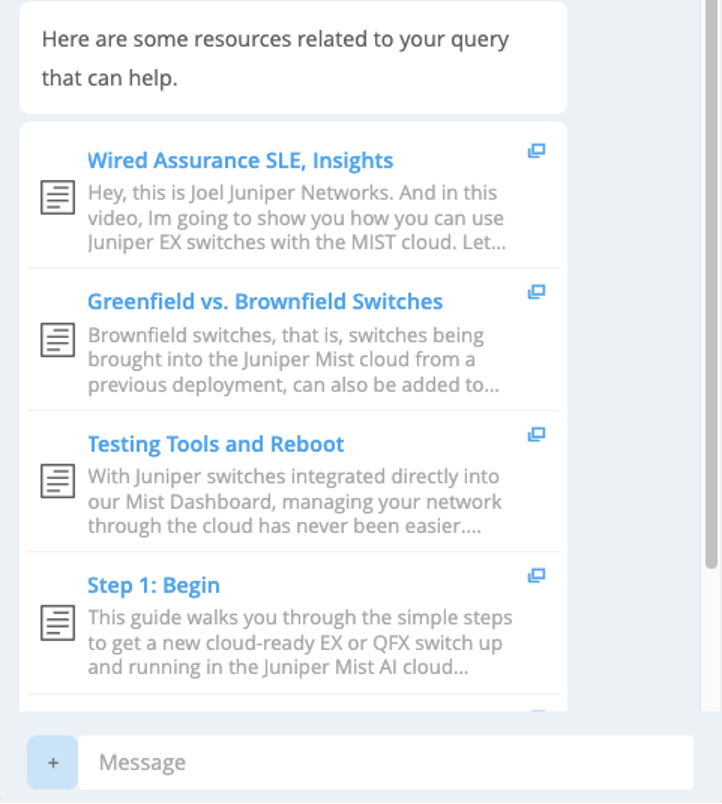

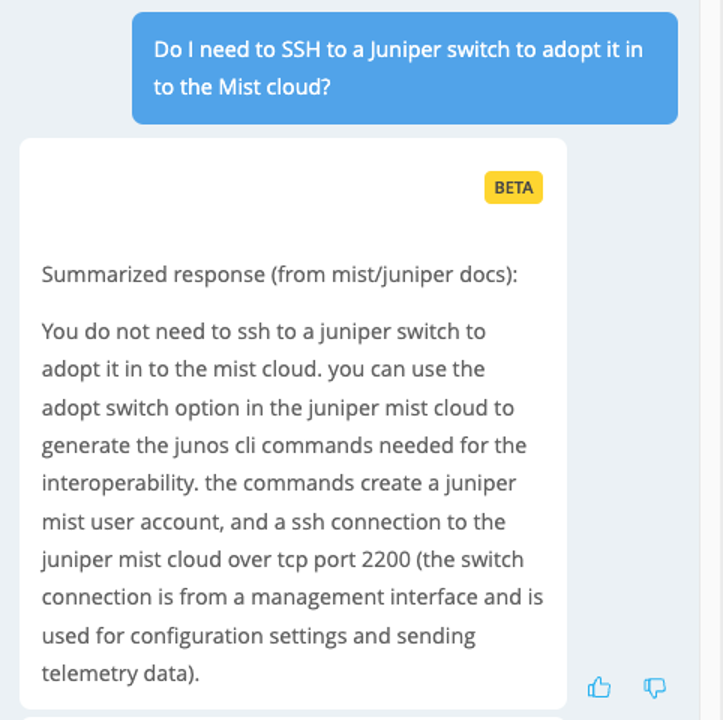

As stated previously, you can ask Marvis natural language questions about your network state and perform troubleshooting, and you can now also get summarized natural language answers about products and services according to your specific questions and context. Relevant Juniper documentation search links are still provided as an addendum in the chat window, but now your 24/7 virtual team member can help with almost anything Juniper technology-related.

You can seek clarification as you go and dive deeper when you need to (as shown below)…

But how does Marvis actually integrate ChatGPT (Chat Generative Pre-trained Transformer) to synthesize and summarize all the Juniper public documentation and generate natural language answers? Marvis leverages a technique called Retrieval Augmented Generation (RAG), but before diving into that, let’s do a quick recap of what ChatGPT actually is.

What is ChatGPT?

To answer the question, “What is ChatGPT?”, let’s ask OpenAI’s public ChatGPT (v3.5) to respond in its own words:

ChatGPT is a variant of the GPT (Generative Pre-trained Transformer) language model developed by OpenAI. GPT models are designed to understand and generate human-like text based on the input they receive. They are part of a broader category of AI models known as natural language processing (NLP) models.

ChatGPT, in particular, is fine-tuned and optimized for generating conversational responses. It is trained on a large corpus of text from the internet and is capable of understanding and generating human-like text in a conversational context. ChatGPT can be used for a wide range of applications, including chatbots, virtual assistants, customer support and more, where natural and engaging interactions with users are desired.

It’s important to note that there may be multiple versions of ChatGPT with varying capabilities and training data.

So, ChatGPT is a machine learning (ML) model you can interact with. Specifically, it’s a large language model (LLM) used for generating natural language interactions.

Integrating ChatGPT with Marvis

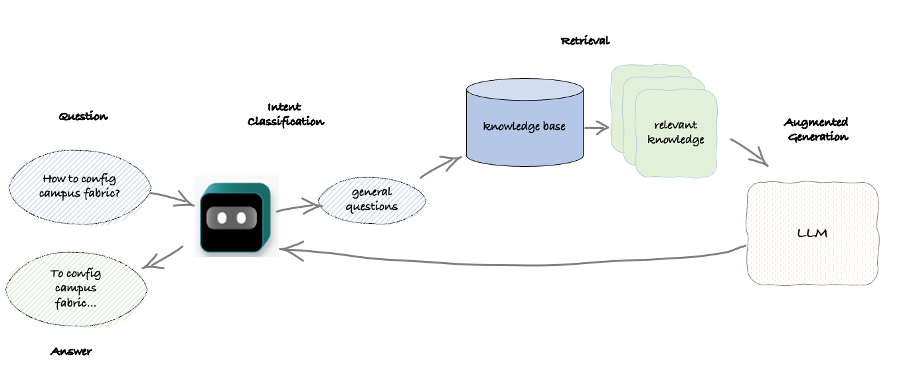

As part of its underlying chatbot framework, Marvis now integrates ChatGPT, including techniques for accuracy and quality which involve both prompt engineering and RAG. This enhances Marvis’ capabilities even further to cover questions about anything in Juniper’s public documentation via a custom knowledge base.

During each interaction, the user’s intent is classified as usual, but Marvis now handles questions of a more general nature. Queries not directly related to troubleshooting, finding site elements or using Marvis Actions result in the query being passed to the retrieval system and then onward to ChatGPT with some custom prompts.
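The routing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the intent labels, the keyword-based `classify_intent` function and the handler names are all hypothetical stand-ins, since the actual classifier and its label set are not described here.

```python
# Hypothetical intent labels for queries Marvis already handled before the
# ChatGPT integration (troubleshooting, site elements, Marvis Actions).
NETWORK_INTENTS = {"troubleshoot", "find_site_element", "marvis_action"}


def classify_intent(query: str) -> str:
    """Toy keyword classifier standing in for a real NLU model (assumption)."""
    text = query.lower().strip()
    if text.startswith("troubleshoot"):
        return "troubleshoot"
    if text.startswith(("find", "locate")):
        return "find_site_element"
    if text.startswith("list marvis actions"):
        return "marvis_action"
    return "general_question"


def route(query: str) -> str:
    """Return the name of the path a query would take."""
    intent = classify_intent(query)
    if intent in NETWORK_INTENTS:
        # Network-specific intents stay on the existing handlers.
        return f"network_handler:{intent}"
    # Everything else goes to the retrieval system, then on to the LLM.
    return "retrieval_then_llm"


print(route("troubleshoot ap-lobby-1"))
print(route("How do I configure EVPN-VXLAN?"))
```

The point of the sketch is the fallback: only queries that match no network-specific intent are handed to the retrieval system and the language model.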

This retrieval system is a customized knowledge base of all publicly facing Juniper documentation updated daily. This indexed repository of domain-specific knowledge is aware of any changes that occur, enabling ChatGPT to be supplied with the latest and most relevant custom content as required.
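One simple way a repository can be “aware of any changes” during a daily refresh is to keep a content hash per document and re-index only what differs. The sketch below assumes exactly that; the `KnowledgeBase` class, its fields and the in-memory storage are hypothetical and say nothing about how the real index is built.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class KnowledgeBase:
    """In-memory stand-in for an indexed documentation store (assumption)."""
    docs: Dict[str, str] = field(default_factory=dict)     # doc_id -> text
    digests: Dict[str, str] = field(default_factory=dict)  # doc_id -> SHA-256

    def refresh(self, crawled: Dict[str, str]) -> List[str]:
        """Re-index new or changed documents and return their ids, sorted."""
        changed = []
        for doc_id, text in crawled.items():
            digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
            if self.digests.get(doc_id) != digest:
                self.docs[doc_id] = text
                self.digests[doc_id] = digest
                changed.append(doc_id)
        # Drop documents that are no longer published upstream.
        for doc_id in set(self.docs) - set(crawled):
            del self.docs[doc_id]
            del self.digests[doc_id]
        return sorted(changed)


kb = KnowledgeBase()
print(kb.refresh({"vlan-guide": "v1", "rrm-guide": "v1"}))  # first crawl
print(kb.refresh({"vlan-guide": "v2", "rrm-guide": "v1"}))  # one page edited
```

Running `refresh` once per day with the latest crawl keeps the store current without re-processing unchanged pages.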

RAG and ChatGPT

Although ChatGPT is considered a huge leap forward in AI (Artificial Intelligence) and natural language processing (NLP) in terms of its size, complexity and usability, it still has some flaws and can sometimes “hallucinate” or make up answers.

These flaws relate to the fact that ChatGPT knows how words go together, but it’s not actually sentient, nor does it conceptually understand the content it’s been trained on. Some of these deficits can be corrected and minimized for specific domains by using the technique of Retrieval Augmented Generation (RAG) to improve the quality of its answers. Using up-to-date facts, snippets and specific domain knowledge as additional input to ChatGPT helps to ensure accuracy and trust are preserved.

Let’s dig a little deeper into ChatGPT and RAG by again directly asking OpenAI’s ChatGPT interface: “How is Retrieval Augmented Generation used with ChatGPT and why?”

Retrieval Augmented Generation (RAG) is a technique used to enhance the capabilities of models like ChatGPT by integrating information retrieval mechanisms. It combines the strengths of both traditional information retrieval and generative language models to provide more contextually relevant and accurate responses in various applications. Here’s how Retrieval Augmented Generation is used with ChatGPT and why it’s beneficial:

- Contextual Understanding: ChatGPT, while powerful, might not always have access to the most up-to-date information. By integrating information retrieval, ChatGPT can pull in recent and contextually relevant information from external sources to better understand and respond to user queries.

- Fact Verification: Retrieval mechanisms can help verify facts and provide authoritative information. When a user asks a factual question, ChatGPT can retrieve information from reliable sources to confirm the accuracy of its responses.

- Generating Diverse Responses: ChatGPT can sometimes generate generic or repetitive responses. Retrieval Augmented Generation allows ChatGPT to diversify its responses by incorporating information from external sources, making the conversation more informative and engaging.

- Real-time Updates: Information retrieval allows ChatGPT to stay updated with current events and developments, making it more useful for tasks that require real-time information, such as news updates, sports scores or stock market data.

- Customization and Personalization: Retrieval mechanisms can be used to pull in personalized information about users, such as their preferences, previous interactions or user-specific data, to provide more tailored responses.

OpenAI’s ChatGPT goes on to explain:

The process of using Retrieval Augmented Generation with ChatGPT typically involves the following steps:

a. Retrieval: ChatGPT sends a query to an external retrieval system, which searches for relevant information in a database or on the internet.

b. Ranking: The retrieved information is ranked based on relevance and importance, ensuring that the most pertinent data is selected.

c. Integration: The selected information is integrated into ChatGPT’s response generation process. This can be done in various ways, such as incorporating it into the response text or using it to influence the generation of the next utterance.

By combining the capabilities of generative models like ChatGPT with information retrieval, Retrieval Augmented Generation aims to improve the accuracy, relevance and informativeness of the responses provided by the model, making it more valuable in a wide range of applications, including customer support, information retrieval and more.

So, now you can ask Marvis about anything in Juniper’s public documentation, be it product- or service-related, and get a summarized answer.
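To make the retrieval, ranking and integration steps concrete, here is a minimal Python sketch. It assumes a tiny in-memory corpus and uses plain term overlap as the relevance score; production systems typically use embeddings or a search engine instead, and none of the function names below come from Marvis or OpenAI.

```python
import re
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase alphanumeric terms; a deliberately crude tokenizer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: Dict[str, str]) -> List[Tuple[str, float]]:
    """Retrieval: score each passage sharing at least one term with the query."""
    terms = tokenize(query)
    scored = []
    for doc_id, text in corpus.items():
        overlap = len(terms & tokenize(text))
        if overlap:
            scored.append((doc_id, overlap / len(terms)))
    return scored


def rank(candidates: List[Tuple[str, float]], top_k: int = 2) -> List[Tuple[str, float]]:
    """Ranking: highest score first, ties broken by id for determinism."""
    return sorted(candidates, key=lambda c: (-c[1], c[0]))[:top_k]


def integrate(query: str, ranked: List[Tuple[str, float]], corpus: Dict[str, str]) -> str:
    """Integration: fold the selected passages into the text sent to the model."""
    context = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id, _ in ranked)
    return f"Context:\n{context}\n\nQuestion: {query}"


corpus = {
    "d1": "Configure a VLAN on an EX switch",
    "d2": "Wi-Fi RRM tuning guide",
    "d3": "VLAN tagging on access points",
}
question = "How do I configure a VLAN?"
print(integrate(question, rank(retrieve(question, corpus)), corpus))
```

The generative model only ever sees the question plus the top-ranked passages, which is what grounds its answer in current documentation rather than in its training data alone.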

Prompt Engineering

As part of any query passed from Marvis to ChatGPT, there is also some initial “prompt engineering” applied as guard rails. This helps with generating the desired responses and retrieving the most relevant information. The practice of prompt engineering generally either prefixes additional “prompts” to a query or tweaks a query to include some initial state or boundary conditions intended to enhance the outcome or, in other cases, to limit the scope of a query.
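As a rough illustration of prefixing guard rails to a query, the Python sketch below wraps a user question with an instruction block and a capped number of documentation excerpts. The wording of `SYSTEM_PREFIX`, the `build_prompt` helper and the excerpt limit are all hypothetical; the prompts Marvis actually uses are not published here.

```python
from typing import List

# Hypothetical guard-rail text that limits the scope of the answer.
SYSTEM_PREFIX = (
    "You are a network assistant. Answer only from the provided documentation "
    "excerpts. If the excerpts do not contain the answer, say you do not know."
)


def build_prompt(query: str, excerpts: List[str], max_excerpts: int = 3) -> str:
    """Prefix guard rails and numbered excerpts to the user's question."""
    # Skip blank excerpts and cap how much context is passed along.
    kept = [e.strip() for e in excerpts if e.strip()][:max_excerpts]
    numbered = "\n".join(f"{i}. {e}" for i, e in enumerate(kept, start=1))
    return f"{SYSTEM_PREFIX}\n\nExcerpts:\n{numbered}\n\nQuestion: {query.strip()}"


print(build_prompt("What is RRM?", ["RRM stands for Radio Resource Management."]))
```

Here the prefix sets the boundary conditions (answer only from the excerpts), while the excerpt cap keeps the prompt focused on the most relevant material.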

As ChatGPT itself puts it:

In RAG, prompt engineering is crucial for the retrieval component, where you need to formulate queries that help retrieve relevant documents or passages from a large corpus of text. Effective prompts ensure that the retrieved information is aligned with the user’s intent or question.

Continuous Improvement and Reinforcement Learning

Each ChatGPT response from Marvis also includes the ability for a user to supply feedback about the quality of each response, effectively providing a feedback loop for continuous improvement. This feedback goes through the Customer Success team and can trigger the modification of existing documentation or the generation of new documentation if required.

Leveraging AIOps (AI for IT Operations)

There are no wrong questions and, thankfully, ChatGPT does not judge. As Marvis continues to evolve and improve as a Virtual Network Assistant by adding capabilities such as NLG to its existing NLU, a more natural engagement with your technological footprint ensues. Finding the right answers and solving problems and issues has never been easier, faster or more natural.

Join us for a live demo of the AI-Driven enterprise here or read more about AI-powered Zoom Troubleshooting and AI-powered RRM enhancements.