Human conversation is complex. When a child continuously asks “why,” they seek clarification and deeper meaning at every turn. It’s through conversation and dialogue that we begin to unlock understanding and discern intent. Dialogue carries its own logic and shifting states, with each sentence linking to and building on the ones before it. As humans, we are happy to work with partial and incomplete information from the outset, as our questions are rarely fully formed to begin with. It’s only by engaging in conversation that each party can learn about, explore and refine a topic toward a mutually satisfactory outcome. The full context is often lacking in an opening gambit and must be established through multiple rounds of interaction. The subject, object, intent and related actions can also change over the course of a conversation as new concerns arise and fresh understandings are reached. These shifts can reorient a conversation toward a new goal or lead to unexpected outcomes.

Experts unlock information and meaning from computer systems by knowing exactly how to query them and how to contextualize their outputs. They use privileged, esoteric commands laced with keywords. Adjacent teams must then piece together a picture of what’s going on by asking those colleagues or by attempting to use rigid interfaces themselves. Rather than learn a new language every time we engage with a new interface, we would prefer to use natural language (either text or speech) to query and engage with the complex systems and platforms under our care. When we get to use what we already know, we are more comfortable and less afraid of making mistakes (or of becoming intimidated and embarrassed). This is one of the significant benefits of Intelligent Conversational Assistants. As they evolve from simple chatbots to virtual assistants and beyond, they bring truly intelligent interfaces and services to an organization. By lowering the bar for access and engagement, they help deliver outcomes more efficiently and effectively. Many tout real AI and Intelligent Conversational Assistants, but do they deliver? Are they trusted, and if so, how?

Dialogue gives rise to better and more complete answers through branching and twisting forms that allow each entity to better understand the other’s position. Rarely can all the information required by both parties be communicated upfront, especially when troubleshooting, as we don’t always know where to start and lack the fidelity or understanding necessary at a particular point in time.

The Evolution to an Intelligent Conversational Assistant

One of the biggest challenges to unlocking the benefits of AI and AIOps revolves around building and maintaining trust. Trust is a complicated topic, yet for the adoption of any AI-driven system to be successful, establishing and maintaining a level of trust with the platform is paramount. Trust can be problematic in human-to-human interactions, and it’s even more challenging in human-to-AI engagements, where skepticism of black-box solutions and industry-wide AI-washing undermine progress. A user or operator must trust an AI engine (and its interface) for its utility to be realized. Adoption therefore depends on this trust, which is built on the pillars of consistency, transparency and explainability. Explainability means the system or platform can demonstrate and prove the validity of its answers or recommendations. Proof, when requested, then requires a multi-step, multi-state engagement in which context and clarity are crucial.

To build this relationship, then, trusted AI must be able to explain its reasoning. This may take the form of presenting supporting data or refined information to make the case for its claims. The user interface must facilitate this complex messaging for effective communication with the user to take place. While AI and machine learning (ML) models and algorithms may take many forms, it’s the interface that delivers the actual communication at the point of use. This interface is the fulcrum on which all user experience, relationships and trust pivot. If there’s a lack of understanding, an unusual recommendation or a scenario where the computer blankly says “no,” we want to question the assertion, understand the “why” and enter into a dialog with the system to reassure ourselves that we are on the correct path.

This level of engagement and “explainability” is the skeleton key to unlocking trusted AI for use in AIOps. Natural Language Technologies (NLT) are increasingly helping to deliver on this promise, but as always, it’s the implementation and execution that are key.

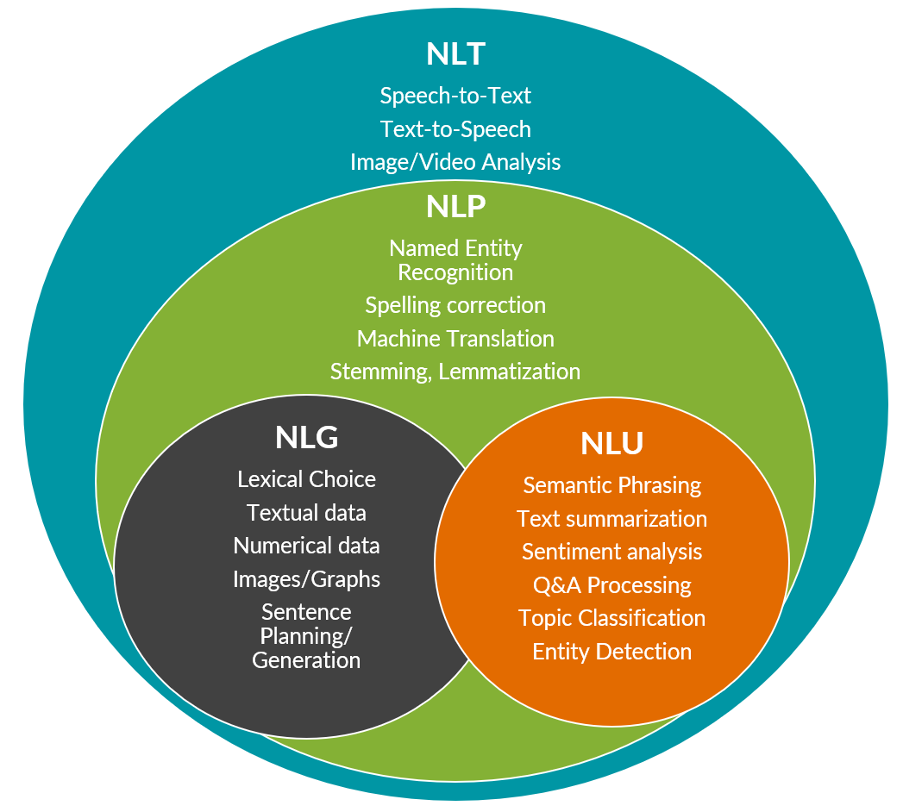

When we break down the categories related to AI and, more specifically, NLT, we see that Natural Language Processing (NLP) comprises Natural Language Understanding (NLU) and Natural Language Generation (NLG). Combined, they form parts of a Conversational Interface, which uses a dialog management framework to orchestrate almost all interactions.

Establishing the scope, intent and desired outcome of a user’s request is itself a non-trivial task. When ongoing state is involved, i.e., when either party needs more than a single question, dialog management adds further challenges. Answering questions now depends on an evolving context established by previous interactions. This forms a complex chain, or trajectory, through a “conversational” state space, and making “sense” of it draws on multiple solutions.
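To make the idea of an evolving context concrete, here is a minimal Python sketch of context carry-over between turns. It assumes entities have already been extracted from each utterance into a dictionary; the `DialogContext` class and its slot names are hypothetical illustrations, not Marvis internals. Entities mentioned in a new turn override earlier values, and anything left unmentioned carries forward.

```python
from dataclasses import dataclass, field


@dataclass
class DialogContext:
    """Hypothetical store for entities established in earlier turns."""

    slots: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def update(self, utterance: str, entities: dict) -> dict:
        # Keep the raw turn so later steps can inspect the conversation so far.
        self.history.append(utterance)
        # Entities from this turn override earlier ones; unresolved (None) values are skipped.
        self.slots.update({k: v for k, v in entities.items() if v is not None})
        return dict(self.slots)


ctx = DialogContext()
ctx.update("what's wrong with site", {"intent": "troubleshoot", "site": None})
ctx.update("Jonathan's Site", {"site": "Jonathan's Site"})
state = ctx.update("what about yesterday?", {"time": "Yesterday"})
print(state)
# {'intent': 'troubleshoot', 'site': "Jonathan's Site", 'time': 'Yesterday'}
```

The third turn never mentions a site or an intent, yet both survive from earlier turns, which is what lets a follow-up like “what about yesterday?” make sense.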

In specialist fields like data networking, processing a question or query about the state of the network (like reasons for a non-optimal user experience) requires that the system can extract different types of entities from any question, including (but not limited to):

- site names and network boundaries

- device types, models, operating system, names/labels, and their locations

- times and durations

- users and usernames

- MAC addresses, IP addresses, VLANs

- event types, actions and outcomes

- query topics
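A few of the entity types listed above have regular surface forms, so a first pass can be sketched with regular expressions. The sketch below is a simplified illustration under that assumption: the `PATTERNS` table and `extract_entities` function are hypothetical, the IP pattern does not validate octet ranges, and free-form entities such as site names, device labels and usernames would need lookups against known inventory or trained models instead.

```python
import re

# Hypothetical patterns for entities with a predictable shape.
PATTERNS = {
    # Six hex pairs separated by ":" or "-".
    "mac": re.compile(r"\b(?:[0-9A-Fa-f]{2}[:-]){5}[0-9A-Fa-f]{2}\b"),
    # Dotted quad; does NOT check that each octet is <= 255.
    "ip": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    # "VLAN 20", "vlan20"; captures only the number.
    "vlan": re.compile(r"\bvlan\s*(\d{1,4})\b", re.IGNORECASE),
    # Relative durations such as "last 2 hours"; captures (amount, unit).
    "duration": re.compile(r"\b(?:last|past)\s+(\d+)\s+(minutes?|hours?|days?)\b", re.IGNORECASE),
}


def extract_entities(text: str) -> dict:
    """Return every match for each entity type found in the text."""
    found = {}
    for name, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[name] = matches
    return found


question = "Why did client aa:bb:cc:dd:ee:ff at 10.1.2.3 on VLAN 20 drop in the last 2 hours?"
print(extract_entities(question))
# {'mac': ['aa:bb:cc:dd:ee:ff'], 'ip': ['10.1.2.3'], 'vlan': ['20'], 'duration': [('2', 'hours')]}
```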

Additionally, irrespective of the footprint and network graph, an ontology is required to tie together the concepts, relationships, data and entities needed to understand the intent behind a natural language request or query. This is only one part of the challenge of democratizing troubleshooting and providing access to users of all experience levels.
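One lightweight way to picture such an ontology is as a set of (subject, relation, object) triples that can be queried to scope a request. The triples, relation names and helper functions below are hypothetical examples chosen for illustration; a real network ontology would be far richer and backed by the live network graph.

```python
# Hypothetical ontology fragment stored as (subject, relation, object) triples.
TRIPLES = [
    ("Jonathan's Site", "contains", "AP-Lobby"),
    ("Jonathan's Site", "contains", "Switch-01"),
    ("AP-Lobby", "is_a", "access_point"),
    ("Switch-01", "is_a", "switch"),
    ("AP-Lobby", "serves", "vlan:20"),
]


def objects(subject: str, relation: str) -> list:
    """Everything that `subject` is linked to via `relation`."""
    return [o for s, r, o in TRIPLES if s == subject and r == relation]


def subjects(relation: str, obj: str) -> list:
    """Everything linked to `obj` via `relation`."""
    return [s for s, r, o in TRIPLES if r == relation and o == obj]


def scope_for_device(device: str) -> dict:
    """Resolve a device mentioned in a question to its type and containing site."""
    sites = subjects("contains", device)
    types = objects(device, "is_a")
    return {
        "device": device,
        "type": types[0] if types else None,
        "site": sites[0] if sites else None,
    }


print(scope_for_device("AP-Lobby"))
# {'device': 'AP-Lobby', 'type': 'access_point', 'site': "Jonathan's Site"}
print(subjects("serves", "vlan:20"))
# ['AP-Lobby']
```

A question that only names “AP-Lobby” can thereby be scoped to its site without the user having to say which site it belongs to.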

Lessons for Conversational AI

Conversation is a continuous proposition that depends on shared logic and involves arriving at a common state. Still, when dealing with a single round of question answering, a platform may make some assumptions to fill in gaps or missing context in a user’s query. If a request is specific and direct enough, the system may answer correctly and immediately, but knowing the right question to ask upfront is often a challenge. With missing context and a lack of initial detail, a user would typically have to start the whole questioning process again. A conversational interface that uses dialog management largely removes this problem.

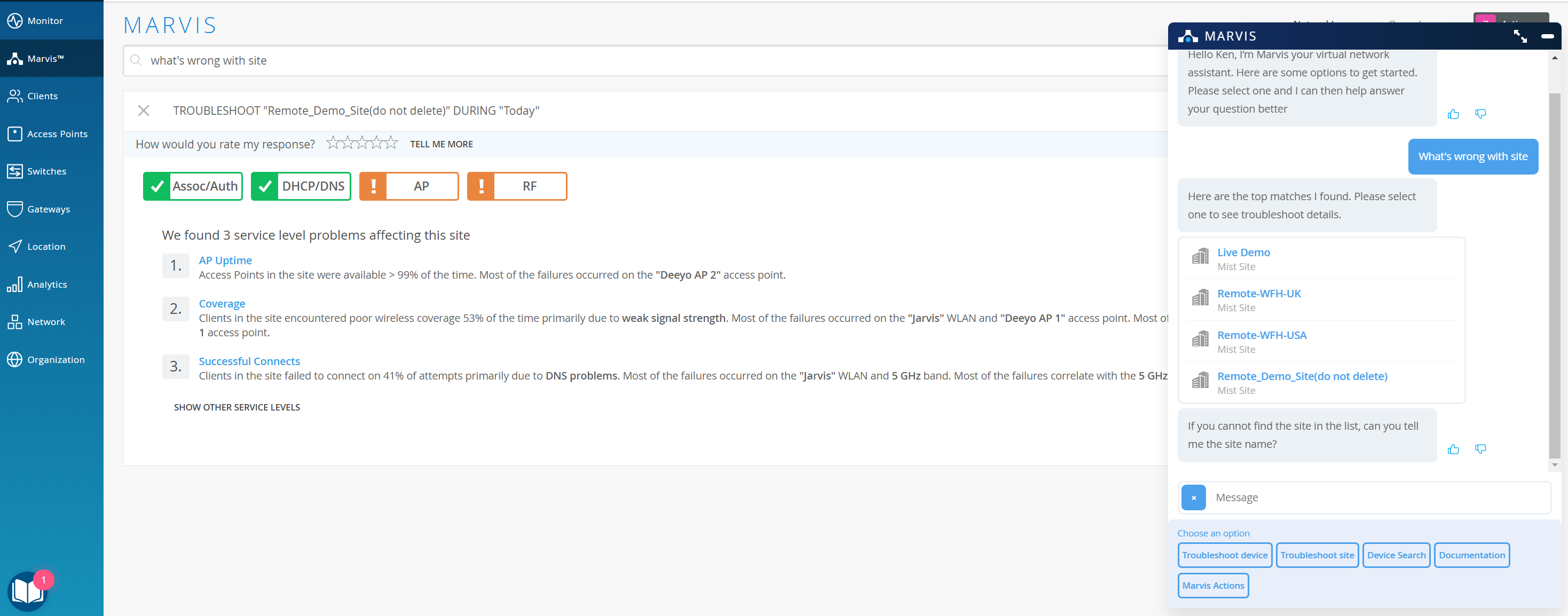

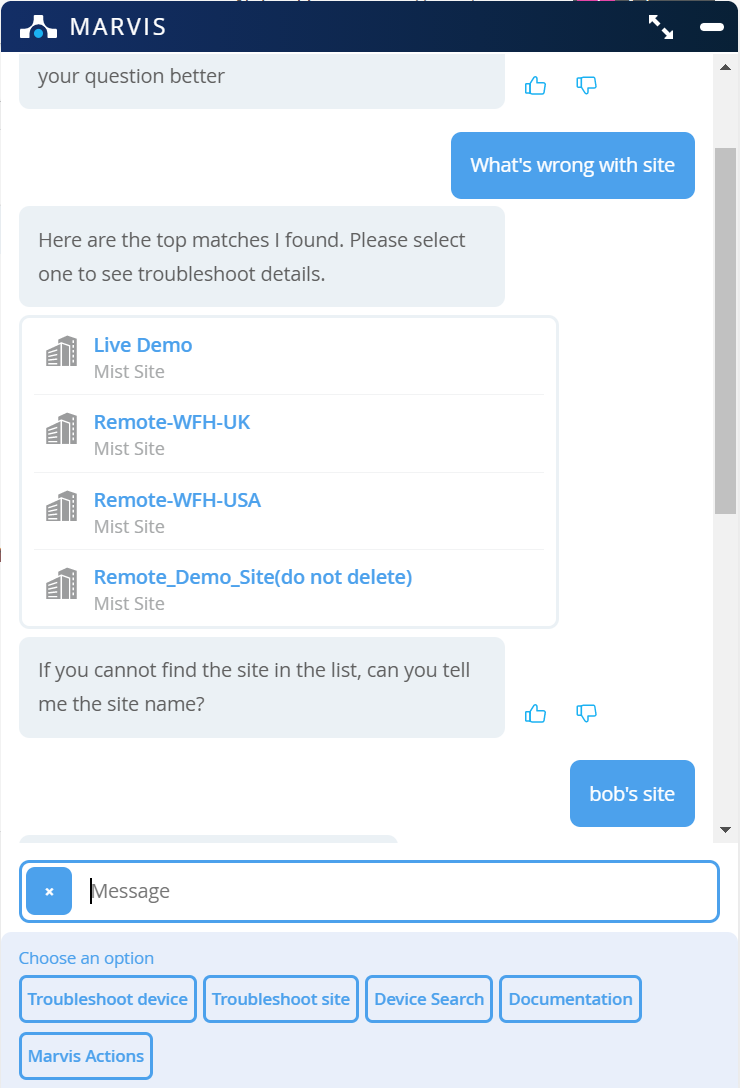

In the example below, a user asks Marvis, the Virtual Network Assistant, the same question via two different interface types: on the left-hand side is a standalone Q&A interface, and on the right-hand side is a conversational interface.

The interface on the left resembles a more traditional, search-like dialog box. The query “what’s wrong with site” is processed and transformed into ‘TROUBLESHOOT “Jonathan’s Site” DURING “Today”’, where Marvis has made some assumptions about the location, intent and relevant time period. So, what was the user’s true intent? It wasn’t a product or document search, so troubleshooting was a safe fallback. Entity detection knew it was a query about a location, but which specific site label should Marvis use, and what sort of problem is the user experiencing? Rather than have the user repeat the question with increasingly specific parameters (or look up a list of sites and try again), surely the platform could engage in dialog and help us refine the query naturally. It could then qualify any gaps along the way, just as we would when talking to a human assistant.
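The fallback behavior described above can be sketched as filling any unresolved slot with a default. In this illustrative Python version, `ParsedQuery`, `to_structured` and the choice of default site are assumptions; only the output shape mirrors the transformed query shown in the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ParsedQuery:
    """Hypothetical output of the NLU step; None marks an unresolved slot."""

    intent: Optional[str] = None
    site: Optional[str] = None
    time: Optional[str] = None


def to_structured(parsed: ParsedQuery, default_site: str, default_time: str = "Today") -> str:
    # No product or document search was recognized, so fall back to troubleshooting.
    intent = parsed.intent or "TROUBLESHOOT"
    # Assumption: the caller supplies a default site, e.g. the site the user last viewed.
    site = parsed.site or default_site
    time = parsed.time or default_time
    return f'{intent} "{site}" DURING "{time}"'


print(to_structured(ParsedQuery(), default_site="Jonathan's Site"))
# TROUBLESHOOT "Jonathan's Site" DURING "Today"
```

Every default is a silent guess, which is exactly the weakness a conversational interface addresses by asking instead of assuming.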

In the interface on the right, we see that the conversational interface asks us to qualify the site in question by automatically searching for and suggesting sites. This conversation feels more natural and continues with renewed context once a location is nominated.
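Suggesting candidate sites can be approximated with fuzzy string matching. This sketch uses Python’s standard `difflib.get_close_matches`; the site list, the `cutoff` value and the reply wording are hypothetical, and a production assistant would likely rank candidates with richer signals than string similarity alone.

```python
import difflib

# Hypothetical site inventory for one organization.
SITES = ["Jonathan's Site", "Jonathan Home Lab", "HQ Campus", "Warehouse East"]


def suggest_sites(mention: str, sites=SITES, n: int = 3, cutoff: float = 0.4) -> list:
    """Return up to n site names that fuzzily match the user's mention, best first."""
    by_lower = {s.lower(): s for s in sites}
    matches = difflib.get_close_matches(mention.lower(), list(by_lower), n=n, cutoff=cutoff)
    return [by_lower[m] for m in matches]


def clarify_site(mention: str) -> str:
    """Pick a site if the match is unambiguous; otherwise ask the user to choose."""
    candidates = suggest_sites(mention)
    if len(candidates) == 1:
        return f"Using {candidates[0]}."
    if candidates:
        return "Which site did you mean? " + ", ".join(candidates)
    return "I couldn't find that site. Which site should I look at?"


print(clarify_site("Jonathan"))
# Which site did you mean? Jonathan's Site, Jonathan Home Lab
print(clarify_site("HQ Campus"))
# Using HQ Campus.
```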

This level of NLU leverages multiple models and relies on a multi-step pipeline to extract and refine entities, time periods and intent. Features are extracted using a range of tokenizers and subsequent “featurizers,” starting with basic tidying such as removing whitespace and regex-based extraction of special words. The pipeline then matches lexical and syntactic structure, detects entities and synonyms, and applies a range of classifiers that depend on the overall ontology and network graph.
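The stages described above (tidying, tokenizing, synonym mapping and classification) can be strung together as a toy pipeline. This is a hedged sketch: the synonym table and keyword sets are invented for illustration, and the keyword-overlap `classify` function stands in for the trained classifiers the text describes.

```python
import re

# Hypothetical synonym table mapping user wording onto canonical ontology terms.
SYNONYMS = {"wifi": "wireless", "wi-fi": "wireless", "ap": "access_point", "slow": "poor_experience"}

# Hypothetical keyword sets standing in for trained intent classifiers.
INTENT_KEYWORDS = {
    "troubleshoot": {"wrong", "poor_experience", "broken", "drop"},
    "search_docs": {"how", "configure", "docs"},
}


def tidy(text: str) -> str:
    # Collapse runs of whitespace, trim the ends and lowercase.
    return re.sub(r"\s+", " ", text).strip().lower()


def tokenize(text: str) -> list:
    # Keep hyphens and apostrophes inside words so "wi-fi" stays one token; drop punctuation.
    return re.findall(r"[a-z0-9][a-z0-9'\-]*", text)


def normalize(tokens: list) -> list:
    # Replace known synonyms with their canonical term.
    return [SYNONYMS.get(t, t) for t in tokens]


def classify(tokens: list) -> str:
    # Score each intent by keyword overlap; report "unknown" if nothing matches.
    scores = {intent: len(words & set(tokens)) for intent, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def pipeline(text: str):
    tokens = normalize(tokenize(tidy(text)))
    return tokens, classify(tokens)


print(pipeline("  Why is the   Wi-Fi so SLOW at HQ? "))
# (['why', 'is', 'the', 'wireless', 'so', 'poor_experience', 'at', 'hq'], 'troubleshoot')
```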

It’s dialog management that keeps the state of the conversation, makes the transitions and manages the dialog flow as it progresses through each interaction. Dialog management’s logic rapidly refines the context (including supporting suggestions) and makes interfacing with complex systems easy. Problems become simpler for everyone to engage with, solve, and then fix or communicate to others. Although supporting data and documentation can also be surfaced, it’s the conversational interface that removes the need for teams to learn a new syntax or specialty when troubleshooting their own complex network footprints. Maintaining positive user experiences starts behind the scenes, by empowering operations and support teams to better manage and interface with accelerating complexity.
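The state-keeping and transitions that dialog management performs can be illustrated with a small slot-filling state machine. The `State` names, required slots and prompts below are hypothetical; the sketch only shows the general pattern of asking for whatever is missing and moving on once the context is complete.

```python
from enum import Enum, auto


class State(Enum):
    AWAIT_QUERY = auto()
    AWAIT_SITE = auto()
    ANSWERING = auto()


class DialogManager:
    """Minimal slot-filling dialog manager (hypothetical, for illustration)."""

    def __init__(self):
        self.state = State.AWAIT_QUERY
        self.slots = {}

    def handle(self, intent=None, site=None) -> str:
        # Merge whatever this turn supplied into the running context.
        if intent:
            self.slots["intent"] = intent
        if site:
            self.slots["site"] = site
        # Transition to the state that requests the first missing required slot.
        if "intent" not in self.slots:
            self.state = State.AWAIT_QUERY
            return "What can I help you with?"
        if "site" not in self.slots:
            self.state = State.AWAIT_SITE
            return "Which site do you mean?"
        # All required slots are filled, so the request can be answered.
        self.state = State.ANSWERING
        return f"Running {self.slots['intent']} for {self.slots['site']}."


dm = DialogManager()
print(dm.handle(intent="troubleshoot"))  # Which site do you mean?
print(dm.handle(site="HQ Campus"))       # Running troubleshoot for HQ Campus.
```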

Productization

Developing an intelligent conversational assistant is a complex undertaking. Although teams can take advantage of frameworks and advanced methods like transfer learning to accelerate their efforts, the challenge of delivering value and deploying features remains. While the key tenets of simplicity and usability help bolster trust in a platform, it’s the overall user experience that determines user adoption and stickiness. A user may start out as an advocate but turn into a detractor after a single bad experience, so instrumenting the complete user journey through a conversational interface is par for the course. Basic metrics like session duration and frequency of use tell part of the story, but implicitly collecting user sentiment and tying it to explicit feedback is extremely important for gauging user experience.
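As an illustration of tying implicit sentiment to explicit feedback, the sketch below summarizes per-session records. The `Session` fields, the assumption that a sentiment model yields a score in [-1, 1], and the sign-agreement rule are all hypothetical choices for this example, not a description of how any particular product measures user experience.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Session:
    session_id: str
    duration_s: float
    turns: int
    implicit_sentiment: float         # hypothetical model score in [-1, 1]
    explicit_feedback: Optional[int]  # +1 thumbs up, -1 thumbs down, None if not given


def summarize(sessions: list) -> dict:
    rated = [s for s in sessions if s.explicit_feedback is not None]
    # A rated session "agrees" when the sign of implicit sentiment matches the explicit rating.
    agree = [s for s in rated if (s.implicit_sentiment >= 0) == (s.explicit_feedback > 0)]
    return {
        "sessions": len(sessions),
        "mean_duration_s": mean(s.duration_s for s in sessions),
        "mean_sentiment": mean(s.implicit_sentiment for s in sessions),
        "feedback_rate": len(rated) / len(sessions),
        "agreement_rate": len(agree) / len(rated) if rated else None,
    }


sample = [
    Session("a", 120.0, 4, 0.5, 1),
    Session("b", 45.0, 2, -0.5, -1),
    Session("c", 300.0, 9, -0.25, None),
    Session("d", 60.0, 3, 0.25, -1),
]
summary = summarize(sample)
print(summary)
```

Sessions where the two signals disagree (like session "d") are natural candidates for closer review.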

Suppose a user has only 50 percent confidence that an intelligent conversational assistant will understand a question or provide the correct answer (after already expending energy in the engagement). In this case, trust and usage will rapidly decay. With 80 percent or greater confidence of a correct or useful answer, engagement will increase over time. This also depends on the expected cadence of use and the breadth of services offered. The flexibility and utility of a conversational interface are, in theory, bounded only by the depth and breadth of services that the underlying AI engine or platform exposes to it.
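A simplified calculation shows why the gap between 50 and 80 percent matters so much. Assuming, purely for illustration, that each answer is independently useful with the same probability, the chance of a run of good answers and the expected number of misses can be computed exactly with Python’s `fractions` module.

```python
from fractions import Fraction


def streak_probability(p: Fraction, n: int) -> Fraction:
    """Probability that n independent questions are all answered usefully."""
    return p ** n


def expected_misses(p: Fraction, n: int) -> Fraction:
    """Expected number of unhelpful answers over n questions."""
    return (1 - p) * n


for label, p in [("50%", Fraction(1, 2)), ("80%", Fraction(4, 5))]:
    print(label, float(streak_probability(p, 5)), float(expected_misses(p, 10)))
# 50% 0.03125 5.0
# 80% 0.32768 2.0
```

Under these assumptions, a user at 50 percent sees five useful answers in a row only about 3 percent of the time and expects five misses in ten questions; at 80 percent the streak probability rises to roughly 33 percent and the expected misses fall to two.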

Some may say that intelligent conversational assistants are a precursor interface to future artificial general intelligences, but for now, we can rest assured that virtual assistants like Marvis are a perfect and trusted fit for AIOps in the bounded domain of data networking.

Find out more in our whitepaper, “The Business Value of AIOps-Driven Network Management,” or see our infographic on why IT professionals are ready for AIOps!

Join us at Mobility Field Day 6 on July 15, 2021 to hear from our Wi-Fi experts as they share how customers are benefiting from Marvis, AIOps and more.