In a significant leap toward network modernization and energy efficiency, Turkcell (NYSE: TKC; BIST: TCELL), Türkiye’s leading technology company and mobile operator, has successfully completed a large-scale Internet Gateway (IGW)

HPE Networking Chief AI Officer Bob Friday and I recently participated in a podcast with Tech Field Day. The starting premise of the show was, “Data center networking needs AI,”

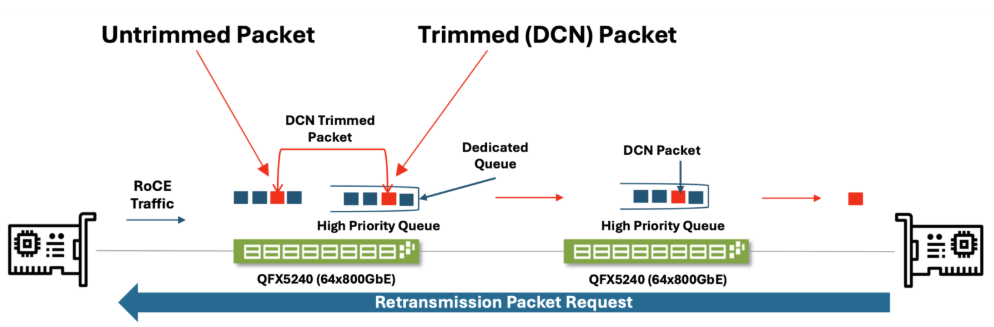

Artificial Intelligence (AI) and High-Performance Computing (HPC) are currently experiencing significant growth driven by advancements in machine learning, natural language processing, generative AI, robotics, and autonomous systems. At the core

At Interop Tokyo 2025, Juniper Networks and Spirent Communications took center stage with a powerful joint demonstration that earned them the coveted “Best of Show” award. The demo showcased the

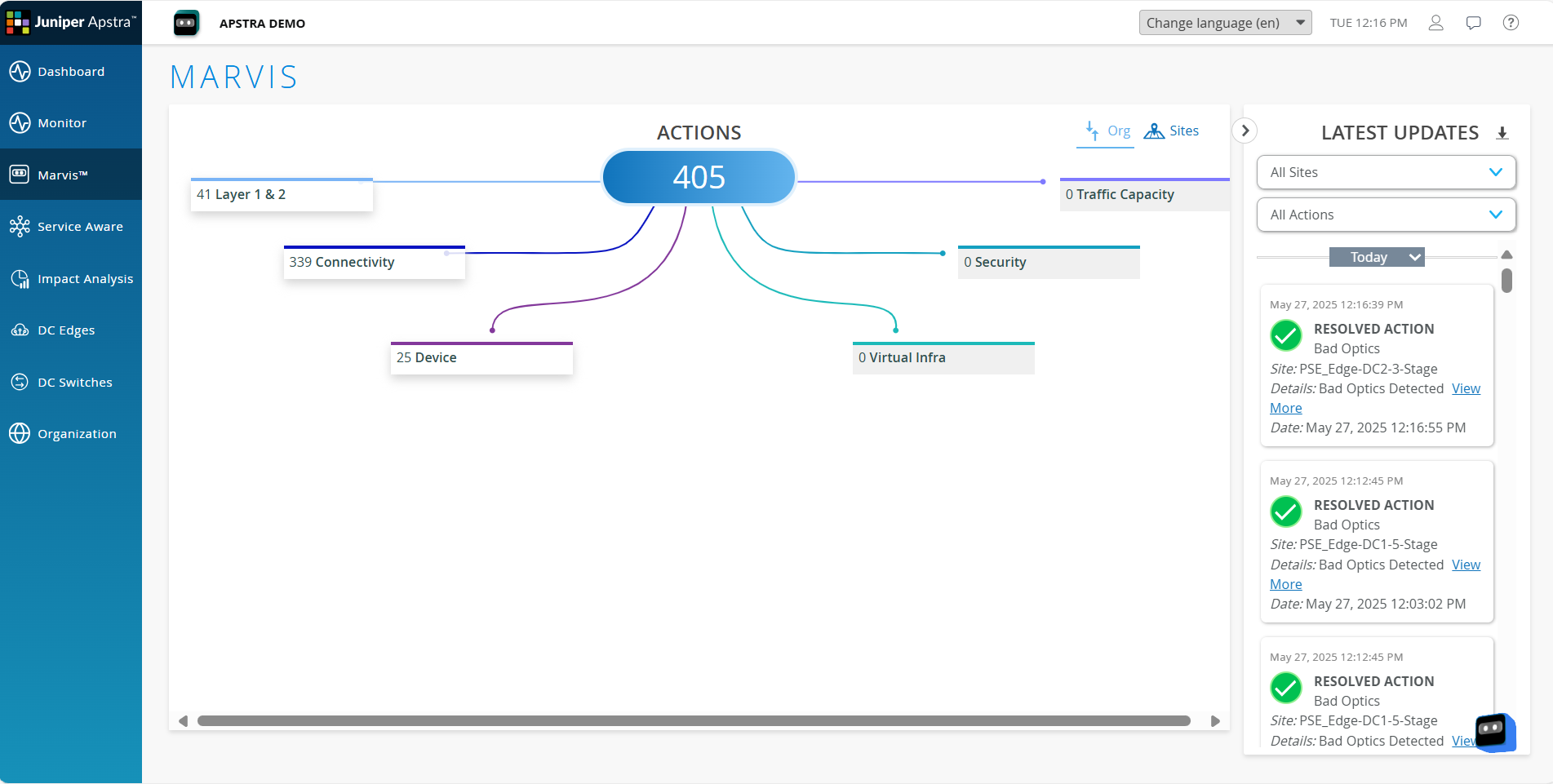

Juniper has cemented its position as an industry leader in delivering innovative data center networking solutions. We have been named a Leader in the 2025 Gartner® Magic Quadrant™ for Data

“AI is going to reshape every industry and every job,” according to Reid Hoffman, co-founder of LinkedIn, and your data center is the new battleground. This is where Juniper Networks

As CIO of a leading technology company, I have a front-row seat to the ever-increasing demands placed on our IT infrastructure. Business agility and artificial intelligence (AI) are not buzzwords;

In 2024, Juniper Networks raised the bar for network performance and scalability, announcing our first routing solutions to deliver 800G (or double-density 400G) performance. Our new additions to the PTX

Data center networking is HOT, and by many measures Juniper is the hottest vendor in this space. Why now? And why Juniper? First, what is making this space so hot?