Everyone can use a little help sometimes. When you face a complex problem or a recurring challenge, and you ask for help or someone proactively offers it, do you trust them, and why? Will they save you time, clarify the problem, suggest an answer or even solve the issue for you? Can they demonstrate that their assistance is evidence-based? When engaging any assistant, the challenges tend to center on trust, confidence, competence and communication. Trust, like a battery, may begin empty and need charging before we have the confidence to ask for and receive help. Competence, also a factor in trust, is hard to judge in advance without evidence, and every step requires clear communication.

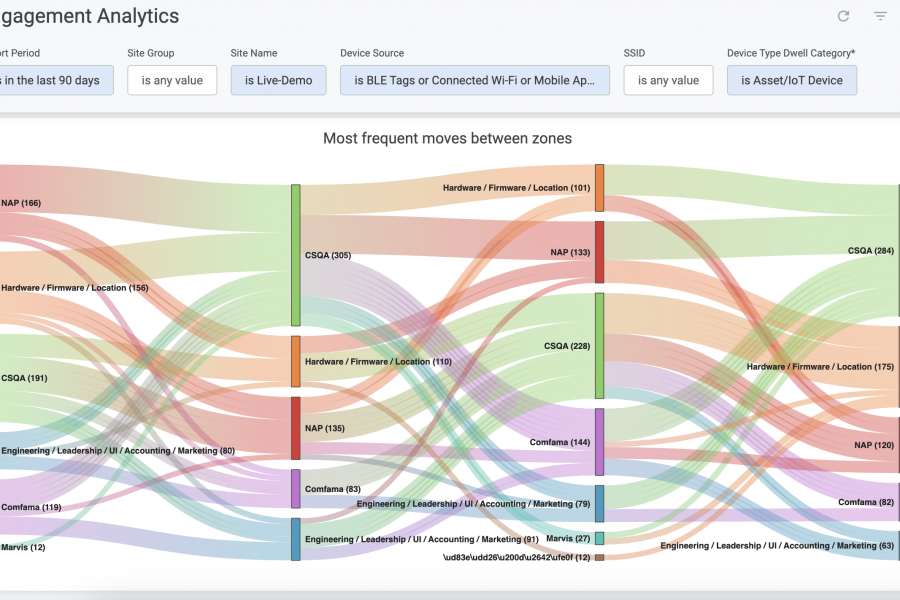

So, what is a Virtual Network Assistant (VNA), and, more specifically, what is an AI (artificial intelligence)-powered chatbot? It's a helper and an interface to a set of tools and intelligent processes. A chatbot is often a reactive entity you call on, but increasingly chatbots are proactive too. A user initiates an interaction with a VNA to answer a question, get help with a problem or pinpoint the root cause of an issue or outage. A VNA performs complex tasks and workflows, such as troubleshooting, and can correlate data to display trends or patterns in a digestible and actionable format.

When we look deeper into the underlying frameworks that power a new breed of intelligent virtual assistants, we start to appreciate the complex relationships between events, triggers, actions and workflows. As trust in AI-driven systems grows, we can more readily move from human-initiated interactions, through gate-kept automation, and finally on to fully autonomous closed-loop workflows. This is how AI-driven frameworks provide a path from reactive to proactive operation. By rapidly removing toil, improving continuously from human feedback and repeatedly demonstrating better outcomes, AI begins to earn trust. However, transparency and user control are crucial to growing and maintaining that trust.

New Interfaces to Complexity

How do you engage with AI, and are you even aware of it when you do? Many modern applications include services that depend partly or wholly on AI engines and frameworks. Machine Learning (ML), a subset of AI, underpins and facilitates many of the innovations just below the surface. Yet as users of a service or product, we must be able to interact and engage with the AI for any of its utility to be realized. Humans may still reign supreme at many tasks, but increasingly we're democratizing access to AI platforms. Tools like chatbots give us new, easier-to-use interfaces to complexity, letting us simplify our queries while enhancing our capabilities. This can accelerate our decision-making, which in turn increases our confidence. But how do we build trust with an AI-powered assistant?

The interface to AI really matters when building a trust relationship. Whether it's an interactive visualization or a recommendation engine that troubleshoots complex problems (and offers solutions), we need to be able to trust the inferences it makes. Giving interfaces human or animal personas only goes so far. Focusing on the quality and utility of the AI engine's outputs, and on ease of use, helps as well, but transparency is the key to building trust. When a framework and interface give the user the greatest possible scope for introspection and validation, the black box of AI becomes ever more translucent, and trust builds faster.

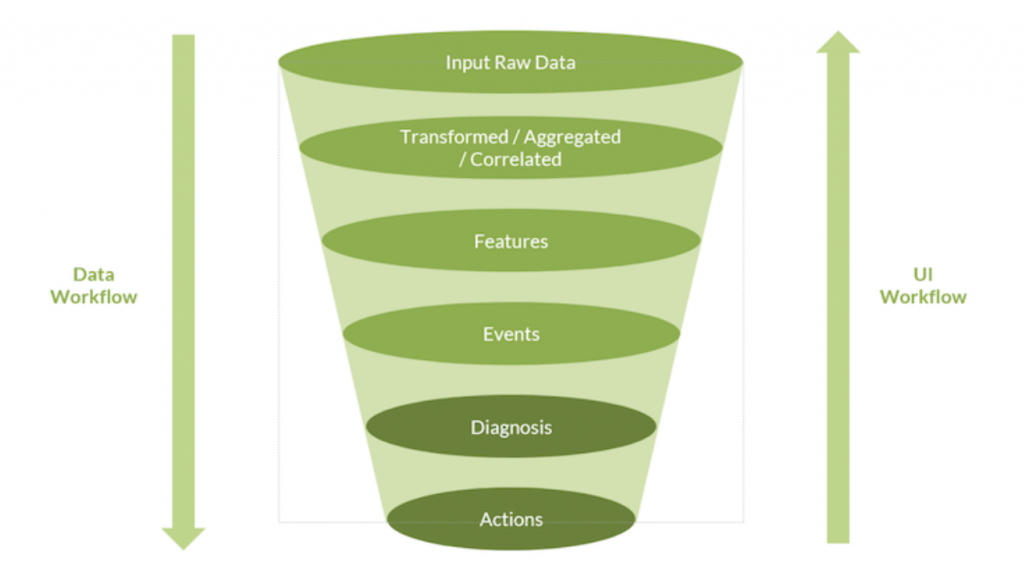

Figure 1. High-level Marvis AI framework

To be useful, raw data must eventually result in either a suggested or an executed action. Asking a user to trust a wholly autonomous workflow from the outset is often too big a leap, so the answer is to let the user step through the supporting layers, which build from raw data at the bottom up to actions at the top (see Figure 1). The UI (user interface) workflow lets you delve back into events from previous tiers, peeling back the layers all the way to the raw inputs. This facilitates transparency and thus trust. A framework that lets domain experts satisfy their skepticism by digging deeper, while letting novices explore as far as their curiosity takes them, helps beginners and seasoned veterans alike establish trust, all while increasing their productivity and learning. This engagement also forms a virtuous cycle that can be leveraged to further improve and train the AI.
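To make the drill-down idea concrete, here is a minimal Python sketch, assuming a generic framework in which every record at one tier keeps references to the lower-tier records that support it. The `Record` class, the `peel_back` and `raw_inputs` helpers, the layer names and the sample data are all hypothetical illustrations, not Marvis internals or any Mist API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Record:
    """One item at some tier of a hypothetical layered framework."""
    layer: str    # hypothetical tier name, e.g. "raw", "event", "action"
    summary: str  # human-readable description shown in the UI
    # Lower-tier records that justify this one (empty for raw inputs).
    supports: List["Record"] = field(default_factory=list)


def peel_back(record: Record, depth: int = 0) -> List[str]:
    """Walk from a record down to its raw inputs, one indented line per record."""
    lines = [f"{'  ' * depth}[{record.layer}] {record.summary}"]
    for child in record.supports:
        lines.extend(peel_back(child, depth + 1))
    return lines


def raw_inputs(record: Record) -> List[Record]:
    """Collect the leaf records, i.e. the raw evidence behind a record."""
    if not record.supports:
        return [record]
    leaves: List[Record] = []
    for child in record.supports:
        leaves.extend(raw_inputs(child))
    return leaves


# Hypothetical example: a suggested action backed by one event and two raw inputs.
raw_a = Record("raw", "client 1: DHCP timeout")
raw_b = Record("raw", "client 2: DHCP timeout")
event = Record("event", "DHCP failures on one VLAN", [raw_a, raw_b])
action = Record("action", "Suggest: check the DHCP server", [event])

print("\n".join(peel_back(action)))
```

Running it prints the suggested action, then its supporting event, then the two raw inputs, each indented one level deeper. Keeping explicit links to the supporting evidence, rather than storing only the final conclusion, is what makes this kind of drill-down possible.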

Taking Mist as an example, the VNA is only one part of the user interface. It lowers the bar for complex querying of network state by letting a user ask natural-language questions, and it then presents data visualizations and actionable answers. This addresses a core customer need: querying a complex environment about current or past events and immediately identifying problems. The VNA surfaces context and root causes for service degradations, so decisions can be made and actions taken immediately. This is only one user-driven, reactive mode built on Marvis (the underlying AI engine); Marvis also powers semi- and fully proactive AI-driven modes of operation. Corrective actions that require user engagement are recommended (i.e., the human still acts as a gatekeeper), but once trusted, Marvis can perform proactive, fully autonomous actions, including verifying them afterward. This opens the door to real AIOps (AI for IT Operations) and previously unseen levels of assurance.
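The progression from recommendations with a human gatekeeper to autonomous, self-verifying actions can also be sketched in Python. This is a minimal sketch under stated assumptions: the `Mode` enum, the `handle` function and its callbacks, the sample action string, and especially the `TrustPolicy` rule (promote to autonomous mode after a hypothetical number of consecutive verified outcomes, reset on any decline or failure) are illustrative inventions, not a description of how Marvis decides when to act on its own.

```python
from enum import Enum
from typing import Callable, List


class Mode(Enum):
    RECOMMEND = "recommend"    # gate-kept: a human must approve first
    AUTONOMOUS = "autonomous"  # closed loop: act, then verify


def handle(action: str, mode: Mode,
           approve: Callable[[str], bool],
           execute: Callable[[str], None],
           verify: Callable[[str], bool],
           log: List[str]) -> str:
    """Run one corrective action in the given mode and return its outcome."""
    if mode is Mode.RECOMMEND:
        log.append(f"recommended: {action}")
        if not approve(action):  # the human gatekeeper says no
            log.append(f"declined: {action}")
            return "declined"
    execute(action)
    log.append(f"executed: {action}")
    # Verification runs in both modes so every executed action is checked.
    ok = verify(action)
    log.append(f"{'verified' if ok else 'verification failed'}: {action}")
    return "verified" if ok else "failed"


class TrustPolicy:
    """Hypothetical rule: earn autonomy through a streak of verified outcomes."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.streak = 0

    def mode(self) -> Mode:
        return Mode.AUTONOMOUS if self.streak >= self.threshold else Mode.RECOMMEND

    def record(self, outcome: str) -> None:
        # Any decline or failed verification resets the accumulated trust.
        self.streak = self.streak + 1 if outcome == "verified" else 0


# Hypothetical usage: three runs of the same action with stub callbacks.
policy = TrustPolicy(threshold=2)
log: List[str] = []
for _ in range(3):
    outcome = handle("restart port 7", policy.mode(),
                     approve=lambda a: True,
                     execute=lambda a: None,
                     verify=lambda a: True,
                     log=log)
    policy.record(outcome)

print("\n".join(log))
```

With `threshold=2`, the first two runs are recommended and approved, and the third runs autonomously, so no `recommended:` entry appears for it. A single declined or failed run drops the policy back to recommendation mode, which is one simple way to keep control with the user.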

Additional blogs in this series

Decision-making with Real AI Beats a 6th Sense Every Time

Anomaly Detection in Networks and a Few Tools in the Box