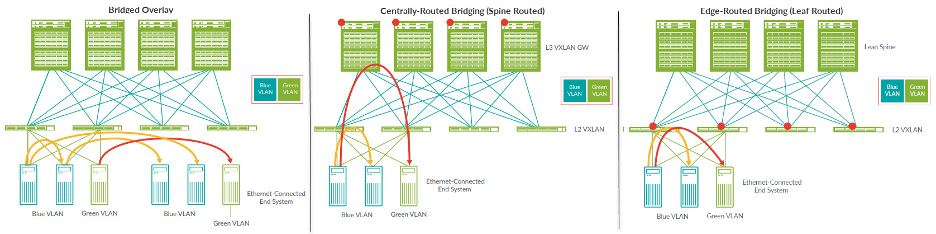

In a previous blog on Getting Started with Modern Data Center Fabrics, we discussed the common modern DC architecture of an IP fabric to provide base connectivity, overlaid with EVPN-VXLAN to provide end-to-end networking. Before rolling out your new fabric, you need to design your overlay. In this blog, we discuss the third option shown in the diagram below: Edge-Routed Bridging.

QuickStart – Get Hands-on

For those who prefer to “Try first, read later”, head to Juniper vLabs, a (free!) web-based lab environment that you can access any time to try Juniper products and features in a sandbox-type environment. Among its many offerings is an IP Fabric with EVPN-VXLAN topology, which includes a data center setup pre-built with an edge-routed bridging architecture.

Simply register for an account, log in, check out the IP Fabric with EVPN-VXLAN topology details page and you are on your way. You’ll be in a protected environment, so feel free to explore and mess around with the setup. Worried you’ll break it? Don’t be. You can tear down your work and start a new session any time.

What is an Edge-Routed Bridging Architecture?

As discussed in a recent blog, the bridged overlay model does not provide a mechanism for inter-VXLAN routing functionality within the fabric. Depending on your requirements, this may be sufficient – or even desired. However, if you want routing to happen within the fabric you need to consider another option.

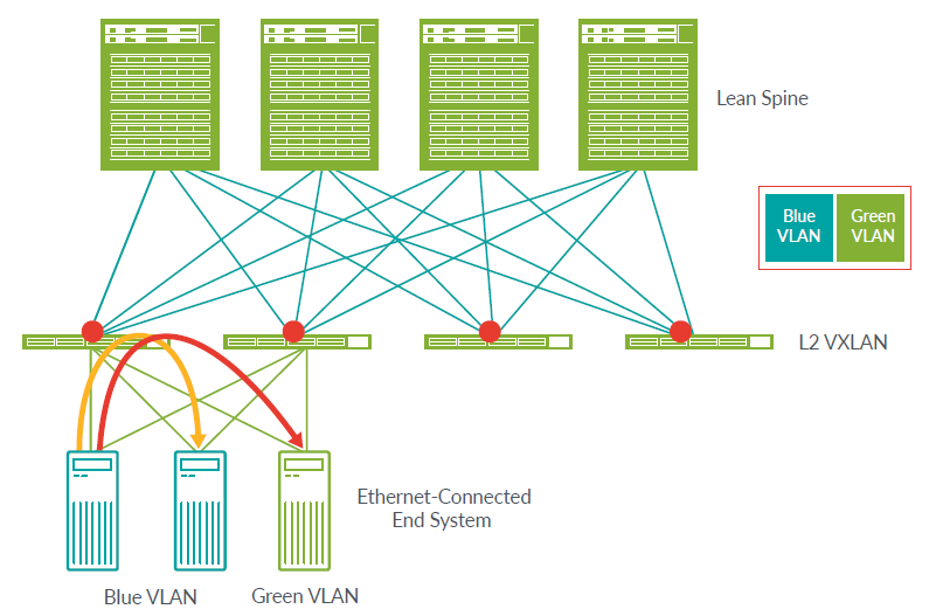

Both the centrally-routed bridging (CRB) and edge-routed bridging (ERB) architectures bring routing into the fabric. The difference between these options is where the ‘intelligence’ lies. With the CRB model, also known as a spine-routed overlay, inter-VXLAN routing is performed at the spine layer. With the ERB model, also known as a leaf-routed overlay and shown below, it’s performed at the leaf layer.

With the ERB approach, both bridging and routing happen at the leaf layer. In the diagram above, traffic flowing between servers in the blue VLAN and attached to the same leaf device can be locally switched at the leaf layer. Traffic between the blue VLAN and green VLAN must be routed, and that also happens at the leaf layer. This is helpful when the endpoints are attached to the same leaf device because, unlike in the CRB model, there is no need to send traffic any further into the fabric.
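The leaf's decision described above can be pictured with a small Python sketch. This is a simplified model and not device behavior: the `Endpoint` class, the `erb_leaf_action` function, and the leaf, VLAN, and server names are all hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    leaf: str  # leaf device the endpoint attaches to (hypothetical name)
    vlan: str  # VLAN the endpoint belongs to (hypothetical name)


def erb_leaf_action(src: Endpoint, dst: Endpoint) -> str:
    """Describe what the ingress leaf does with traffic from src to dst.

    Simplified ERB model: same VLAN means bridging, different VLANs mean
    routing, and both are done by the leaf the source attaches to.
    """
    step = "bridge" if src.vlan == dst.vlan else "route"
    if src.leaf == dst.leaf:
        # Both endpoints hang off this leaf, so traffic never enters the fabric.
        return f"{step} locally on {src.leaf}"
    # Destination sits behind another leaf, so traffic crosses the overlay.
    return f"{step} on {src.leaf}, then send over the VXLAN overlay to {dst.leaf}"


a = Endpoint("server-a", leaf="leaf1", vlan="blue")
b = Endpoint("server-b", leaf="leaf1", vlan="blue")
c = Endpoint("server-c", leaf="leaf1", vlan="green")
d = Endpoint("server-d", leaf="leaf2", vlan="green")

for dst in (b, c, d):
    print(f"{a.name} -> {dst.name}: {erb_leaf_action(a, dst)}")
```

The first two cases correspond to the blue-to-blue and blue-to-green examples in the text; the third shows that the routing step still happens on the ingress leaf when the destination is attached elsewhere.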

In the ERB architecture, the spine devices perform only IP routing in support of the leaf devices. For this reason, they are sometimes called ‘lean spines’.

Why an Edge-Routed Bridging Architecture?

First and foremost, the ERB model is a good option when you want inter-VXLAN routing to happen within the fabric. As far as in-fabric options go, the ERB approach has the advantage of simplifying the overall network as bridging and routing functionality are consolidated at the leaf layer (versus distributing those functions with the CRB model). This removes the need to extend the bridging overlays to the spine devices, leaving them to handle only IP traffic.

The ERB model is optimized for DCs with lots of east-west traffic and, for large-scale DCs built in ‘pods’, intra-pod traffic. In particular, it provides lower latency between endpoints on different VLANs connected to the same leaf device. In the CRB model, inter-VXLAN traffic must be routed at the spine layer. In the ERB model, traffic flows from an endpoint upstream to its attached leaf device, which routes the traffic and forwards it directly to the destination endpoint with no additional hops.
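To make the hop difference concrete, here is a rough Python sketch that lists the fabric devices an inter-VLAN flow visits under each model. It assumes a plain two-tier leaf-spine fabric; the function `inter_vlan_path` and the device names are hypothetical.

```python
def inter_vlan_path(model: str, src_leaf: str, dst_leaf: str,
                    spine: str = "spine1") -> list[str]:
    """Return the fabric devices visited by traffic routed between two VLANs.

    Assumes a two-tier leaf-spine fabric and ignores the endpoints themselves.
    """
    if model == "ERB":
        if src_leaf == dst_leaf:
            # The leaf routes and delivers the traffic itself.
            return [src_leaf]
        # Routed on the ingress leaf, then carried across a spine.
        return [src_leaf, spine, dst_leaf]
    if model == "CRB":
        # Routing happens on the spine, even when both endpoints share a leaf.
        return [src_leaf, spine, dst_leaf]
    raise ValueError(f"unknown model: {model!r}")


for model in ("CRB", "ERB"):
    path = inter_vlan_path(model, "leaf1", "leaf1")
    print(f"{model}: {' -> '.join(path)} ({len(path)} device visits)")
```

In this model the two architectures only differ when both endpoints share a leaf: CRB visits the leaf, a spine, and the leaf again, while ERB stays on the one leaf.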

When device failures occur, the ERB model is less impacted and provides faster reconvergence. In a CRB architecture, a few spine devices contain all of the DC’s routing information as well as the inter-VXLAN gateways (IRB interfaces), so a spine failure triggers a significant reconvergence process. Since routing is distributed across many leaf devices in the ERB architecture, a device failure has a smaller impact, or ‘blast radius’, so reconvergence can happen more quickly.
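A back-of-the-envelope way to picture the ‘blast radius’ is to ask what share of the fabric's inter-VXLAN gateway devices disappears when one device fails. The sketch below is only arithmetic under that crude assumption; it says nothing about actual reconvergence times, and the function name and device counts are hypothetical.

```python
def gateway_share_lost(gateway_devices: int, failed: int = 1) -> float:
    """Fraction of the fabric's inter-VXLAN gateway devices lost to a failure."""
    if gateway_devices < 1 or not 0 <= failed <= gateway_devices:
        raise ValueError("need at least one gateway device and 0 <= failed <= total")
    return failed / gateway_devices


# Hypothetical fabric size, for illustration only.
SPINES = 4   # CRB: the gateways (IRB interfaces) live on the spines
LEAVES = 32  # ERB: the gateways live on the leaves

crb_share = gateway_share_lost(SPINES)
erb_share = gateway_share_lost(LEAVES)
print(f"CRB, one spine down: {crb_share:.1%} of gateway devices lost")
print(f"ERB, one leaf down:  {erb_share:.1%} of gateway devices lost")
```

With these example numbers, a single failure removes a quarter of the gateway devices in the CRB case and roughly 3% in the ERB case.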

Another reason to use the ERB approach is that it allows you to use more affordable spine devices. Since the spine layer aggregates leaf-layer connections, port density is an important factor. However, since inter-VXLAN routing is performed at the leaf layer, the spine devices don’t require that functionality. This means you can consider older-model switches and/or more port-dense devices at the spine layer.

Overall, Juniper recommends using the edge-routed bridging architecture when possible.

Implementing an Edge-Routed Bridging Overlay

With any EVPN-VXLAN architecture, you must configure some common elements (we described these in a previous blog, Getting Started with Modern Data Center Fabrics), including:

- BGP-based IP fabric as the underlay

- EVPN as the overlay control plane

With an ERB overlay, the (lean) spine devices handle only IP traffic so you’re all set with just the configuration above. The leaf devices require additional configuration elements to enable VXLAN as the overlay data plane, including:

- Enabling VXLAN and supporting parameters

- Adding IRB interfaces and inter-VXLAN routing instances

- Mapping VLAN IDs to VXLAN IDs

- Assigning VLANs to the interfaces connecting to endpoints
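Before turning the leaf elements listed above into device configuration, it can help to write the plan down as data and sanity-check it. The Python sketch below is one possible way to do that and is not a Juniper tool: the `LeafOverlayPlan` class, its field names, and the example VLAN, VNI, and port values are all hypothetical. The only protocol facts it relies on are that VLAN IDs run from 1 to 4094 and that a VXLAN network identifier (VNI) is a 24-bit value; individual platforms may reserve some values.

```python
from dataclasses import dataclass, field

MAX_VLAN_ID = 4094      # usable 802.1Q VLAN IDs are 1..4094
VNI_LIMIT = 2 ** 24     # the VNI is a 24-bit field; platforms may reserve values


@dataclass
class LeafOverlayPlan:
    vlan_to_vni: dict[int, int]                                  # VLAN ID -> VXLAN ID
    irb_vlans: set[int] = field(default_factory=set)             # VLANs with an IRB gateway
    access_ports: dict[str, int] = field(default_factory=dict)   # port name -> VLAN ID

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the plan is consistent."""
        issues = []
        for vlan, vni in self.vlan_to_vni.items():
            if not 1 <= vlan <= MAX_VLAN_ID:
                issues.append(f"VLAN {vlan} is outside 1..{MAX_VLAN_ID}")
            if not 0 <= vni < VNI_LIMIT:
                issues.append(f"VNI {vni} does not fit in 24 bits")
        vnis = list(self.vlan_to_vni.values())
        if len(vnis) != len(set(vnis)):
            issues.append("two VLANs map to the same VNI")
        for vlan in sorted(self.irb_vlans):
            if vlan not in self.vlan_to_vni:
                issues.append(f"IRB for VLAN {vlan} has no VNI mapping")
        for port, vlan in self.access_ports.items():
            if vlan not in self.vlan_to_vni:
                issues.append(f"port {port} uses VLAN {vlan}, which has no VNI mapping")
        return issues


# Example values are made up for illustration.
plan = LeafOverlayPlan(
    vlan_to_vni={100: 10100, 200: 10200},
    irb_vlans={100, 200},
    access_ports={"port-1": 100, "port-2": 200},
)
print(plan.problems() or "plan looks consistent")
```

A check like this catches simple planning slips, such as an endpoint port assigned to a VLAN that was never mapped to a VXLAN ID, before any device is touched.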

With that, we’ve covered the basics for using an ERB overlay architecture. There are plenty of other details to consider, but this will get you started. We’ll discuss other architectures in a future blog. In the meantime…

Keep Going

To learn more, we have a range of resources available.

Read it – Whitepapers and Tech Docs:

Learn it – Take a training class:

- Juniper Networks Design – Data Center (JND-DC)

- Data Center Fabric with EVPN and VXLAN (ADCX)

- All-access Training Pass

Try it – Get Hands-on with Juniper vLabs