As a CIO, I doubt it will surprise anyone that the most discussed topic among my peers is artificial intelligence. But the banter isn’t only about the possibilities and where to invest first; it’s also largely about how to get AI right.

With all the excitement about how it can make businesses run more efficiently and improve productivity like never before, you wouldn’t be doing your job if you weren’t seriously engaging with this technology. Yet big questions remain around bias, governance, and security. This has IT leaders struggling with the balance between the fear of missing out (FOMO) and the fear of messing up (FOMU).

Sound familiar?

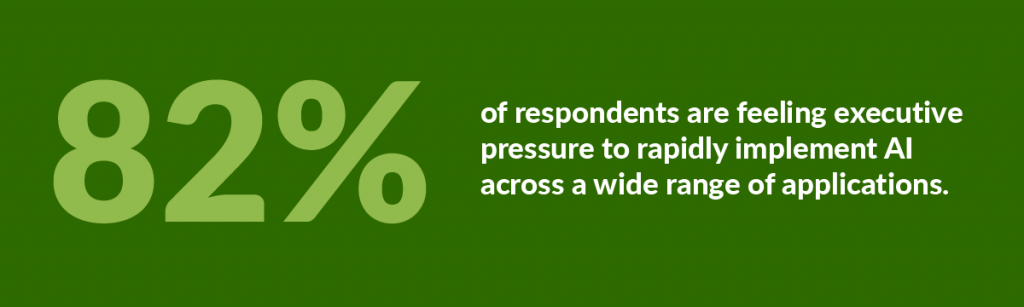

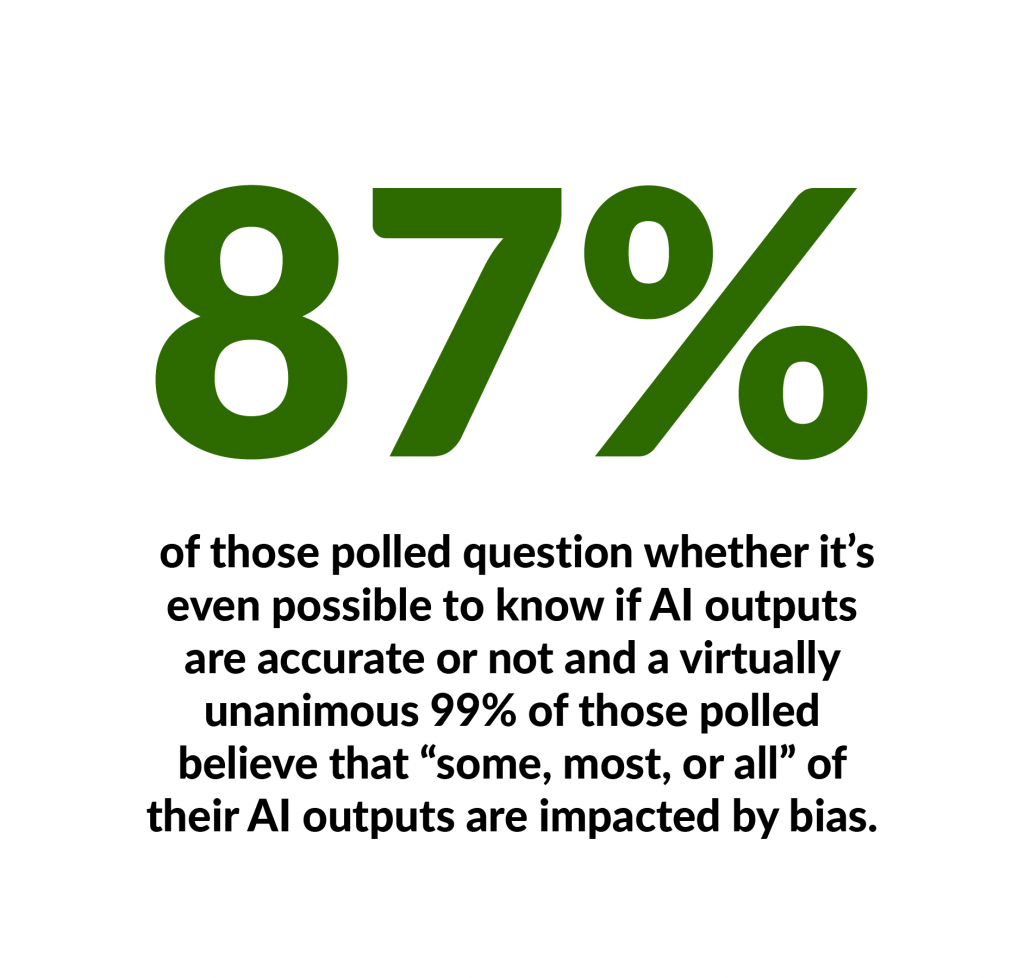

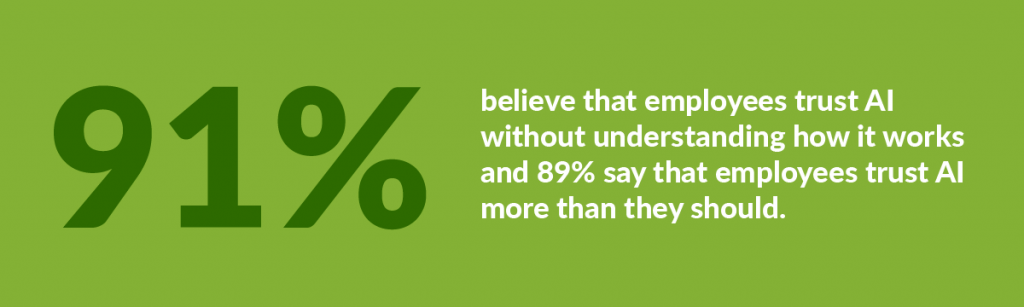

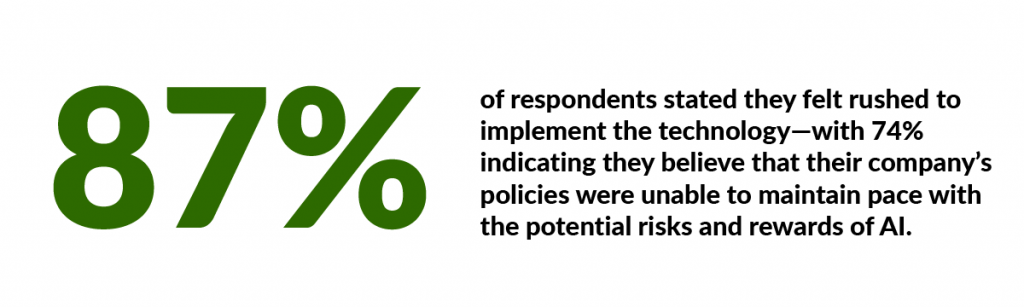

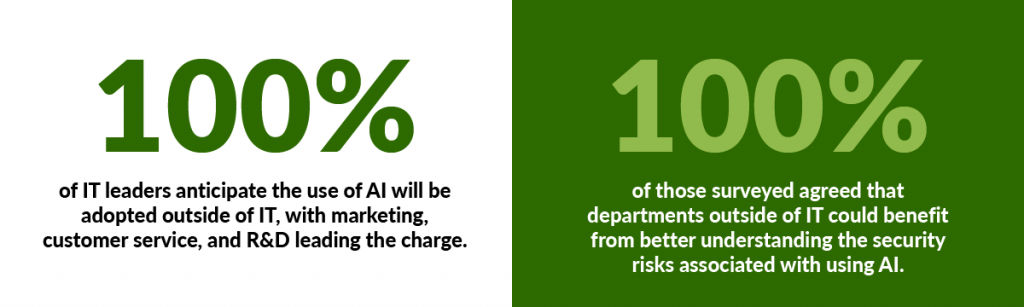

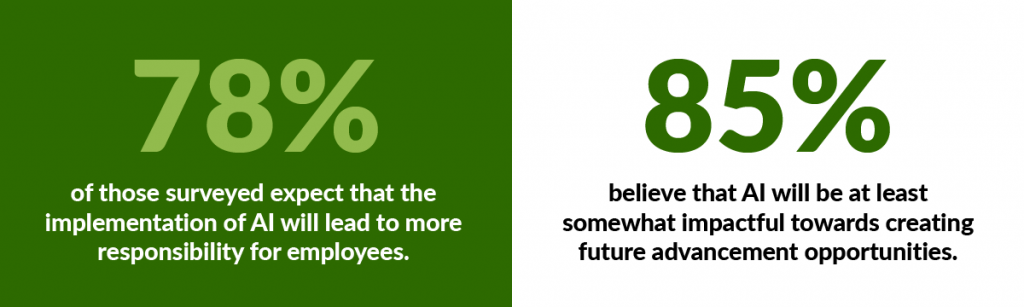

Juniper Networks recently worked with Wakefield Research to survey 1,000 global executives who are involved with AI and machine learning at their organizations. The findings underscore very real challenges across companies and industries that we need to navigate. Just consider this snapshot of several findings:

- 87% of respondents say it may not be possible to know if their company’s AI output is accurate.

- 89% say employees trust AI more than they should.

- 87% of respondents stated they felt rushed to implement the technology.

- 78% expect that the implementation of AI will lead to more responsibility for employees.

All that said, AI feels very different from other breakout technologies of recent decades, such as cloud, Internet of Things (IoT), and mobile.

AI is not just about implementing a new tool or application for efficiency; it’s also about analyzing the impact it may have on the entire organization. The fear of the unknown and the uncertainty of the consequences make AI adoption a much more complex and thought-provoking challenge for CIOs than most previous technology breakthroughs.

But I’m here to say AI doesn’t have to be this complicated or scary.

Given the considerations and challenges IT leaders everywhere currently face, I thought it would be helpful to share some illuminating survey findings along with my take on how best to navigate the uncertainties associated with AI adoption.

Pressure to implement AI

This isn’t a surprise, but it’s a good reminder of just how much pressure CIOs and technology leaders are feeling. In 2023, the flood of generative AI solutions made the possibilities of AI more tangible while large language models (LLMs) made AI more accessible. CIOs and business leaders are on their toes because they know that if the hype is true and they don’t move quickly, they risk a competitive disadvantage in OpEx, productivity, and speed. While the urgency is palpable, it’s important to find ways to proceed cautiously without being left behind. Start safe, where the scale is manageable, because you will need to tune for accuracy and iterate quickly. Maybe even place some reasonable bets on things that weren’t possible before – you’re no worse off than you were, and at least you’ll be learning in the process.

Perceptions about AI accuracy

Much of this fear and doubt is driven by near-universal concerns about the data used as source material for AI. Ever since OpenAI launched ChatGPT, the industry has raced to catch up, and generative AI has had an outsized impact on the way people perceive AI in general. That perception is largely shaped by the media’s focus on things like AI hallucinations and the inescapable bias in the content used to train the LLMs.

But there’s more to the story.

Of course, when you are considering a generative AI solution that relies on scraped data, it’s essential to tease apart different classes of problems to consider the potential for bias. Training these kinds of LLMs also requires leaders to look at how the data will be ingested into the model, how the AI is tested, and who is using the models once complete. Techniques like retrieval-augmented generation (RAG) let you steer the model toward answers you know are accurate, and human-in-the-loop solutions can help ensure accuracy.

Specialized AI and machine learning solutions that depend on more reliable data are a different matter. In Juniper’s AI-Native Network, for example, we rely on data that comes from ongoing operations, so we know it’s accurate and arguably biased in a good way.

Employee trust in AI outputs

Interestingly, one challenge many see in the emerging world of AI is the blind faith their employees seem to have in the technology. Fortunately, addressing trust-related concerns is relatively straightforward. Leadership needs to prioritize training and education that equips employees with the knowledge they need to make informed decisions about safely using AI in high- vs. low-stakes situations.

Employees unknowingly relying on software trials or easily purchased tools with embedded AI is arguably more concerning for enterprises than many hallucination or bias scenarios. Ultimately, appropriate education and training on the different layers of concerns within AI use is essential.

Rapid deployment vs. policy considerations

When you consider how fast solutions are evolving and what they are capable of, it’s understandable why the push for rapid onboarding of AI is creating a tension point in many enterprises. It’s also understandable why policies for such powerful technology are often a sticking point.

Keep in mind, however, that you don’t have to completely reinvent the wheel when it comes to AI and company policies. Instead, it’s more worthwhile to think about policies in terms of evolution rather than revolution. For example, most companies already have clear policies on what data employees can or can’t share with third parties. In many cases, it may be possible to simply restate policies in clear terms noting that they also apply to external generative AI tools. Remember to also consider software purchase policies and add addendums requiring extra review for any solutions with embedded AI.

Shadow IT concerns

Based on what I’ve seen over the years, these numbers reflect a new iteration of the ongoing shadow IT challenge. As with any shadow IT-related concern, governance is the key. It doesn’t have to be a huge effort involving cross-functional leaders in every purchase and deployment decision. It also doesn’t mean just saying no. It means making sure you ask the right questions and rely on the right people for specific use cases, especially for anything pertaining to sensitive information.

With an AI-driven HR application, for example, you would need to go through a bias exercise based on any applicable laws. In this case, it would be important to pull in legal and HR experts to ensure the solution is up to standards. When it comes to sensitive areas, it’s especially important to consider vendor sophistication, including their approach to AI, and their track record with previous solutions.

Workforce disruption

It’s undeniable that AI, like any new disruptive technology, will eventually replace people for many kinds of work. But it’s also inevitable that AI will lead to new skills requirements and jobs. To get ahead, I’d argue that it’s important to enhance skills related to responsibilities that can’t be handled with statistics. While generative AI manifests itself in natural language, it’s still largely statistics.

And don’t forget that the ability to make yourself and those around you more effective using AI tools has rapidly become a sought-after, marketable skill. People who stay engaged and keep enhancing their skills will have the most opportunities.

What will 2024 reveal?

While the concept of AI has been around for over 70 years, it’s only been over the last few years that it’s broken through into the mainstream consciousness. 2023 marked the watershed moment where AI moved from the realm of sci-fi to being a baseline business requirement. Hype and hand-wringing aside, one thing is certain: 2024 will be a year we learn a lot about how dramatically AI is likely to reshape the world.

In the meantime, we absolutely need to be thinking about where AI makes sense and prioritizing high-impact, low-risk solution areas, such as networking, to lay the foundation for ongoing success with AI.

Want to learn more about Juniper’s AI-Native Networking Platform? Take a deep dive into what makes Juniper’s AI-Native Networking Platform tick, and how it delivers better and more personalized experiences for operators and end users.