At Juniper Networks, we have been leading the data center design conversation for many years. From Spanning Tree Protocol (STP or xSTP) and MC-LAG to leaf-and-spine IP fabrics and network virtualization with EVPN-VXLAN, we have played a key role in establishing the standards-based architectures that power data centers around the world. And when we’re not spending time defining new architectures and building next-generation platforms, we’re busy adding functionality to our products that provides better control and the ability to optimize, or tune, the data center environment. Let’s dive into what we’ve been working on for our QFX Series platforms.

Simplified interconnect for large-scale DC fabric infrastructures with seamless EVPN-VXLAN tunnel stitching

Enabling larger EVPN-VXLAN data center infrastructure can be a scaling challenge, requiring additional tools to fully control the way workloads communicate across fabric pods or DC sites. Starting with Junos OS release 20.3, seamless EVPN-VXLAN stitching offers a method to interconnect pods and sites at their edges that provides improved control and scaling.

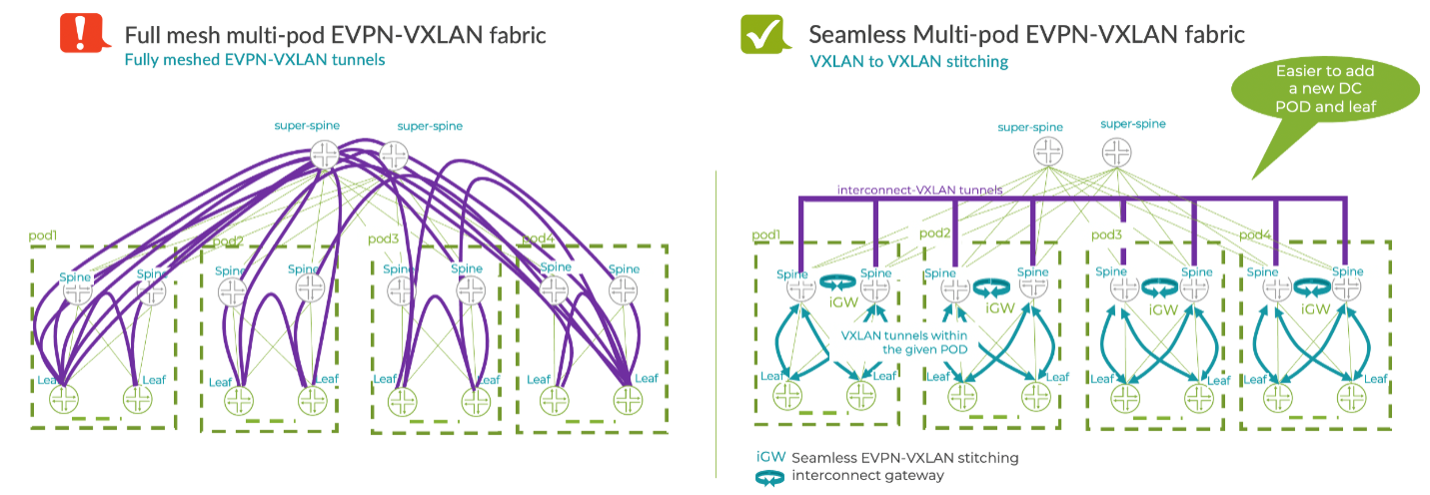

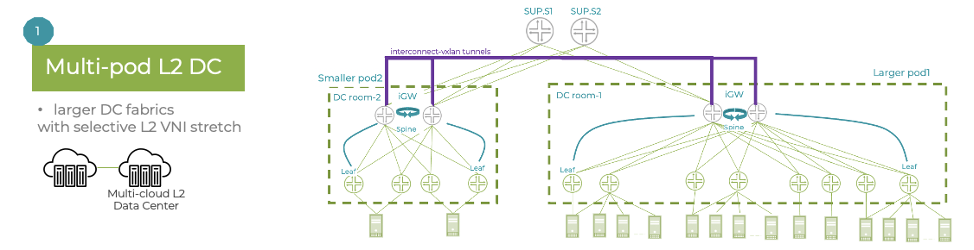

The figure below shows a data center with four pods. The left side shows an approach that interconnects pods using a full mesh of leaf-to-leaf VXLAN tunnels. The right side uses EVPN-VXLAN stitching, where the intra-pod tunnels terminate at their local interconnect gateway and only a few VXLAN tunnels are needed to interconnect the pods. In this example, tunnel stitching happens at the spine layer; however, it can also be done at the super-spine or border-leaf layer, depending on the DC design.

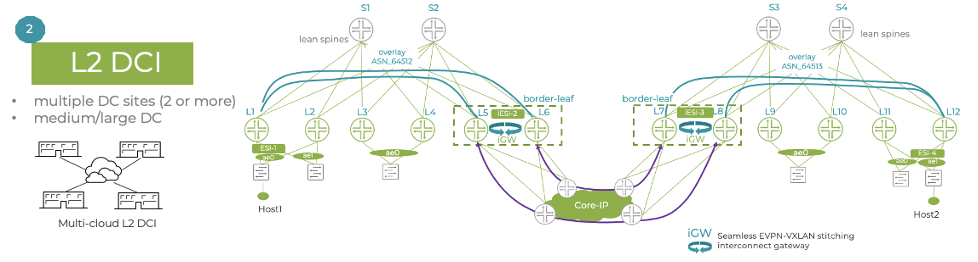
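
To make the scaling difference concrete, here is a rough Python sketch that counts tunnels for both approaches. It is a simplified counting model, not a description of how Junos allocates tunnels or next-hops: the function names, the pod sizes (four pods, eight leaves and two gateways per pod) and the assumption that leaves keep a full mesh inside their own pod are all illustrative.

```python
from math import comb


def full_mesh_tunnels(pods: int, leaves_per_pod: int) -> dict:
    """Every leaf builds a VXLAN tunnel to every other leaf in every pod."""
    total_leaves = pods * leaves_per_pod
    return {
        "per_leaf": total_leaves - 1,      # remote VTEPs each leaf must track
        "total": comb(total_leaves, 2),    # unique leaf-to-leaf pairs
    }


def stitched_tunnels(pods: int, leaves_per_pod: int, gateways_per_pod: int) -> dict:
    """Leaf tunnels stay inside the pod; only the gateways connect pods."""
    # Intra-pod: leaf-to-leaf pairs plus each leaf to each local gateway.
    intra_pod = comb(leaves_per_pod, 2) + leaves_per_pod * gateways_per_pod
    # Inter-pod: gateway pairs, excluding pairs that sit in the same pod.
    total_gateways = pods * gateways_per_pod
    inter_pod = comb(total_gateways, 2) - pods * comb(gateways_per_pod, 2)
    return {
        "per_leaf": (leaves_per_pod - 1) + gateways_per_pod,
        "total": pods * intra_pod + inter_pod,
    }


print(full_mesh_tunnels(4, 8))      # {'per_leaf': 31, 'total': 496}
print(stitched_tunnels(4, 8, 2))    # {'per_leaf': 9, 'total': 200}
```

Under these assumptions, each leaf tracks 9 remote tunnel endpoints instead of 31, and the per-leaf count no longer grows as pods are added.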

Seamless EVPN-VXLAN stitching has two main use cases:

Multi-pod DC fabric architectures: the interconnect gateways are placed at the spine layer, unifying scaling across the pods.

Data center interconnect (DCI): instead of using an over-the-top (OTT) full mesh between sites, the interconnect gateways create the DCI VXLAN tunnels, thus reducing the number of tunnels and next-hops.

Seamless EVPN-VXLAN stitching simplifies Layer 2 DCI and multi-pod architectures by providing clear demarcation points between pods and sites, thereby enabling improved flood control. As a result, this solution offers better overall scaling.

Improved virtualization and multitenancy with MAC-VRF

Leveraging and implementing virtualization and multitenancy in the data center can be complex, requiring multiple touch points in the architecture to see the first benefits of virtualization.

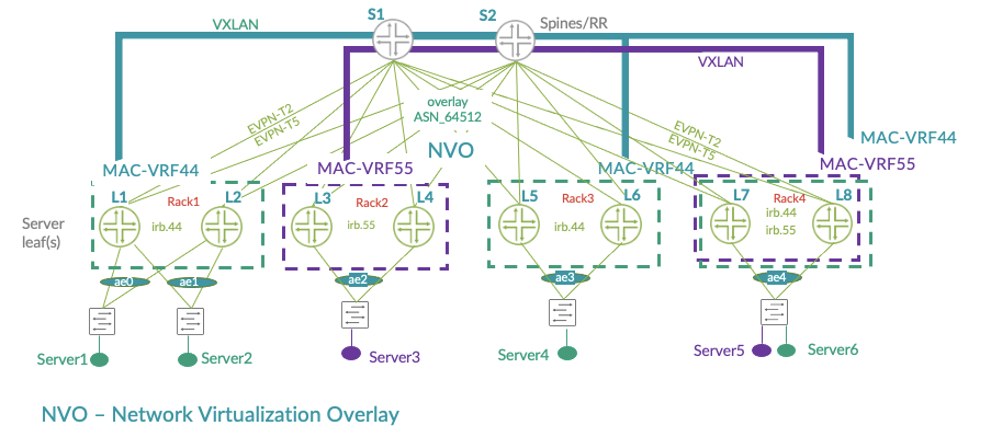

A new routing instance type, MAC-VRF, adds more flexibility when enabling new server connectivity within the fabric. And with support for edge-routed bridging (leaf routed) and bridged overlay (routed outside the fabric) architectures, MAC-VRF offers a consistent approach to enabling L2 services.

In the figure below, Tenant 44 (MAC-VRF44) and Tenant 55 (MAC-VRF55) are using dedicated MAC-VRF Layer 2 instances on the leaf devices, enabling them to be fully isolated from each other. In cases where these tenants want to communicate, they can add dedicated EVPN Type-5 Layer 3 instances (not shown) to interconnect. This provides the tenants with a range of options to support both their isolation and collaboration needs.

Overall, MAC-VRF provides additional capabilities for network virtualization and multitenancy. It also offers better control of VXLAN tunnel distribution as well as flooding optimizations. Plus, it enables interoperability with other vendors.

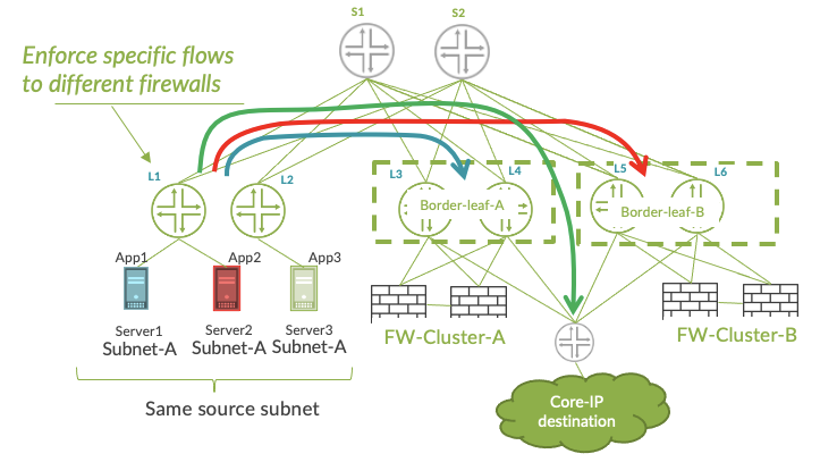
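
The isolation idea can be illustrated with a small conceptual model in Python. This is only a sketch of the behavior described above, not Junos code: the `MacVrf` and `Leaf` classes, the MAC address and the port names are hypothetical, and only the instance names MAC-VRF44 and MAC-VRF55 come from the example. Each instance keeps its own MAC table, so the same MAC address can exist in both tenants without conflict and a lookup never crosses tenants.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class MacVrf:
    """One Layer 2 instance with its own, private MAC table."""
    name: str
    mac_table: Dict[str, str] = field(default_factory=dict)  # MAC -> port

    def learn(self, mac: str, port: str) -> None:
        self.mac_table[mac] = port

    def lookup(self, mac: str) -> Optional[str]:
        return self.mac_table.get(mac)


@dataclass
class Leaf:
    """A leaf device hosting several MAC-VRF instances side by side."""
    instances: Dict[str, MacVrf] = field(default_factory=dict)

    def add_instance(self, name: str) -> MacVrf:
        self.instances[name] = MacVrf(name)
        return self.instances[name]

    def forward(self, instance: str, dst_mac: str) -> Optional[str]:
        # Lookups are scoped to the sender's instance only.
        return self.instances[instance].lookup(dst_mac)


leaf = Leaf()
tenant44 = leaf.add_instance("MAC-VRF44")
tenant55 = leaf.add_instance("MAC-VRF55")

# Hypothetical MAC and ports: the same MAC is learned independently per tenant.
tenant44.learn("00:00:5e:00:53:01", "port-a")
tenant55.learn("00:00:5e:00:53:01", "port-b")
tenant44.learn("00:00:5e:00:53:02", "port-c")

print(leaf.forward("MAC-VRF44", "00:00:5e:00:53:01"))  # port-a
print(leaf.forward("MAC-VRF55", "00:00:5e:00:53:01"))  # port-b
print(leaf.forward("MAC-VRF55", "00:00:5e:00:53:02"))  # None
```

The last lookup returns `None` because Tenant 55 has no visibility into Tenant 44's MAC table; in the real fabric, reaching that host would require the separate Layer 3 interconnect mentioned above.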

Application awareness and traffic steering with filter-based forwarding

Not all traffic is equal. When deploying applications in a data center, some applications require special treatment, whether it’s due to how much we trust their traffic or because of the volume of traffic they generate. When these applications are located within the same subnet, it can be challenging to provide differentiation. Something is needed to identify and separate each application’s traffic.

Filter-based forwarding (FBF) can help. FBF may not be new, but applying it to edge-routed bridging architectures injects more intelligence into the DC fabric. FBF on QFX5120 leaf nodes enables the operator to forward each application’s traffic as they wish. This makes it possible to enable app steering during a specific time of day, or if a particular app/server begins to show suspicious behavior from a security point of view.

In the figure below, three servers have been deployed in the same IP subnet and, by default, their traffic will all be treated the same. However, each server’s traffic has different characteristics: App1 is creating a lot of ‘elephant’ traffic; App2 has low volume but its traffic needs to flow through a specific firewall cluster for more advanced policing; and App3 generates lots of traffic but is fully trusted, so it can flow directly out to the core IP network.

Using filter-based forwarding at the leaf layer adds application awareness to the data center fabric. It also improves load balancing and flow engineering capabilities and offers improved flow isolation.

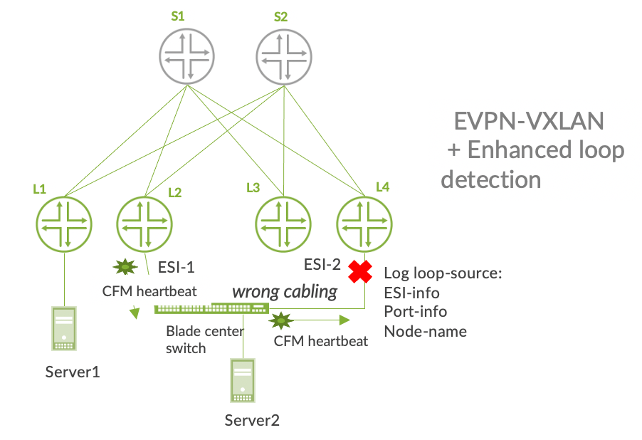
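
The three-server example can be sketched in Python as an ordered list of match terms, each steering a source address to a different forwarding path. This is a conceptual model rather than QFX configuration: the IP addresses, the path names and the `select_path` helper are all hypothetical, chosen only to mirror App1, App2 and App3 sharing one subnet.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List


@dataclass(frozen=True)
class FilterTerm:
    name: str
    source_prefix: str      # which sender this term matches
    forwarding_path: str    # where matching traffic is steered


DEFAULT_PATH = "default-routing"

# Hypothetical addresses: all three servers live in 10.1.1.0/24.
TERMS: List[FilterTerm] = [
    FilterTerm("app1-elephant", "10.1.1.11/32", "elephant-flow-path"),
    FilterTerm("app2-inspect", "10.1.1.12/32", "firewall-cluster"),
    FilterTerm("app3-trusted", "10.1.1.13/32", "core-direct"),
]


def select_path(src_ip: str, terms: List[FilterTerm]) -> str:
    """Return the forwarding path of the first matching term."""
    addr = ip_address(src_ip)
    for term in terms:
        if addr in ip_network(term.source_prefix):
            return term.forwarding_path
    # Unmatched traffic keeps the default, destination-based behavior.
    return DEFAULT_PATH


for ip in ("10.1.1.11", "10.1.1.12", "10.1.1.13", "10.1.1.99"):
    print(ip, "->", select_path(ip, TERMS))
```

Because the match is on the sender rather than the destination subnet, servers in the same subnet can follow different paths, and changing one term (for example, redirecting a suspicious server to the firewall cluster) leaves the others untouched.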

Improved fabric hardening with enhanced Ethernet loop detection

Modern data center EVPN-VXLAN fabrics have eliminated many of the challenges of traditional 3-tier architectures. One such challenge is loop detection and prevention. Leaf-spine architectures use all-active link designs, and EVPN includes several built-in mechanisms (split horizon, designated forwarder election, MAC mobility tracking) that lower the risk of network instability compared to legacy Spanning Tree-based infrastructures.

Still, loops can happen when server-to-leaf connections are mis-cabled or misconfigured. Since uptime is a critical metric for any data center, many vendors still recommend using STP. Yes, STP in a modern DC! Fortunately, there’s a better way.

Starting with Junos OS release 20.4, the QFX5120 supports connectivity fault management (CFM) in the DC fabric. Based on the IEEE 802.1ag standard, CFM’s heartbeat mechanism provides enhanced Ethernet loop detection over legacy options like xSTP and BGP. But that’s not all. Through information-sharing within the QFX platform, EVPN can supply details such as node name, port name and ESI to the CFM TLVs to help identify the source of the problem.

In the figure below, Server 2 is connected to leaf devices L2 and L4. Both leaf devices are using the same trunk-level VLAN ID; however, they have accidentally been configured to use different ESI values. This could create an Ethernet loop. But thanks to CFM heartbeats, the loop has been blocked. Plus, because CFM TLV extensions include details about the problem, the origin of the loop can be identified.

This solution represents a more elegant approach for loop detection within an EVPN-VXLAN fabric, truly eliminating the need for legacy loop detection solutions like xSTP. It also reduces loop detection times and enhances visibility into the cause of the issue, thereby reducing time to resolution.
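
The ESI-mismatch scenario can be modeled with a short Python sketch. It is a conceptual illustration only and does not reflect the actual Junos or IEEE 802.1ag frame handling: the `Heartbeat` and `AccessPort` types, the port names, the ESI values and the rule "a heartbeat on the same VLAN with a different ESI signals a loop" are assumptions made for this example. Only the leaf names L2 and L4 come from the scenario above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Heartbeat:
    """Fields assumed to be carried in the heartbeat's TLV extensions."""
    node: str
    port: str
    vlan_id: int
    esi: str


@dataclass(frozen=True)
class AccessPort:
    node: str
    port: str
    vlan_id: int
    esi: str


def check_heartbeat(local: AccessPort, hb: Heartbeat) -> Optional[str]:
    """Return a loop report if the heartbeat suggests a loop, else None."""
    if hb.vlan_id != local.vlan_id:
        return None  # different VLAN: not relevant to this port
    if hb.node != local.node and hb.esi == local.esi:
        return None  # peer on the same Ethernet segment: consistent multihoming
    # Same VLAN but mismatched ESI (or our own heartbeat came back):
    # report where it came from so the operator can find the origin.
    return (
        f"loop suspected on {local.node}:{local.port} "
        f"(local ESI {local.esi}); heartbeat from {hb.node}:{hb.port} "
        f"(ESI {hb.esi})"
    )


# Hypothetical ports and ESI values for leaf devices L2 and L4.
l2_port = AccessPort("L2", "xe-0/0/10", 100, "00:11:11:11:11:11:11:11:11:11")
hb_from_l4 = Heartbeat("L4", "xe-0/0/10", 100, "00:22:22:22:22:22:22:22:22:22")

report = check_heartbeat(l2_port, hb_from_l4)
if report:
    print(report)  # a real device would also block the port at this point
```

The report names both ends of the problem, which mirrors the point above: the value of the TLV details is not just detecting the loop but pointing at the misconfigured node and port.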

Join us on March 17th at 12PM EST for Masterclass #4: EVPN-VXLAN Data Center to get into more detail on these topics.