Artificial Intelligence (AI) and High-Performance Computing (HPC) are currently experiencing significant growth driven by advancements in machine learning, natural language processing, generative AI, robotics, and autonomous systems.

At the core of these innovations is large-scale distributed training of models that often comprise billions, or even trillions, of parameters spread across many GPUs. During training, the GPU nodes exchange large volumes of data and gradients over the backend AI Ethernet fabric to stay synchronized. Packet loss can severely disrupt this synchronization, causing retransmissions or communication stalls that increase latency, lengthen job completion times (JCT), and waste expensive GPU resources.

The challenge: silent packet drops in the AI data center fabric

JCT is a crucial metric. Modern AI workloads, particularly large-scale training and inference, rely on tight synchronization across the cluster, so even a single lost packet can significantly degrade performance and increase operational costs.

For example, RoCE v2 packets may get dropped in the AI Ethernet/IP fabric when switch buffers overflow due to traffic congestion. These lost packets must be retransmitted, causing delays and interrupting the flow of training or inference.

Explicit Congestion Notification (ECN) signals congestion by marking bits in the IP header of packets that are still forwarded. It does not identify which specific packets were dropped due to congestion and therefore need to be re-sent.

The solution: Drop Congestion Notification (DCN)

To address this, Juniper has introduced Drop Congestion Notification (DCN), a new congestion management capability available in the 23.4x100d40 Junos OS Evolved software release for the Broadcom Tomahawk 5-based QFX5240-OD and QFX5240-QD, which are 64 x 800GbE Ethernet/IP platforms.

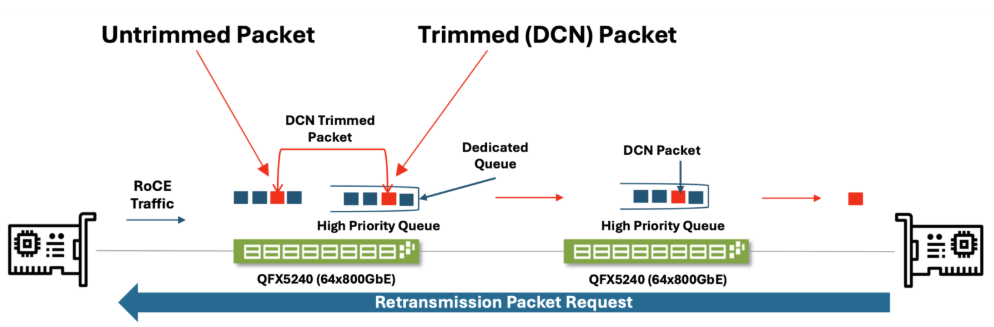

When severe congestion would otherwise cause a packet to be dropped, the switch instead trims the packet by stripping its payload and forwards the trimmed packet to the receiving host through a high-priority queue, as a notification that the packet was dropped. Transit switches in the fabric recognize these trimmed DCN packets and also place them in the high-priority queue.
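To make the switch-side behavior concrete, here is a minimal Python model of a single egress port. It assumes a simple byte-count buffer, treats "DCN traffic" as packets sent to the configured UDP port (13742, taken from the example configuration), and uses a `trimmed` flag in place of whatever marking the switch really applies. The class names, the 64-byte trimmed size, and the buffer logic are illustrative assumptions, not the QFX5240 implementation.

```python
from collections import deque
from dataclasses import dataclass, replace

DCN_UDP_PORT = 13742  # UDP port from the example configuration
TRIMMED_BYTES = 64    # hypothetical size of a trimmed (header-only) packet


@dataclass(frozen=True)
class Packet:
    flow: str
    psn: int               # packet sequence number within the flow
    udp_dport: int
    size: int
    trimmed: bool = False  # stands in for however the real switch marks a trimmed packet


class EgressPort:
    """Toy model of one switch egress port: a finite data buffer plus a DCN queue."""

    def __init__(self, buffer_bytes: int):
        self.buffer_bytes = buffer_bytes
        self.used_bytes = 0
        self.data_q = deque()  # normal data queue, limited by buffer_bytes
        self.dcn_q = deque()   # dedicated queue for trimmed DCN packets
        self.dropped = []      # packets lost without any notification

    def enqueue(self, pkt: Packet, dcn_enabled_on_ingress: bool = True) -> None:
        if pkt.trimmed and pkt.udp_dport == DCN_UDP_PORT:
            # Transit role: an already-trimmed DCN packet goes straight to the DCN queue.
            self.dcn_q.append(pkt)
        elif self.used_bytes + pkt.size <= self.buffer_bytes:
            # Enough buffer space: queue the packet normally.
            self.data_q.append(pkt)
            self.used_bytes += pkt.size
        elif dcn_enabled_on_ingress and pkt.udp_dport == DCN_UDP_PORT:
            # Congestion drop of DCN traffic: trim the payload and notify instead.
            self.dcn_q.append(replace(pkt, size=min(pkt.size, TRIMMED_BYTES), trimmed=True))
        else:
            # Other traffic (or DCN disabled on the ingress port) is dropped silently.
            self.dropped.append(pkt)


port = EgressPort(buffer_bytes=2000)
for psn in range(3):
    port.enqueue(Packet("A", psn, DCN_UDP_PORT, 1000))
port.enqueue(Packet("B", 0, 5000, 1000))  # UDP port 5000: not DCN traffic
print([p.psn for p in port.data_q], [p.psn for p in port.dcn_q], [p.flow for p in port.dropped])
# → [0, 1] [2] ['B']
```

The third packet of flow A no longer fits in the buffer, so it leaves as a small trimmed notification, while the non-DCN packet from flow B simply disappears.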

As a result, the receiving host processes the trimmed DCN packets, determines exactly which packets were dropped due to congestion, and promptly requests retransmission of only those packets from the source.

The trimmed packets are not written to the target server's memory. Instead, they are used only to identify precisely which packets require selective retransmission. This avoids waiting for the longer default retransmission process and, consequently, improves end-to-end latency and job completion time.
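The host-side logic can be sketched in a few lines of Python. The sketch assumes each arrival is reduced to a `(psn, trimmed)` pair. It contrasts selective retransmission with a go-back-N sender, which is used here only as a comparison baseline; the actual default recovery behavior depends on the NIC. All function names are hypothetical.

```python
from typing import Iterable, List, Tuple


def process_arrivals(arrivals: Iterable[Tuple[int, bool]]) -> Tuple[List[int], List[int]]:
    """Split (psn, trimmed) arrivals into PSNs delivered to memory and PSNs to re-request."""
    delivered, to_request = [], []
    for psn, trimmed in arrivals:
        if trimmed:
            # Header-only DCN packet: never written to memory, only used to request a resend.
            to_request.append(psn)
        else:
            delivered.append(psn)
    return delivered, sorted(set(to_request))


def go_back_n(first_missing_psn: int, last_sent_psn: int) -> List[int]:
    """Comparison case: a go-back-N sender resends every PSN from the first gap onward."""
    return list(range(first_missing_psn, last_sent_psn + 1))


# Hypothetical window: PSNs 0..99 sent, 40 and 41 trimmed by a congested switch.
arrivals = [(psn, psn in (40, 41)) for psn in range(100)]
delivered, selective = process_arrivals(arrivals)
print(selective)                         # → [40, 41]
print(len(go_back_n(selective[0], 99)))  # → 60
print(len(delivered))                    # → 98
```

In this toy window, selective retransmission resends 2 packets where go-back-N would resend 60.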

Figure 1 illustrates a simplified topology. When packets entering the first switch encounter extreme congestion (beyond the ECN thresholds), they are not dropped; instead, they are trimmed and sent on to the target GPU server's NIC. The first switch performs the trimming, and the transit switches recognize the trimmed frame and immediately place it on the outgoing interface through the high-priority queue. Once the trimmed packet reaches the target NIC, the NIC sends a retransmission request to the originating server.

Figure 1: Drop Congestion Notification (DCN)

On the QFX5240-OD and QFX5240-QD switches, DCN packets are processed through a dedicated queue, separate from the queues that carry data packets. This separation lets you manage the latency and bandwidth assigned to DCN packets independently of data traffic.
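Why a dedicated strict-high queue helps can be shown with a minimal strict-priority scheduler in Python. This is a generic sketch of strict-priority dequeuing, assumed for illustration, not the switch's scheduler: a trimmed packet that arrives behind a backlog of data still leaves next.

```python
from collections import deque
from typing import Deque, List, Optional


def strict_priority_dequeue(queues: List[Deque[str]]) -> Optional[str]:
    """Return the head of the highest-priority non-empty queue (index 0 = highest)."""
    for q in queues:
        if q:
            return q.popleft()
    return None


data_q = deque(["data-1", "data-2", "data-3"])  # backlog of ordinary traffic
dcn_q = deque()                                  # dedicated DCN queue

order = [strict_priority_dequeue([dcn_q, data_q])]  # only data is waiting
dcn_q.append("dcn-trim-40")                         # a trimmed packet arrives behind the backlog
while (pkt := strict_priority_dequeue([dcn_q, data_q])) is not None:
    order.append(pkt)
print(order)  # → ['data-1', 'dcn-trim-40', 'data-2', 'data-3']
```

Strict priority can starve lower-priority queues if the high-priority queue is heavily loaded, which is one reason keeping this queue dedicated to small trimmed packets makes sense.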

Example configurations:

- Set the custom Layer 4 UDP port number that the switch uses to identify DCN packets. This configuration is required to enable DCN.

set class-of-service drop-congestion-notification udp-port <0..65535>

- Set the egress queue for all DCN drop packets. This is a global configuration. The queue must be one of the unicast queues, and you should configure it with strict-high priority. We also recommend dedicating this queue to DCN only. As with other CoS features, the queue is specified using a “forwarding-class”. This configuration is also required to enable DCN.

set class-of-service drop-congestion-notification forwarding-class <fc name>

- For the QFX5240 to act only as a DCN transit switch, the two configurations above (UDP port and forwarding class) are sufficient. Here, “transit” means only identifying DCN packets and transmitting them in the high-priority queue. For the QFX5240 to generate DCN drop packets during congestion, you must also enable DCN on individual ports, in addition to the configurations above.

- Enable DCN on individual ingress ports. When DCN is enabled on a port, any DCN data traffic entering on that port that is dropped in the MMU (memory management unit) due to congestion triggers a DCN drop packet.

set class-of-service interface <ingress-interface-name> drop-congestion-notification

- To enable DCN on all ports, apply the configuration above with a wildcard:

set class-of-service interface et-* drop-congestion-notification

- DCN can be enabled only on physical interfaces and on aggregated Ethernet (AE) parent interfaces.

For example:

set class-of-service drop-congestion-notification forwarding-class dcn
set class-of-service drop-congestion-notification udp-port 13742
set class-of-service interface et-0/0/0 drop-congestion-notification
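If you manage many switches, you may prefer to generate these statements rather than type them. The Python helper below is a hypothetical sketch, not a Juniper tool. It renders the global and per-port `set` commands, checks that the UDP port is in range, and uses a simple heuristic (a `.` in the name indicates a logical unit) to reject interfaces other than physical or AE parents. It does not create the forwarding-class-to-queue mapping or the strict-high scheduler, which you configure separately.

```python
from typing import List, Sequence


def dcn_config(udp_port: int, forwarding_class: str,
               ingress_interfaces: Sequence[str] = ()) -> List[str]:
    """Render DCN 'set' commands (hypothetical helper)."""
    if not 0 <= udp_port <= 65535:
        raise ValueError("udp-port must be in the range 0..65535")
    lines = [
        # Both global statements are required, even for transit-only operation.
        f"set class-of-service drop-congestion-notification forwarding-class {forwarding_class}",
        f"set class-of-service drop-congestion-notification udp-port {udp_port}",
    ]
    for ifd in ingress_interfaces:
        # Heuristic: a '.' means a logical unit (e.g. et-0/0/0.0), which DCN does not support.
        if "." in ifd:
            raise ValueError(f"{ifd}: use a physical or AE parent interface")
        lines.append(f"set class-of-service interface {ifd} drop-congestion-notification")
    return lines


# No interfaces: transit only (identify DCN packets and send them in the DCN queue).
# With interfaces: the switch also generates DCN packets for drops on those ingress ports.
for line in dcn_config(13742, "dcn", ["et-0/0/0"]):
    print(line)
```

Called with `["et-*"]`, the helper emits the wildcard form shown earlier.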

Show command for DCN drop packet statistics

root@QFX5240# run show interfaces queue et-0/0/0 forwarding-class dcn
Physical interface: et-0/0/0, up, Physical link is Down
  Interface index: 1205, SNMP ifIndex: 503
Forwarding classes: 12 supported, 9 in use
Egress queues: 10 supported, 9 in use
Queue: 7, Forwarding classes: dcn
  Queued:
    Packets              :                     0                     0 pps
    Bytes                :                     0                     0 bps
  Transmitted:
    Packets              :                     0                     0 pps
    Bytes                :                     0                     0 bps
    Tail-dropped packets :                     0                     0 pps
    Tail-dropped bytes   :                     0                     0 bps
    RED-dropped packets  :                     0                     0 pps
    RED-dropped bytes    :                     0                     0 bps
    ECN-CE packets       :                     0                     0 pps
    ECN-CE bytes         :                     0                     0 bps
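To track the DCN queue counters over time, for example from a polling script, you could parse this output. The Python function below is a hypothetical sketch that assumes the "Section:" / "Counter : total rate unit" layout shown in the sample above. Other releases or output options may format the text differently, so treat the regular expression as a starting point.

```python
import re
from typing import Dict, Tuple

COUNTER_RE = re.compile(r"^([A-Za-z\- ]+?)\s*:\s*(\d+)\s+(\d+)\s+(?:pps|bps)$")


def parse_queue_counters(output: str) -> Dict[Tuple[str, str], Tuple[int, int]]:
    """Map (section, counter) -> (total, rate) from 'show interfaces queue' text."""
    counters = {}
    section = None
    for raw in output.splitlines():
        line = raw.strip()
        if line.endswith(":") and ":" not in line[:-1]:
            section = line[:-1]  # e.g. "Queued" or "Transmitted"
            continue
        m = COUNTER_RE.match(line)
        if m and section:
            name, total, rate = m.groups()
            counters[(section, name)] = (int(total), int(rate))
    return counters


sample = """\
Queue: 7, Forwarding classes: dcn
  Queued:
    Packets              :                     0                     0 pps
  Transmitted:
    Packets              :                     0                     0 pps
    Tail-dropped packets :                     0                     0 pps
"""
stats = parse_queue_counters(sample)
print(stats[("Transmitted", "Packets")])  # → (0, 0)
```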

Conclusion:

Maintaining consistent performance and synchronized operations is critical in AI Ethernet fabrics, especially as workloads scale across distributed GPU clusters. DCN addresses a key gap by providing real-time visibility into packet drops during severe congestion. By notifying endpoints of exactly which packets were dropped, DCN enables faster recovery, minimizes hidden delays, and helps keep AI job completion times low.

Ultimately, DCN bridges the visibility gap between the network fabric and AI workloads, making it a vital capability for building scalable, high-performance AI infrastructure.

Please refer to the user guide for more information about the AI/ML Data Center features.