Experience-First Networking for 5G

5G and Open RAN bring new challenges for communication service providers (CSPs) as new x-haul architectures emerge and more cell sites are required to maintain coverage and provide seamless service to users. New 5G-only sites will likely be a mix of centralized RAN (C-RAN) and distributed RAN (D-RAN) topologies. C-RAN sites are typically large sites owned by the carrier, much like 4G sites today. D-RAN sites, on the other hand, can number in the tens of thousands and are often small, leased spaces, such as cabinets underneath cell towers. Using small, leased spaces keeps costs low for a CSP and maintains business flexibility, but not without constraints. Typically, a D-RAN site has limited power, space and cooling, so all the compute and routing functions must be combined in a single 1U or 2U server. Furthermore, connectivity back to the mobile core is often limited to leased lines. To operate within these constraints, CSPs are taking novel approaches in the D-RAN, and Juniper Networks has developed a cloud-native router for these situations.

Cloud-Native Routing

In the cloud-native approach to software, functional blocks are decomposed into microservices, which are deployed as containers on x86 or ARM platforms and orchestrated by Kubernetes (K8s). This approach applies to 5G core control plane functions such as the AMF and SMF; to RAN control plane functions such as the central unit control plane (CU-CP), the Service Management and Orchestration (SMO) framework, and the near-real-time and non-real-time RAN Intelligent Controllers (RICs); and to some data plane functions, such as the central unit data plane (CU-DP) and the DU.

K8s networking is provided by container network interface (CNI) plug-ins. However, the networking capabilities of typical CNIs are rather rudimentary and not fit for purpose when the network functions they serve play pivotal roles within a telco network. This is where Juniper Networks’ cloud-native router (J-CNR) shines. J-CNR is a containerized router that enables an x86 or ARM-based host to become a first-class member of the network routing system, participating in protocols such as IS-IS and BGP and providing MPLS/SR-based transport and multi-tenancy. In other words, rather than being just an appendage to the network, like a customer edge (CE) router, the general compute platform can fulfil the role of a provider edge (PE) router. When deployed at a cell site, this solution reduces CapEx by reducing the number of boxes needed. It also reduces OpEx through corresponding reductions in power consumption, space utilization and operational complexity.

Cloud-Native Routers vs. Conventional Routers

Every router has a control plane and a forwarding plane. The control plane participates in dynamic routing protocols and exchanges routing information with other routers in the network. It downloads the results into a forwarding plane in the form of prefixes, next-hops and associated SR/MPLS labels. In Juniper’s routers, including J-CNR, the control plane is agnostic to the exact details of how the forwarding plane is implemented. In traditional hardware routers, such as the Juniper MX Series routers, the forwarding plane is based on a custom ASIC. Although the J-CNR forwarding plane is based on different constructs, the routing protocol software, known as Routing Protocol Daemon (RPD) in Junos, is identical in both cases. Therefore, J-CNR benefits from the same highly comprehensive and robust protocol implementation as our hardware-based routers that underpin some of the world’s largest and most important networks. As a result, J-CNR delivers high-performance WAN networking in a small footprint software package without compromising on features.
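The separation between control plane and forwarding plane can be illustrated with a small Python sketch. This is not Juniper's code: the class and method names (`ForwardingPlane`, `install`, `on_route_learned`) are hypothetical, and the two forwarding planes are in-memory stand-ins for an ASIC table and a DPDK data path. The point is only that the same control-plane logic downloads prefixes, next-hops and SR/MPLS labels through one interface, whatever sits behind it.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class RouteEntry:
    """One forwarding entry as downloaded by the control plane."""
    prefix: str
    next_hop: str
    labels: tuple[int, ...] = ()  # SR/MPLS label stack, outermost label first


class ForwardingPlane(ABC):
    """Hypothetical interface that the control plane programs against."""

    @abstractmethod
    def install(self, entry: RouteEntry) -> None: ...

    @abstractmethod
    def remove(self, prefix: str) -> None: ...


class AsicForwardingPlane(ForwardingPlane):
    """In-memory stand-in for a hardware (ASIC) forwarding table."""

    def __init__(self) -> None:
        self.table: dict[str, RouteEntry] = {}

    def install(self, entry: RouteEntry) -> None:
        self.table[entry.prefix] = entry

    def remove(self, prefix: str) -> None:
        self.table.pop(prefix, None)


class DpdkForwardingPlane(ForwardingPlane):
    """In-memory stand-in for a software (for example, DPDK) forwarding table."""

    def __init__(self) -> None:
        self.fib: list[RouteEntry] = []

    def install(self, entry: RouteEntry) -> None:
        self.remove(entry.prefix)  # replace any existing entry for this prefix
        self.fib.append(entry)

    def remove(self, prefix: str) -> None:
        self.fib = [e for e in self.fib if e.prefix != prefix]


class ControlPlane:
    """Downloads routing results without knowing how the FIB is implemented."""

    def __init__(self, forwarding_plane: ForwardingPlane) -> None:
        self.fp = forwarding_plane

    def on_route_learned(self, prefix: str, next_hop: str, labels=()) -> None:
        self.fp.install(RouteEntry(prefix, next_hop, tuple(labels)))

    def on_route_withdrawn(self, prefix: str) -> None:
        self.fp.remove(prefix)
```

Swapping the forwarding plane only changes which object is handed to `ControlPlane`; the route-handling side stays the same, mirroring the property described above.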

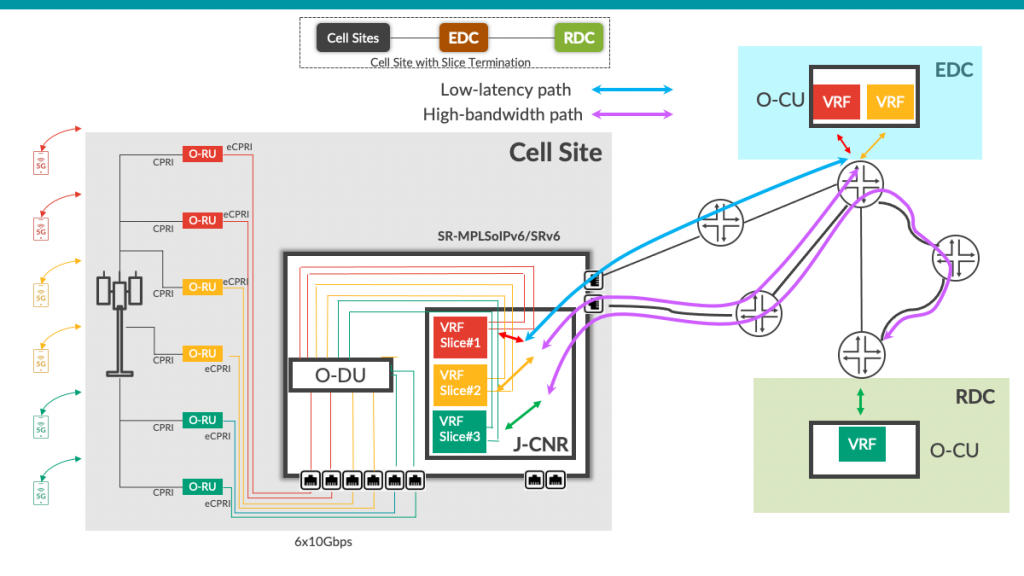

Juniper Cloud-Native Router within O-RAN

J-CNR is designed for D-RAN, or single-split, architectures in which the RU and DU are co-located and connected to the CU through a relatively low-bandwidth midhaul link. Most 5G/O-RAN D-RAN deployments evolve from an existing single cell site of the previous generation and then extend to multiple smaller sites to achieve denser coverage, higher bandwidth and lower latency.

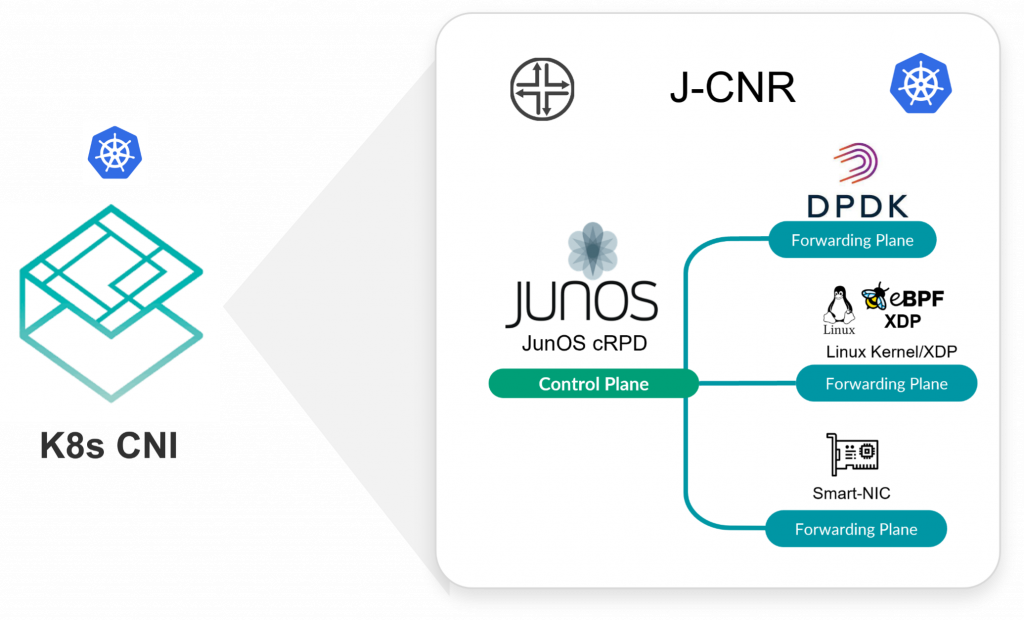

As illustrated in Figure 1 above, J-CNR uses a Junos containerized Routing Protocol Daemon (cRPD) control plane and a forwarding plane implemented through DPDK, the Linux kernel or a SmartNIC. The complete integration delivers a K8s CNI-compliant package, deployable within a Multus-enabled K8s environment. The DU and the J-CNR can co-exist on the same 1U x86 or ARM-based host. This is particularly attractive for sites with limited power and space, because it replaces a two-box solution (a separate DU and router) with a single box.
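In a Multus-enabled cluster, a workload such as the DU asks for extra interfaces by listing NetworkAttachmentDefinition names in a pod annotation. The sketch below builds those objects as Python dicts. The annotation key `k8s.v1.cni.cncf.io/networks` and the `k8s.cni.cncf.io/v1` NetworkAttachmentDefinition kind are standard Multus; the CNI `type` value `"jcnr"`, the network names and the image are hypothetical placeholders, since the post does not show J-CNR's actual CNI configuration.

```python
from __future__ import annotations

import json

# Annotation key that Multus reads to attach additional networks to a pod.
MULTUS_NETWORKS_ANNOTATION = "k8s.v1.cni.cncf.io/networks"


def network_attachment(name: str, cni_type: str) -> dict:
    """Build a Multus NetworkAttachmentDefinition as a plain dict."""
    cni_config = {"cniVersion": "0.4.0", "name": name, "type": cni_type}
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        # Multus expects the CNI configuration as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


def du_pod(name: str, image: str, networks: list[str]) -> dict:
    """Build a pod spec that requests one extra interface per listed network."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "annotations": {MULTUS_NETWORKS_ANNOTATION: ",".join(networks)},
        },
        "spec": {"containers": [{"name": name, "image": image}]},
    }
```

For example, `network_attachment("f1-slice-a", "jcnr")` plus `du_pod("o-du", "registry.example/o-du:1.0", ["f1-slice-a", "f1-slice-b"])` describes a DU with two additional interfaces, one per slice; serializing them with `json.dumps` or a YAML library yields manifests that could be applied with `kubectl`.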

J-CNR Architecture

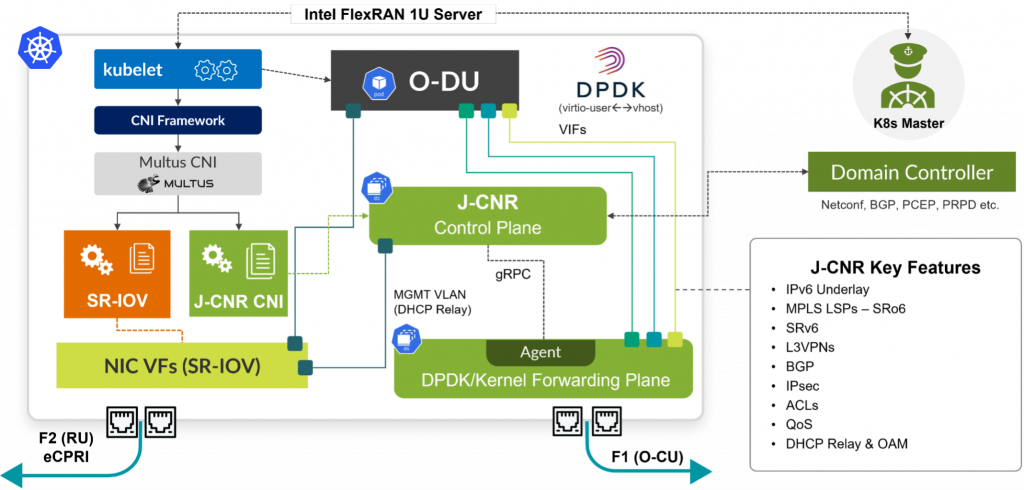

Figure 2 shows the details of the J-CNR architecture. The cell site server is a K8s worker node, and the functional blocks in green are provided by Juniper Networks. The O-DU requires multiple network interfaces, which are provided through the Multus meta-CNI. Each of these interfaces can be mapped into a different Layer 3 VPN on the J-CNR to support multiple network slices. The J-CNR CNI dynamically adds or deletes interfaces between the pod and the J-CNR forwarding plane when triggered by K8s resource creation and deletion events. It also dynamically updates the J-CNR control plane (the cRPD container) with host routes for each pod interface and the corresponding Layer 3 VPN mappings, in the form of route distinguishers and route targets. cRPD then programs the J-CNR forwarding plane accordingly via a gRPC interface. This places the cloud-native router in the data path, where it carries the F1 interfaces to the CUs running in edge or regional DC sites. Although a single O-DU workload is shown in Figure 2 above, in practice multiple O-DUs, or other workloads, can be attached to the same cloud-native router.
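The CNI workflow above (interface added, host route and VRF mapping pushed to the control plane) can be sketched as a small event handler. Everything here is hypothetical: the class and method names, and the convention of deriving the route distinguisher as `<router-id>:<slice-id>` and the route target as `target:<ASN>:<slice-id>` from an operator-assigned slice number. The sketch assumes slice numbers are assigned network-wide so that all PEs agree on each slice's route target, while the router ID keeps each PE's route distinguisher unique.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from ipaddress import ip_address


@dataclass
class VrfMapping:
    """Per-slice Layer 3 VPN mapping pushed to the control plane."""
    name: str
    route_distinguisher: str  # unique per PE: <router-id>:<slice-id>
    route_target: str         # shared by all PEs serving the slice
    host_routes: set[str] = field(default_factory=set)


class CniEventHandler:
    """Hypothetical sketch of CNI add/delete handling for per-slice VRFs."""

    def __init__(self, router_id: str, asn: int, slice_ids: dict[str, int]) -> None:
        self.router_id = router_id
        self.asn = asn
        self.slice_ids = slice_ids  # assumed operator-assigned, network-wide numbers
        self.vrfs: dict[str, VrfMapping] = {}

    def _vrf_for(self, slice_name: str) -> VrfMapping:
        # Create the VRF mapping the first time a slice gets a pod interface.
        if slice_name not in self.vrfs:
            sid = self.slice_ids[slice_name]
            self.vrfs[slice_name] = VrfMapping(
                name=slice_name,
                route_distinguisher=f"{self.router_id}:{sid}",
                route_target=f"target:{self.asn}:{sid}",
            )
        return self.vrfs[slice_name]

    @staticmethod
    def _host_route(pod_ip: str) -> str:
        addr = ip_address(pod_ip)
        return f"{addr}/{addr.max_prefixlen}"  # /32 for IPv4, /128 for IPv6

    def pod_interface_added(self, slice_name: str, pod_ip: str) -> str:
        route = self._host_route(pod_ip)
        self._vrf_for(slice_name).host_routes.add(route)
        return route

    def pod_interface_deleted(self, slice_name: str, pod_ip: str) -> None:
        vrf = self.vrfs.get(slice_name)
        if vrf is not None:
            vrf.host_routes.discard(self._host_route(pod_ip))
```

In a real deployment the resulting mapping would be handed to cRPD rather than kept in a Python dict; that hand-off is outside the scope of this sketch.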

Revolutionizing Network Operations

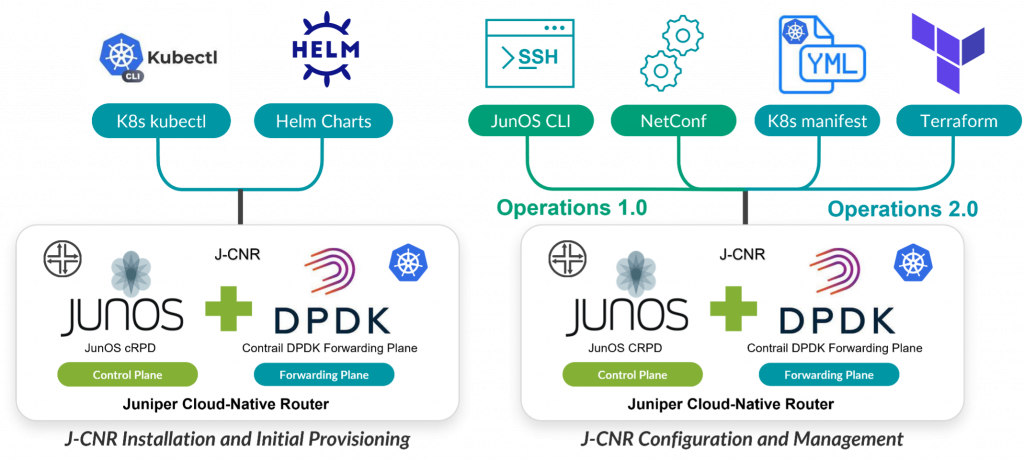

Because J-CNR is a cloud-native application, it supports installation using K8s manifests or Helm charts. These include the initial configuration of the router, including routing protocols and the Layer 3 VPNs that support slices. J-CNR spins up in a matter of seconds, with all its routing protocol adjacencies to the rest of the network up and running. As illustrated in Figure 3 above, ongoing configuration changes during the lifetime of the J-CNR (for example, adding or removing network slices) are made via a choice of Junos CLI, K8s manifests, NETCONF or Terraform.
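Adding or removing a slice through the Junos CLI comes down to creating or deleting a VRF routing instance. The sketch below renders those `set` and `delete` commands from slice data. The statements used (`routing-instances`, `instance-type vrf`, `interface`, `route-distinguisher`, `vrf-target`) are standard Junos configuration; the slice name, interface name and RD/RT values in the usage are hypothetical, and a complete slice would typically also need protocol and policy configuration that is omitted here.

```python
from __future__ import annotations


def slice_set_commands(name: str, interfaces: list[str], rd: str, rt: str) -> list[str]:
    """Render Junos 'set' commands that create one slice as a VRF."""
    base = f"set routing-instances {name}"
    cmds = [f"{base} instance-type vrf"]
    cmds += [f"{base} interface {ifname}" for ifname in interfaces]
    cmds.append(f"{base} route-distinguisher {rd}")
    cmds.append(f"{base} vrf-target {rt}")
    return cmds


def slice_delete_commands(name: str) -> list[str]:
    """Render the command that removes the slice's VRF and everything under it."""
    return [f"delete routing-instances {name}"]
```

For example, `slice_set_commands("URLLC", ["ens1f0v1"], "192.0.2.10:2", "target:64512:2")` yields four `set` lines that could be entered in configuration mode and committed. Keeping slice definitions as data makes it straightforward to emit the same intent as CLI, a K8s manifest or Terraform input.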

Using a containerized appliance instead of its physical counterpart would normally incur some operational overhead, but J-CNR mitigates this by using a K8s CNI and the Operator Framework. By exposing the appropriate device interfaces, J-CNR aligns the operational model of the virtual appliance with that of the physical appliance, easing adoption within the operator’s network operations environment. J-CNR also provides the same features, capabilities and operational model as a hardware-based platform, so its look and feel are familiar to existing Juniper-trained operations teams. Likewise, a domain controller can use the same protocols it uses with any other Junos router to communicate with and control J-CNR, including NETCONF/OpenConfig, gRPC, PCEP and the Programmable Routing Protocol Daemon (pRPD) APIs.

Role of the Node Within the Routing System

Within the network routing system, the node participates in IS-IS or OSPF. In addition, MPLS is used, often based on Segment Routing (SR), for two reasons: to allow traffic engineering (if needed) and to provide MPLS-based Layer 3 VPNs. Alternatively, SRv6 could be used to fulfil these requirements. A comprehensive routing capability is also necessary to implement network slicing. Each slice is placed into its own Layer 3 VPN, and J-CNR acts as a PE from the Layer 3 VPN point of view. It therefore exchanges Layer 3 VPN prefixes via BGP with other PE routers in the network, regardless of whether those PEs are physical routers or cloud-native routers residing on other hosts. Each slice is placed in a separate Virtual Routing and Forwarding (VRF) table on each PE, giving the proper degree of isolation and security between slices, just like a conventional Layer 3 VPN service. This fills a common gap, because K8s does not natively provide such isolation.
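The isolation between slices comes from how a PE decides which VRF a received Layer 3 VPN route belongs in: the route distinguisher keeps overlapping prefixes distinct in BGP, and the route targets decide which VRFs import the route. The simplified Python model below shows that import decision only. It is an illustration of the general BGP/MPLS VPN mechanism, not J-CNR code, and it ignores labels, path selection and export policy; all names and values are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from ipaddress import ip_network


@dataclass(frozen=True)
class VpnRoute:
    """A Layer 3 VPN route as received over BGP (simplified)."""
    rd: str                        # keeps overlapping prefixes distinct in BGP
    prefix: str
    route_targets: frozenset[str]  # decide which VRFs may import the route
    next_hop: str


class Vrf:
    """One slice's VRF table on a PE."""

    def __init__(self, name: str, import_targets: set[str]) -> None:
        self.name = name
        self.import_targets = set(import_targets)
        self.table: dict = {}

    def maybe_import(self, route: VpnRoute) -> bool:
        # Import only if at least one route target matches this VRF's import policy.
        if self.import_targets & route.route_targets:
            self.table[ip_network(route.prefix)] = route.next_hop
            return True
        return False


def receive(vrfs: list[Vrf], route: VpnRoute) -> list[str]:
    """Offer a received VPN route to every local VRF; return the VRFs that imported it."""
    return [vrf.name for vrf in vrfs if vrf.maybe_import(route)]
```

Two slices can then use the same prefix, say `10.1.0.0/24`, without conflict: a route carrying `target:64512:1` lands only in the VRF importing that target, and one carrying `target:64512:2` lands only in the other.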

Usually, a transport network offers a variety of paths, each tuned to a particular cost function, such as minimum latency or high bandwidth. These are implemented using SR flex-algo, RSVP or SR-based traffic engineering. When traffic engineering is used, paths can be computed by Juniper’s Paragon Pathfinder controller and communicated to the J-CNR via PCEP. When Pathfinder detects congestion in the network via streaming telemetry, it automatically recomputes the affected paths to ease the congestion. PE routers, including J-CNR, apply tags (BGP color communities) to the prefixes in a given VRF according to the type of path that the corresponding slice needs. For example, in Figure 4 below, the red slice needs the lowest latency transport possible, so it’s mapped to a blue low-latency path to reach the O-CU in Edge Data Center (EDC). The yellow and green slices need high bandwidth, so their traffic is mapped to the purple high-bandwidth paths. In actual deployments, in which there will be many more slices than shown in the diagram, the mapping of slices to a transport path will normally be many-to-one. For example, all of the slices that need low-latency transport between a given pair of endpoints share the same low-latency traffic-engineered or flex-algo path that connects those two endpoints.
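Color-based steering, as described above, can be modeled as a lookup keyed on (endpoint, color): each slice's prefixes carry a color, and every slice with the same color toward the same endpoint resolves to the same path. This resembles how SR Policies are identified by color and endpoint (RFC 9256), but the data structures, color values and path names below are hypothetical, and whether J-CNR resolves colors exactly this way is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical color values; operators choose their own numbering.
LOW_LATENCY = 100
HIGH_BANDWIDTH = 200


@dataclass(frozen=True)
class TransportPath:
    """A transport path toward an endpoint, tuned for one cost function."""
    name: str
    endpoint: str
    color: int


def build_path_table(paths: list[TransportPath]) -> dict[tuple[str, int], TransportPath]:
    """Index the available paths by (endpoint, color)."""
    return {(p.endpoint, p.color): p for p in paths}


def steer(slice_colors: dict[str, int], endpoint: str,
          path_table: dict[tuple[str, int], TransportPath]) -> dict[str, TransportPath]:
    """Resolve each slice's colored prefixes to a path; raises KeyError if none exists."""
    return {s: path_table[(endpoint, color)] for s, color in slice_colors.items()}
```

Using the example slices, mapping `{"red": LOW_LATENCY, "yellow": HIGH_BANDWIDTH, "green": HIGH_BANDWIDTH}` toward the O-CU endpoint returns one low-latency path for red and a single shared high-bandwidth path for yellow and green, which is the many-to-one mapping described above. A production implementation would also need a fallback (for example, best-effort routing) when no path exists for a color.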

Cloudifying Telco Networks for 5G and Beyond

Juniper’s Cloud-Native Router brings the full spectrum of world-class Junos routing capabilities to compute platforms that host containerized network functions. This allows the platform to fully participate in the operator’s network routing system and facilitate multi-tenancy and network slicing. J-CNR also has a familiar look-and-feel and provides a consistent operational experience with the same control plane interfaces as a Junos hardware-based router. Network operators gain the transformational benefits of a cloud-native networking model while maintaining operational consistency with the existing infrastructure, which means lower OpEx, faster speed of business and carrier-grade reliability.

Learn More

Visit Juniper Networks at Mobile World Congress 2022 Barcelona, February 28 – March 3, 2022

For additional information, check out this episode of the Get Connected podcast with Chris Lewis, where Juniper’s Philip Goddard, Director of Product Management – Software Transformation, shares what goes into building a cloud-native Juniper router. Also read how cloud-native routers are transforming the economics of distributed networks in this blog by Mathias Kokot, VP, PLM Software at Juniper Networks.