5G technology offers the potential for ubiquitous connectivity and high-bandwidth capabilities, unlocking a plethora of new applications that were previously unattainable on older network generations.

Nevertheless, the wireless spectrum used for these advanced applications has limited range, necessitating the deployment of smaller cell sites and positioning of radio equipment closer to user devices. This results in network operators needing to expand their cell site infrastructure, leading to significant increases in capital and operational expenditures.

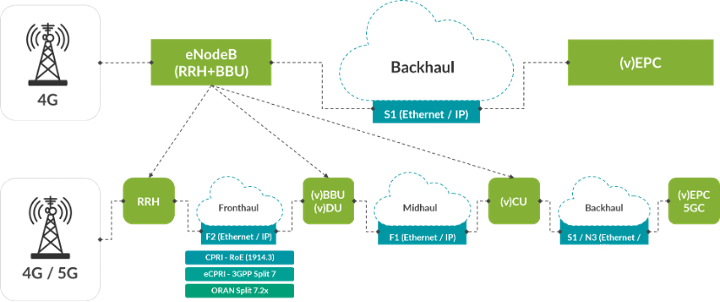

The traditional centralized RAN approach becomes impractical in this scenario, which calls for a disaggregated strategy that offers improved cost efficiency. Under this approach, all of the 5G functions, starting from the distributed unit (DU), are implemented as virtual functions or, more recently, as containerized functions deployed using Kubernetes. Only the necessary DU functionality is retained at the cell site, while the centralized unit (CU) and user plane function (UPF) are relocated to a centralized location such as a data center. However, latency considerations dictate that the CU functions must stay within a certain distance of the cell site, typically around 80 kilometers (with a target latency of 1 millisecond). This requirement prompts operators to establish smaller edge data centers to host these CU functions.
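As a rough sanity check on the 80 km figure, the sketch below estimates propagation delay over fiber. It assumes light travels through fiber at about 200,000 km/s (roughly 5 microseconds per kilometer) and ignores switching, queuing and processing delays. The text does not say whether the 1 millisecond target is one-way or round-trip, so both are printed.

```python
# Rough propagation-delay estimate for a cell site to CU link over fiber.
# Assumption: signal speed in fiber is about 200,000 km/s (about 5 us per km).
FIBER_SPEED_KM_PER_S = 200_000.0


def propagation_delay_ms(distance_km: float) -> float:
    """Return the one-way propagation delay in milliseconds."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000.0


def max_distance_km(budget_ms: float) -> float:
    """Return the distance covered if the whole budget is spent on propagation."""
    return budget_ms / 1000.0 * FIBER_SPEED_KM_PER_S


one_way_ms = propagation_delay_ms(80)
round_trip_ms = 2 * one_way_ms
print(f"80 km: {one_way_ms:.2f} ms one way, {round_trip_ms:.2f} ms round trip")
```

Under these assumptions, an 80 km link uses 0.4 ms one way or 0.8 ms round trip, which leaves some headroom inside a 1 ms target for everything other than propagation.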

Instead of building out private edge data centers, operators are opting for public cloud services due to their cost effectiveness and ability to auto-scale the functions based on network demand. Further, mobile network operators are expanding their existing infrastructure to accommodate the 5G packet core in the public cloud. To enable this, core functions like unified data management (UDM), the network slice selection function (NSSF) and the network repository function (NRF) must be containerized and moved into the public cloud. With slicing functions offering slices based on factors like functionality, performance, user equipment (UE) groups and more, these cloud-native network functions (CNFs) need to be scaled up or down elastically and dynamically using load balancer services. Service providers can also take advantage of the inherent redundancy capabilities provided by the public cloud and distribute their functions across different geographical zones in an active-standby mode. They expect seamless connectivity, low latency and high throughput from their on-premises data center to the core functions running in the cloud, including seamless switchover to a different geographical zone in case of natural disasters or failures. Traditional public cloud offerings simply don’t provide the network services required to support 5G.

The lack of a suitable software router that can be deployed in the cloud is one of the main challenges preventing the deployment of 5G in public cloud environments. A telco-grade router is needed to provide seamless connectivity between 5G workloads in the cloud and the Radio Access Network (RAN) and 5G mobile core functions. This telco-grade router must offer rich functionality, such as BGP, overlays like EVPN-VXLAN, advanced routing policies, dynamically signaled tunnels such as MPLS over GRE, UDP or IP, and equal-cost multipath (ECMP). It should also be cloud-native, meaning it should be auto-scalable, integrate with Kubernetes and have high forwarding performance.

The Juniper Cloud-Native Router – Facilitating 5G Deployments

The Juniper Cloud-Native Router is the industry’s first software router that is purpose-built to target these use cases for cloud-native environments. It combines the containerized routing protocol process (cRPD) and a DPDK-enabled forwarding plane to provide the performance, scalability and flexibility needed for 5G deployments in cell-site, edge and public cloud environments.

Juniper Cloud-Native Router for Virtual Private Clouds (VPCs)

The Juniper Cloud-Native Router solves this problem by meeting both service providers’ and cloud providers’ needs. It’s built upon Juniper’s proven Junos OS routing capabilities to augment public cloud networking and deliver the required functionality while integrating seamlessly into the public cloud infrastructure. It uses the industry-leading Contrail DPDK vRouter as its data plane to increase the number of packets processed per second (PPS) and deliver the required packet performance in cloud environments, providing the best of both worlds.

Within AWS and other public clouds, service providers use virtual private clouds (VPCs). These are virtual networks similar to traditional networks operated by service providers in their data centers. VPCs can have one or more subnets, each with its own range of IP addresses and different types of gateways to connect them to other networks. For example, an internet gateway connects a VPC to the internet, a NAT gateway connects a private subnet to the internet using network address translation and a transit gateway is a network transit hub used to interconnect VPCs and on-prem networks. In AWS, each subnet can be created in an availability zone (AZ). AZs are distinct locations within an AWS region that are engineered to be isolated from failures in other AZs. They provide inexpensive, low-latency network connectivity to other AZs in the same AWS region.
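To make the subnet layout concrete, here is a minimal sketch that carves one subnet per AZ out of a VPC address range using Python’s standard `ipaddress` module. The CIDR block, the /24 subnet size and the AZ names are hypothetical values chosen for illustration, not values from this deployment.

```python
import ipaddress

# Hypothetical values: the VPC range and AZ names are assumptions for illustration.
vpc_cidr = ipaddress.ip_network("10.20.0.0/16")
availability_zones = ["us-east-1a", "us-east-1b"]

# Assign one /24 subnet per AZ, taken in order from the VPC range.
subnets = dict(zip(availability_zones, vpc_cidr.subnets(new_prefix=24)))

for az, subnet in subnets.items():
    print(f"{az}: {subnet} ({subnet.num_addresses} addresses)")
```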

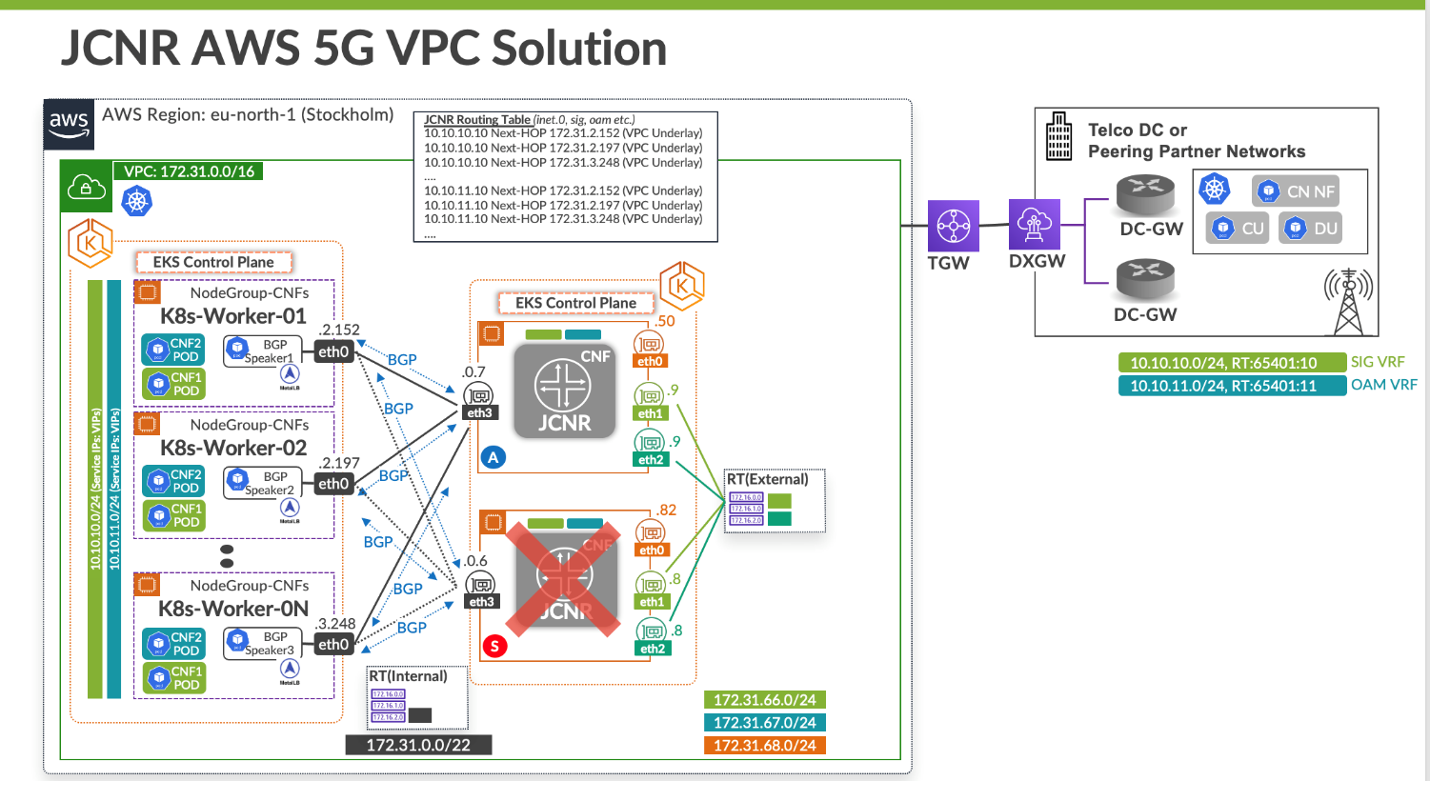

The Juniper Cloud-Native Router can extend the 5G core infrastructure running on-premises to AWS VPCs. Here, different services like NSSF, NRF and UDM, each with its own service IP address, are deployed as pods in an Amazon Elastic Kubernetes Service (EKS) cluster. These services are deployed in a scale-out fashion, i.e., pods for each service are replicated across multiple nodes in the EKS cluster. While each pod has one or more unique pod IP addresses, a common service address (or service VIP) is shared by all pods of a given service. For operational and security reasons, traffic for each service to and from the on-premises network must be separated and delivered using corresponding routing contexts. To achieve this, each service is mapped to a virtual routing and forwarding (VRF) instance (e.g., a secure internet gateway (SIG) VRF or an operations, administration and management (OAM) VRF) on the Juniper Cloud-Native Router, which provides seamless communication with the service’s counterparts, like CUs running on-premises, with full isolation. The Cloud-Native Router is deployed in a separate EKS cluster, connected to the 5G CNFs through a shared VPC. For redundancy, additional instances of the Cloud-Native Router can be deployed in active/standby mode. The service IP addresses are EKS cluster endpoints accessible only inside the VPC. To make the service IP prefixes reachable from the on-premises network and vice versa, nodes running 5G CNF workloads establish a Border Gateway Protocol (BGP) session with the Cloud-Native Router and advertise the service prefixes (VIPs).

The BGP implementations on these nodes are lightweight and may lack the capability to process incoming BGP advertisements and update the forwarding state on the node. In some scenarios, such as UPF, the BGP session is also used to advertise UE prefixes into the network. The Juniper Cloud-Native Router has a full BGP stack with which it peers with the 5G CNFs, the AWS transit gateway (TGW) and the on-premises edge gateway running in the service provider data center, exchanging routes in both directions. To attract traffic between the 5G CNFs in the cloud and the on-premises CNFs through the Cloud-Native Router, it uses AWS VPC APIs to program the internal and external VPC routing tables. This is required because VPCs may not have routes for destinations in the on-premises network. Another unique capability that the Juniper Cloud-Native Router brings is BGP auto-configuration, where the sessions are automatically set up when a new CNF workload is orchestrated. This topology is replicated in another AZ to provide active-standby redundancy in case of failure.
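The text does not name the specific AWS calls involved, so the following is only a sketch of the general idea: for each prefix learned over BGP, install a VPC route whose target is the router’s network interface. It assumes the EC2 `CreateRoute` operation as exposed by a boto3 EC2 client (`create_route` with `RouteTableId`, `DestinationCidrBlock` and `NetworkInterfaceId`). The route table ID and ENI ID are placeholders, and a small recording stub stands in for the real client so the example runs without AWS credentials.

```python
from typing import Iterable, List


class RecordingEc2Client:
    """Stand-in for a boto3 EC2 client; it only records create_route calls."""

    def __init__(self) -> None:
        self.calls: List[dict] = []

    def create_route(self, **kwargs) -> dict:
        self.calls.append(kwargs)
        return {"Return": True}


def program_vpc_routes(ec2, route_table_id: str, router_eni_id: str,
                       prefixes: Iterable[str]) -> None:
    """Point each BGP-learned prefix at the router's network interface."""
    for prefix in prefixes:
        ec2.create_route(
            RouteTableId=route_table_id,
            DestinationCidrBlock=prefix,
            NetworkInterfaceId=router_eni_id,
        )


# Placeholder identifiers; real IDs come from the VPC being programmed.
client = RecordingEc2Client()
program_vpc_routes(client, "rtb-EXAMPLE", "eni-EXAMPLE",
                   ["10.10.10.10/32", "10.10.11.10/32"])
for call in client.calls:
    print(call["DestinationCidrBlock"], "->", call["NetworkInterfaceId"])
```

With a real client, the same function could be passed `boto3.client("ec2")`. Handling of routes that already exist, or that must be withdrawn when a BGP advertisement goes away, is left out of this sketch.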

The above diagram illustrates the AWS VPC solution in greater detail. The K8s workers on the left are part of the EKS cluster hosting the 5G Core CNFs like NSSF, UDM, cloud core resource controller (CCRC), etc. Each of these has its own VIP and must be in a different VRF, with traffic isolation between them. In this case, there is a VIP for the OAM VRF (10.10.10.10/32) and another VIP for the SIG VRF (10.10.11.10/32). Each worker also has a lightweight BGP speaker to peer with the Cloud-Native Router and advertise the VIPs. There’s another EKS cluster for the Juniper Cloud-Native Router with an auto-scale size of 2, where one instance is in active mode and the other is on standby for redundancy. The Cloud-Native Routers are placed in different AZs to provide geographic redundancy as well. They peer with the transit gateway (TGW) on the right to connect to the on-premises telco data center where the CUs and DUs are present.
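The isolation between VRFs can be pictured as separate routing tables, each with its own longest-prefix-match lookup. The sketch below models that idea in plain Python using the two VIPs from the diagram. The next-hop names are hypothetical, and the one-way leak into a global table is a simplification for illustration, not the router’s actual configuration or policy.

```python
import ipaddress
from typing import Dict, Optional, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


class Vrf:
    """A named routing table with longest-prefix-match lookup."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.routes: Dict[Network, str] = {}

    def add_route(self, prefix: str, next_hop: str) -> None:
        self.routes[ipaddress.ip_network(prefix)] = next_hop

    def lookup(self, address: str) -> Optional[str]:
        addr = ipaddress.ip_address(address)
        matches = [net for net in self.routes if addr in net]
        if not matches:
            return None
        return self.routes[max(matches, key=lambda net: net.prefixlen)]


def leak_routes(source: Vrf, target: Vrf) -> None:
    """Copy every route from source into target (a one-way leak)."""
    target.routes.update(source.routes)


# VIPs from the diagram; the worker names are hypothetical next hops.
oam = Vrf("OAM")
oam.add_route("10.10.10.10/32", "k8s-worker-1")
sig = Vrf("SIG")
sig.add_route("10.10.11.10/32", "k8s-worker-2")

# Each VRF only knows its own VIP, so the two services stay isolated.
print(oam.lookup("10.10.10.10"), oam.lookup("10.10.11.10"))

# Leaking both VRFs into a global table makes both VIPs reachable there.
global_table = Vrf("inet.0")
leak_routes(oam, global_table)
leak_routes(sig, global_table)
print(global_table.lookup("10.10.10.10"), global_table.lookup("10.10.11.10"))
```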

Juniper Cloud-Native Router Capabilities

To create this solution, the following capabilities of the Juniper Cloud-Native Router are leveraged:

- Multiple interfaces and transit routing: The Juniper Cloud-Native Router supports multiple interfaces (up to 8) and can route traffic between them. The eth3 interface in both routers is used to send traffic, including HTTP and HTTPS, toward the K8s workers for all of the VIPs. This interface is in the global VRF (inet.0). Interfaces eth1 and eth2, on the other hand, are in the non-global SIG and OAM VRFs, respectively. The eth0 interface is the Amazon primary CNI interface.

- BGP auto-configuration: No new configuration is needed when new BGP speakers come up, as is common in cloud environments.

- Bidirectional Forwarding Detection (BFD): BFD is used to detect failures affecting 5G workloads in under a second and re-route traffic quickly.

- VRF-lite: Virtual routing domains with route leaking are configured to route traffic across different VRFs seamlessly. The prefixes in the SIG VRF and OAM VRF are leaked into the global VRF (inet.0), where the eth3 interface resides.

- Equal Cost Multipath (ECMP): Traffic is load-balanced across the K8s workers, and workers can be added or removed on the fly without impacting existing flows. This property is called flow affinity or flow stickiness.

- Juniper Extension Toolkit (JET) API: JET VPC APIs are used to populate the external and internal VPC routing tables from BGP advertisements, attracting traffic from the Amazon VPC toward the Juniper Cloud-Native Router.

- Asymmetric routing: For optimization and cost savings, some flows can pass through the Cloud-Native Router on their way to the K8s workers while the reverse flows are routed directly by the Amazon VPC.

- Redundancy: Protocols such as VRRP, running over unicast, are used to support active-standby switchover, since most public clouds do not support multicast or broadcast.

- MPLS over UDP (MPLSoUDP) Tunnels: In some cases, the Juniper Cloud-Native Router must tunnel the traffic toward an on-premises DC gateway using MPLSoUDP encapsulation. L3VPN routing instances are used in such scenarios and dynamic UDP tunnels are signaled over BGP.

- More control plane features: EVPN-VXLAN will soon be supported for cases where the on-premises PE router does not support MPLSoUDP.
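The flow stickiness described under ECMP above can be illustrated with a small model. This is not the Juniper Cloud-Native Router’s implementation, which the text does not describe. It is a sketch of one common way to get the behavior: hash new flows across the current next hops and pin each flow in a flow table, so that membership changes only affect flows whose next hop was removed. The worker names and flow tuples are hypothetical.

```python
import hashlib
from typing import Dict, Iterable, List, Tuple

# Source IP, destination IP, protocol, source port, destination port.
FlowKey = Tuple[str, str, int, int, int]


def flow_hash(flow: FlowKey) -> int:
    """Deterministic hash of the flow 5-tuple."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return int.from_bytes(digest[:8], "big")


class StickyEcmp:
    """ECMP next-hop selection with a flow table that pins existing flows."""

    def __init__(self, next_hops: Iterable[str]) -> None:
        self.next_hops: List[str] = list(next_hops)
        self.flow_table: Dict[FlowKey, str] = {}

    def add(self, hop: str) -> None:
        if hop not in self.next_hops:
            self.next_hops.append(hop)

    def remove(self, hop: str) -> None:
        self.next_hops.remove(hop)
        # Evict only flows pinned to the removed hop; they re-hash on next use.
        self.flow_table = {f: h for f, h in self.flow_table.items() if h != hop}

    def forward(self, flow: FlowKey) -> str:
        hop = self.flow_table.get(flow)
        if hop is None:
            hop = self.next_hops[flow_hash(flow) % len(self.next_hops)]
            self.flow_table[flow] = hop
        return hop


ecmp = StickyEcmp(["worker-1", "worker-2", "worker-3"])
flows = [("192.0.2.10", "10.10.10.10", 6, 40000 + i, 443) for i in range(200)]
before = {f: ecmp.forward(f) for f in flows}

ecmp.add("worker-4")  # existing flows stay where they were
after_add = {f: ecmp.forward(f) for f in flows}

ecmp.remove("worker-2")  # only flows that were on worker-2 move
after_remove = {f: ecmp.forward(f) for f in flows}

moved = sum(1 for f in flows if before[f] != after_remove[f])
print(f"{moved} of {len(flows)} flows moved after removing worker-2")
```

The flow table costs memory per flow, which is the price of keeping existing flows in place when a worker is added. A purely stateless hash would not need the table but would remap some existing flows on every membership change.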

AWS constructs and configurations in this use case:

- 2 x c5.4xlarge (supports up to 8 ENIs)

- Standalone EKS Cluster

- Private Cluster Endpoint

- Self-Managed Node Group

- Amazon VPC CNI

- Amazon Elastic Block Store Container Storage Interface (EBS CSI) driver

- XENI from “VPC-VRF”

- Transit Gateway (TGW)

Performance

The performance topology consists of two Cloud-Native Router m5n.xlarge instances peered using BGP with an MPLSoUDP tunnel between them. A pktgen pod runs in each instance. The combined Tx+Rx PPS for 8 cores is measured and compared with pktgen running natively on the same two instances without the Juniper Cloud-Native Router. As shown in the table below, the Juniper Cloud-Native Router supports line-rate traffic with virtually no impact on performance.

Results:

| Configuration | Rx+Tx (MPPS) |
| --- | --- |
| Without Juniper Cloud-Native Router | 4.48 |
| With Juniper Cloud-Native Router | 4.38 |
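
To put the two figures in perspective, the short calculation below works out the relative difference. It uses only the numbers from the results table.

```python
# Throughput figures from the results table, in millions of packets per second.
baseline_mpps = 4.48      # pktgen running natively
with_router_mpps = 4.38   # pktgen through the Juniper Cloud-Native Router

drop_mpps = baseline_mpps - with_router_mpps
drop_percent = drop_mpps / baseline_mpps * 100

print(f"Throughput reduction: {drop_mpps:.2f} MPPS ({drop_percent:.1f}%)")
```

The difference comes to 0.10 MPPS, or roughly 2.2% of the native throughput.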

Conclusion

The Juniper Cloud-Native Router is a high-performance software router that fulfills the unique and demanding requirements of moving 5G workloads from an on-premises data center to public cloud infrastructure. It can be deployed, orchestrated and configured using cloud-native methodologies, making it well suited to VPC use cases. Check out these additional blogs for more information on the Juniper Cloud-Native Router:

- Cloud-Native Routers: Transforming the Economics of Distributed Networks

- Cloud-Native Routing in 5G Deployments