We recently spoke to some of our Juniper Ambassadors (see Part One here) to better understand the dynamics, drivers and concerns they have encountered while designing and running data center networks and fabrics. Our panel discussion focused on what matters most to their organizations and clients, and although no one size fits all, we uncovered some interesting insights. In this blog, we’ll cover the next set of topics: hiring and training for the talent gap, automation and sharing vs. maintaining control of the infrastructure.

A Shortage of Skills and Time

Hiring, Training and the Talent Gap

If you ask network engineers whether they consider themselves “full-stack,” they may be somewhat coy, but they fundamentally understand far more of the OSI (or TCP/IP) stack than most, including application behaviors. Network engineers may not write full-blown applications themselves, but they increasingly need to understand and practice software development methodologies for automation and orchestration. Some would argue this has always been the case, yet many are new to modern programming paradigms. (Maybe they were a Perl monk or are slowly becoming a Gopher?) Admittedly, simple programming logic has always been table stakes with elements such as route maps, policy inheritance and custom scripting. The demand now is for deeper programming and automation skills related to operations, cloud and security.

Steve notes that when service providers are turning up circuits end-to-end, “that ramp-up of automation skills is important…There’s a lot of training being done for employees,” with many organizations “looking for people with those skills.” Andrew sees mandates from some organizations around upskilling with programming and automation, including a large uptick for cloud-related certifications.

On one hand, there is a desire for even greater customization and malleability; on the other, there’s a growing realization that simplicity requires better abstractions for dealing with accelerating complexity. In data networking, it’s not uncommon for two contradictory ideas to be simultaneously correct. We may well be seeing aspects of deployment simplified while the related operational complexity moves elsewhere, thus fulfilling the Sixth Networking Truth (RFC 1925): it is easier to move a problem around than it is to solve it. The real test is then how our workflows, automation and systems perform in the face of unexpected input and novel failure modes.

As Jonas notes, “There is a shortage of skills in networking people with automation awareness,” though for the most part, in smaller environments, the need for automation is low and revolves more around the initial provisioning of VLANs. For these smaller customers, there tends to be less network segmentation and a slower cadence of change. Overall, though, he sees a need for better training, as “Current education or certification programs are very siloed, so either you get a lot of knowledge about the programming part and you don’t get any network knowledge, or the other way round.”

Automate to Overcome

Platforms, Volume and Velocity

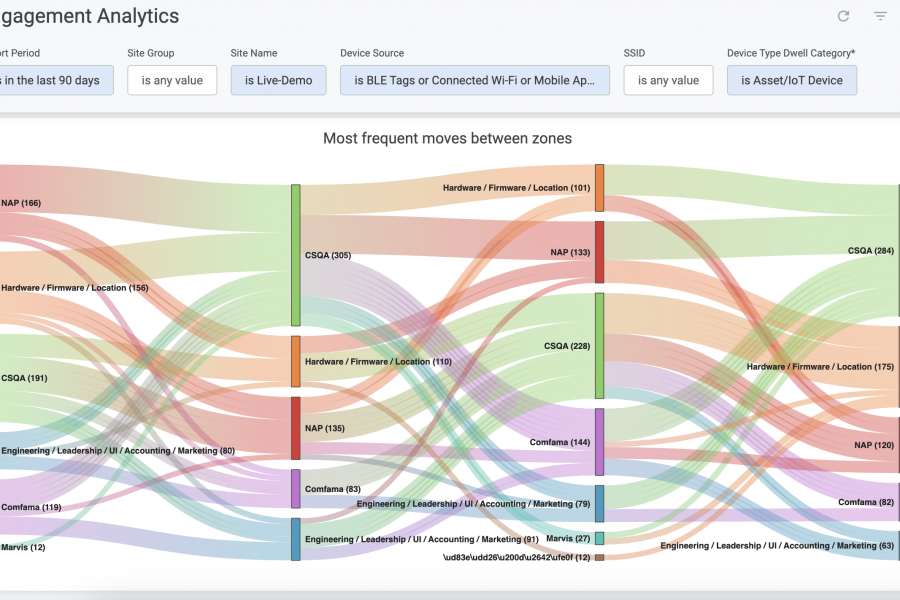

As complexity grows, the burden of managing digital objects and services multiplies. Data volumes and management overheads result in ever-increasing needs for better telemetry and higher fidelity data. Whether it’s for service assurance or security, the velocity of change impacts our ability to stay in control and achieve the right level of situational awareness. We turn to automation to keep pace, but it requires suitable logic, integration and orchestration across systems to realize the real benefits and rewards. One primary goal is reducing human toil. By minimizing time spent on operational overheads and leveraging modern paradigms like intent-based networking and closed-loop validation, talent can be targeted at higher-value initiatives to increase impact.
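To make the closed-loop idea a little more concrete, here is a minimal sketch in Python. It is not how any particular product implements intent-based networking. The data model (plain dictionaries keyed by device name), the `Drift` record, and the `find_drift` and `reconcile` helpers are all hypothetical names chosen for illustration. The loop compares intended state with observed state, pushes a correction for each mismatch, and then validates again.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical data model: {device_name: {setting_name: value}}
State = Dict[str, Dict[str, Any]]


@dataclass(frozen=True)
class Drift:
    """One mismatch between what was intended and what was observed."""
    device: str
    key: str
    intended: Any
    observed: Any


def find_drift(intent: State, observed: State) -> List[Drift]:
    """Return every setting whose observed value differs from the intent."""
    drifts: List[Drift] = []
    for device, wanted in intent.items():
        actual = observed.get(device, {})
        for key, value in wanted.items():
            if actual.get(key) != value:
                drifts.append(Drift(device, key, value, actual.get(key)))
    return drifts


def reconcile(
    intent: State,
    observed: State,
    apply: Callable[[str, str, Any], None],
) -> List[Drift]:
    """One pass of the loop: detect drift, push fixes, then re-validate.

    `apply` is an assumed callback that changes the device; in a real
    system, `observed` would be re-read from telemetry before re-validating.
    """
    for drift in find_drift(intent, observed):
        apply(drift.device, drift.key, drift.intended)
    return find_drift(intent, observed)
```

In practice, the interesting work sits behind the `apply` callback and the telemetry that feeds `observed`. The value of the pattern is that any drift left over after a pass is surfaced explicitly instead of being assumed away.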

Steve highlights one challenge: whether existing management tools can even provide or take part in the appropriate automation, be it workflow generation, orchestration or additional integration with other APIs. He emphasizes that in many scenarios, “time to provision needs to get as close to zero” as is feasible.

However, Andrew sees something new. “Our demand for automation and speed-up has been driven by something entirely different,” he says. It concerns the need to give customers the ability to steer around issues and control their own data paths at a moment’s notice. This requirement has driven a considerable amount of automation in service offerings while rolling out new self-serve features.

But it’s not just the time and productivity barrier we need to overcome. It’s the human side that requires attention. Michel points out that “When the tools are getting better, you lose people who really understand what’s, well, under the hood.” This is an often-forgotten risk of relying on multiple levels of abstraction. Even though tools become force multipliers, those using them still need to understand the fundamentals when things go wrong. These layers of abstraction can both help and hinder an engineer, depending upon the scenario. Rather than just measuring how well things perform, it’s how well they fail that can sometimes matter more. For both outcomes, “explainability” becomes a key concept, especially when leveraging advanced tools like machine learning.

Full Stack Control

Underlay and Overlay

For many, it’s all about control, and there’s a hesitancy to outsource any elements of the stack. Irrespective of the business type or customers, it’s incumbent upon business owners to understand their own overall risk. Yet, unless you build everything from the ground up yourself (including chips and devices), there’s always going to be some part of the supply chain that implies the outsourcing or out-tasking of processes or platforms. The question is, then, what percentage of the footprint can be managed and how?

Steve points out that it’s not always regulatory, latency or compliance concerns that drive the choices of small to medium-sized businesses. Sometimes it’s an even more fundamental desire to be able to “touch, feel and control” their infrastructure footprint. There’s an “idea of not letting go, being able to physically control things,” along with some regionality and pride in keeping bits local. This approach enables some hosting and private cloud players to control all facets of the offering while serving customers hyper-locally. For some, trust is built on the ability to, as Steve puts it, “drive up, walk in there and touch the rack!”

Andrew chimes in here to reinforce regionalization as a growing driver. He says he is “seeing a lot of countries now passing very strict data regionalization and data localization laws, which prohibit taking certain data out of the country.” Jonas echoes this but goes further to say that in some geographies, “The focus is on installations that are cheaper than the public clouds.” Hence, it’s actually cost-driven and often related to licensing and CPU utilization.

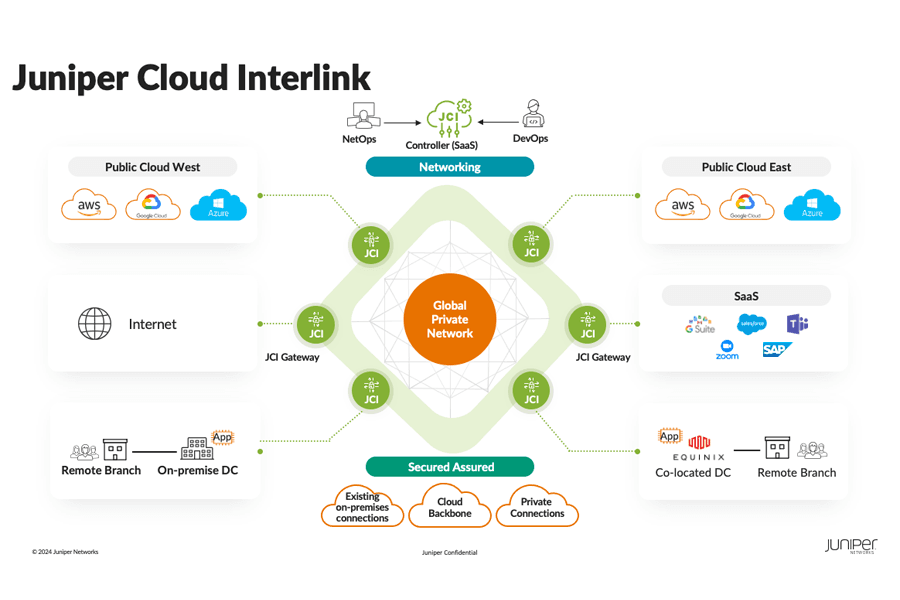

Peace of mind comes in many forms, and for Juniper Networks, the future of data center networking revolves around building trust and confidence while driving down operational complexity. The recent acquisition of Apstra brings a renewed focus on full-lifecycle network automation, leveraging intent-based networking and closed-loop assurance to drive the journey towards a real Self-Driving Network™. By providing a solution that controls both the underlay and overlay, fabrics can now easily scale while the total cost of ownership (TCO) is dramatically lowered.

In the final blog of this series, we’ll share more insights from the Ambassadors on topics such as customer expectations, troubleshooting and the road ahead.