IT sits at the core of an enterprise’s digital transformation and bears the burden of providing on-demand infrastructure that delivers high uptime and agility. The data center is the focal point where this transformation begins, and enterprise data center fabrics must evolve to keep pace with the changing needs of the business. Advances in data center networking technologies can come to the rescue!

Since the early days of VXLAN, its ability to support 16 million Layer 2 segments has been touted as its killer app. However, for the vast majority of enterprises, 4K VLANs are plenty. In fact, the average enterprise probably needs no more than 200-300 VLANs. So is a VXLAN fabric something enterprises need to consider? The answer is an emphatic YES. Let us see why.
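To put the "16 million vs. 4K" comparison in numbers: an 802.1Q VLAN ID is a 12-bit field, while the VXLAN Network Identifier (VNI) defined in RFC 7348 is 24 bits wide. The quick Python calculation below is only an illustration (the variable and function names are our own) of where both figures come from.

```python
# 802.1Q VLAN ID: 12-bit field; IDs 0 and 4095 (0xFFF) are reserved.
VLAN_ID_BITS = 12
# VXLAN Network Identifier (VNI): 24-bit field in the VXLAN header (RFC 7348).
VNI_BITS = 24

def id_space(bits: int) -> int:
    """Number of distinct values an unsigned field of `bits` bits can hold."""
    return 2 ** bits

usable_vlans = id_space(VLAN_ID_BITS) - 2  # drop the two reserved VLAN IDs
vni_count = id_space(VNI_BITS)

print(f"usable VLAN IDs: {usable_vlans}")  # 4094
print(f"VXLAN VNIs:      {vni_count}")     # 16777216
print(f"ratio:           {vni_count // id_space(VLAN_ID_BITS)}x")  # 4096x
```

As the article argues, the bigger ID space is rarely the reason an enterprise adopts VXLAN; the architectural benefits discussed below matter far more.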

THE DATA CENTER NETWORK JOURNEY SO FAR

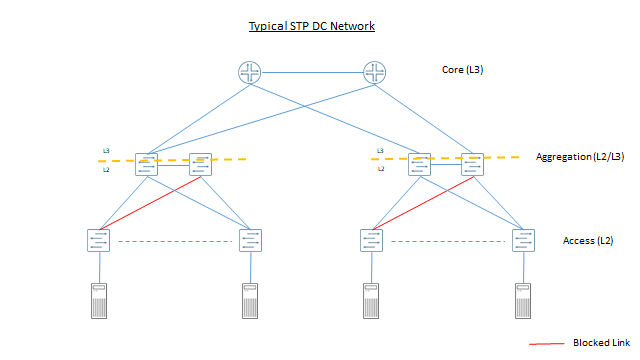

The first generation of data center networks was built using spanning tree protocols. Several extensions followed to optimize flooding and improve convergence. These networks followed the typical 3-tier (access, aggregation, core) architecture depicted above.

Spanning tree protocols make terrible use of network capacity, because they block redundant links to keep the topology loop-free. Even with all the extensions, they are imperfect at preventing network meltdowns and solving convergence issues.
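Why does spanning tree waste capacity? To stay loop-free, each bridge forwards toward the root bridge on a single root port and every other redundant link ends up blocked. The Python sketch below is a deliberately simplified model, not the IEEE 802.1D algorithm itself: it assumes equal-cost links, assumes `core1` has already been elected root, and breaks ties by neighbor name in place of bridge ID. The switch names and topology are hypothetical.

```python
from collections import deque

def spanning_tree(links, root):
    """Return (forwarding, blocked) link sets for an STP-like tree.

    Simplified model: all links cost the same, so root path cost is hop
    count. Each non-root bridge keeps one root port facing the neighbor
    closest to the root; ties go to the lowest neighbor name (standing in
    for bridge ID). Assumes the topology is connected.
    """
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    # Breadth-first search gives every bridge its hop distance to the root.
    dist = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for nbr in adj[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)

    # Each non-root bridge forwards only on its single root port.
    forwarding = set()
    for node in adj:
        if node == root:
            continue
        upstream = min(n for n in adj[node] if dist[n] == dist[node] - 1)
        forwarding.add(frozenset((node, upstream)))

    blocked = {frozenset(link) for link in links} - forwarding
    return forwarding, blocked

# Hypothetical 3-tier topology: 2 core, 2 aggregation, 4 access switches.
links = [("core1", "core2"), ("agg1", "agg2")]
links += [(a, c) for a in ("agg1", "agg2") for c in ("core1", "core2")]
links += [(s, a) for s in ("acc1", "acc2", "acc3", "acc4") for a in ("agg1", "agg2")]

fwd, blk = spanning_tree(links, root="core1")
print(len(links), len(fwd), len(blk))  # 14 7 7
```

In this model half of the 14 links are blocked: every access switch sends all of its traffic to `agg1`, and the uplinks to `agg2` sit idle until something fails.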

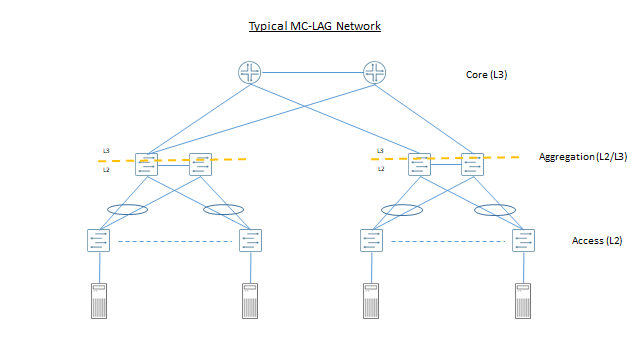

Multi-chassis LAG (MC-LAG) and similar technologies arose from the shadows of STP to resolve the network utilization issues, as depicted above.

MC-LAG is widely deployed in enterprises but suffers from the following limitations:

- Implementations are proprietary to each vendor and are not interoperable with one another

- The solution is limited to two aggregation devices, so the loss of a single device leaves the network with only 50% of its capacity

While MC-LAG helped with the network capacity problem, it did not fundamentally change the architecture. STP and MC-LAG are both optimized to serve north-south traffic. The rise of VMs and containers means that east-west traffic dominates in data center networks today. Since the L3 demarcation points sit on the aggregation layer (and sometimes the core, depending on design), east-west traffic that crosses VLAN boundaries is routed at the aggregation layer. Ideally, this traffic should be routed as close to the source as possible to optimize application performance and network utilization.

LEARNING FROM PUBLIC CLOUD

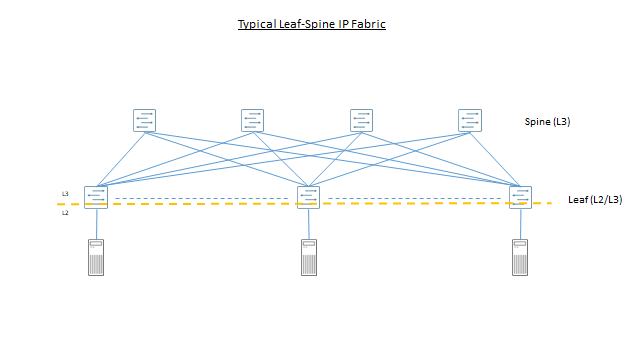

Public cloud providers demonstrated how on-demand infrastructure is built at scale. The building block for such a network is a leaf-spine IP fabric (depicted above).

An IP fabric in the underlay has the following advantages:

- SCALE: Using constructs such as BGP, IP fabrics can scale to support tens of thousands of servers. Most enterprises will never need that kind of scale, but when architecting a next-generation data center fabric, network architects should pick a technology that keeps the door open to future expansion beyond what legacy technologies can provide

- RESILIENCY: IP fabrics can leverage well-established mechanisms such as BFD (Bidirectional Forwarding Detection) to detect failures quickly and improve resiliency. After all, IP is the technology that runs the Internet

- RESILIENCY: IP-based fabrics can easily accommodate four or more spines, so losing a single spine does not take down 50% of the network capacity

- SIMPLE: Eliminates the use of STP and other legacy technologies in the fabric

- SIMPLE: Public cloud providers have an operations-led mindset. At the scale and level of automation at which they operate, operations has to be top of mind when making architectural choices. Again, IP emerges as the winner

- OPEN: Building on open standard technologies reduces vendor lock-in and enables multi-vendor deployments

- DC TRAFFIC OPTIMIZED: Layer 3 routing moves closer to the source, optimizing the path taken by east-west traffic. While this is typically on the leaf, routing can also move onto the compute node by extending the IP endpoint to a virtual router on the server
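The scale and resiliency points above rest on equal-cost multipath (ECMP) routing: a leaf hashes each flow's 5-tuple to choose one of its spine uplinks, so every spine carries traffic and a failed spine removes only its own share of capacity. The Python sketch below assumes SHA-256 plus a plain modulo purely for illustration; switch ASICs use their own hardware hash functions, and many platforms add resilient hashing to limit flow remapping when a link fails. Spine names, addresses, and ports are hypothetical, and every device is assumed to have equal bandwidth.

```python
import hashlib

def pick_spine(flow: tuple, spines: list) -> str:
    """Pick a spine uplink for a flow by hashing its 5-tuple (simplified ECMP).

    Assumption: SHA-256 + modulo stands in for a switch's hardware hash.
    All packets of one flow map to the same spine, which preserves ordering.
    """
    key = "|".join(map(str, flow)).encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return spines[digest % len(spines)]

def surviving_capacity(paths: int, failed: int = 1) -> float:
    """Fraction of capacity left after `failed` of `paths` equal-bandwidth devices fail."""
    return (paths - failed) / paths

spines = ["spine1", "spine2", "spine3", "spine4"]
# Hypothetical flows: (src IP, dst IP, IP protocol, src port, dst port)
flows = [("10.0.1.10", "10.0.2.20", 6, 49152 + i, 443) for i in range(1000)]

counts = {s: 0 for s in spines}
for flow in flows:
    counts[pick_spine(flow, spines)] += 1
print(counts)  # every spine carries a share; the exact split depends on the hash

# If spine4 fails, the leaf simply hashes across the surviving spines.
alive = spines[:3]
remapped = {pick_spine(flow, alive) for flow in flows}
print(sorted(remapped))  # only surviving spines appear

for n in (2, 4, 8):
    print(n, surviving_capacity(n))  # prints 2 0.5, then 4 0.75, then 8 0.875
```

With two aggregation devices (the MC-LAG case), a single failure leaves 50% of the capacity; with four spines it leaves 75%, and with eight, 87.5%.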

While the path to public cloud is almost certain for most enterprises, enterprises are also realizing that the practical end state is likely multicloud. Economics and application requirements will dictate that some applications are better served from a private cloud. Lines of business, however, will demand private infrastructure that is available on demand, operates with high availability, and enables the business to be agile. Therefore, what has worked for the public cloud can certainly work for the enterprise, and an IP-based underlay is a strong foundation for private cloud.

BUT ENTERPRISE DATA CENTERS HAVE MORE COMPLEX REQUIREMENTS

However, enterprise IT faces unique challenges that public cloud providers are not burdened with. They are:

- While public clouds deal mostly with homogeneous virtualized workloads, enterprises carry forward a lot of legacy debt and must handle both virtualized and bare metal workloads. Some of the applications running on bare metal may be 10-30 years old but are business critical and cannot be abandoned. Therefore, while public cloud IaaS providers can originate their overlays on the virtualized host, enterprises may not have that luxury.

- Even applications that have undergone physical-to-virtual (P2V) migration may still require Layer 2 adjacency. Public cloud SaaS providers deploy applications that were born in the cloud and are not burdened by the requirements of legacy applications. Moreover, an enterprise data center fabric needs to interconnect born-in-the-cloud and legacy applications. For example, the web tier of an application designed with modern principles may need to talk to legacy applications in the database or logic tier.

- Multi-tenancy is inherent in an enterprise. Again, public cloud SaaS providers may be exempt from the complexity of implementing multi-tenancy.

VXLAN and EVPN TO THE RESCUE

Enterprises need to deliver a true multiservice data center. VXLAN comes to the rescue and provides the following functionality required by private clouds:

- Layer 2 adjacency required by legacy applications that were not born in the cloud

- Stitch between legacy and modern workloads

- Stitch between virtual workloads (VMs or containers) and physical workloads (bare metal)

- Any-workload-anywhere in the DC: With an IP-only fabric, Layer 3 moves to the edge of the fabric (the leaf), so by definition the placement of a legacy workload that requires L2 adjacency, or VM mobility for workloads in a given VLAN, is restricted to the L3 boundary. VXLAN provides a Layer 2 overlay that rides on top of a Layer 3 IP underlay, allowing workloads to be placed or moved anywhere in the data center, or across data centers if needed
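Concretely, a VTEP carries a Layer 2 frame across the Layer 3 underlay by prefixing it with an 8-byte VXLAN header and sending the result inside a UDP datagram (destination port 4789) over IP. Here is a minimal Python sketch of the header layout from RFC 7348: a flags byte with the I bit set, a 24-bit VNI, and reserved fields set to zero. The function names are illustrative, and the outer Ethernet, IP, and UDP headers are left out.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN (RFC 7348)
FLAG_I = 0x08          # "I" flag: set to 1 to mark the VNI as valid

# Layout: 1 flags byte, 3 reserved bytes, then a 32-bit word whose upper
# 24 bits are the VNI and lower 8 bits are reserved. "!" = network byte order.
HEADER = struct.Struct("!B3xI")

def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return HEADER.pack(FLAG_I, vni << 8)

def parse_vni(packet: bytes) -> int:
    """Return the VNI from the first 8 bytes of a VXLAN payload."""
    flags, word = HEADER.unpack(packet[:HEADER.size])
    if not flags & FLAG_I:
        raise ValueError("I flag not set; VNI is not valid")
    return word >> 8

def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """VXLAN header + original Ethernet frame, i.e. the outer UDP payload.

    The sending VTEP would add outer UDP (dst port VXLAN_UDP_PORT), IP and
    Ethernet headers; those are omitted from this sketch.
    """
    return vxlan_header(vni) + inner_frame

hdr = vxlan_header(10100)
print(hdr.hex())  # 0800000000277400
payload = encapsulate(b"\x00" * 14, 10100)  # placeholder 14-byte inner Ethernet header
print(parse_vni(payload))  # 10100
```

Because the underlay only ever sees an ordinary UDP/IP packet addressed to the remote VTEP, it can be a plain routed leaf-spine fabric, while the hosts on either end still share one Layer 2 segment identified by the VNI.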

Moreover, VXLAN is an open standard, unlike the proprietary alternatives that were invented to solve the same problem.

The next design choice is how learning takes place in a VXLAN overlay. Here we have three choices:

- Data Plane Learning: Flood and learn was the mechanism proposed in the original VXLAN draft for the edge nodes in an overlay (called VTEPs) to learn the MAC addresses of remote endpoints connected to the data center fabric. The downside of this mechanism is that it relies on multicast in the fabric and suffers from the same flooding-induced network meltdowns that traditional Layer 2 networks suffer from. Aside from a few deployments, it did not catch on in the industry as a popular choice.

- OVSDB: OVSDB emerged as a choice for VXLAN overlay management when SDN controllers are involved. It does not suffer from the flooding issues inherent in data plane learning. SDN controllers can manage overlay edges both on physical devices (hardware VTEPs) and on virtualized hosts (software VTEPs). However, OVSDB mandates the use of an SDN controller. Network operators who want to take a phased approach to migrating to an orchestrated private cloud (to mitigate risk) do NOT have the option with OVSDB to build a controller-less private cloud first and then phase in an SDN controller as their risk appetite allows.

- EVPN: EVPN is a BGP-based control plane mechanism for MAC address learning and offers the following advantages:

  - It is BGP-based! BGP is a well-established protocol that delivers the Internet-grade resiliency needed for large-scale deployments and offers a rich suite of policy controls. It is a technology familiar to network operators and minimizes the need to learn and deploy multiple technologies

  - An overlay built with BGP as the control plane can be operated in controller-less mode as well as with an SDN controller

  - EVPN can be used to build both Layer 2 and Layer 3 multi-tenancy.

  - It greatly reduces flooding in the fabric, since MAC address distribution happens in the control plane.
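The difference between data plane learning and EVPN shows up in how a VTEP fills its MAC table. The toy Python model below (hypothetical classes, not any vendor's API) contrasts the two. With flood and learn, the first frame toward an unknown MAC is flooded to every VTEP in the segment, and the MAC's location is only gleaned later from return traffic. With EVPN, the VTEP behind the host advertises a MAC route over BGP, and remote VTEPs install it before any traffic flows. A real EVPN Type-2 (MAC/IP advertisement) route per RFC 7432 also carries a route distinguisher, an Ethernet segment identifier, an Ethernet tag, an optional IP address, and route targets; those fields are omitted, and the "BGP" step here is just a loop.

```python
from dataclasses import dataclass, field

@dataclass
class Vtep:
    ip: str
    # (vni, mac) -> IP of the remote VTEP that the MAC sits behind
    mac_table: dict = field(default_factory=dict)
    floods_sent: int = 0

    def send(self, vni, dst_mac, fabric):
        """Return the VTEP IPs a frame is sent to; flood if dst_mac is unknown.

        Assumption: every VTEP in `fabric` is a member of this VNI.
        """
        remote = self.mac_table.get((vni, dst_mac))
        if remote is not None:
            return [remote]
        self.floods_sent += 1
        return [v.ip for v in fabric if v is not self]

    def learn_from_data_plane(self, vni, src_mac, src_vtep_ip):
        """Flood and learn: glean a source MAC from a decapsulated frame."""
        self.mac_table[(vni, src_mac)] = src_vtep_ip

@dataclass(frozen=True)
class EvpnMacRoute:
    """Simplified EVPN Type-2 (MAC/IP advertisement) route."""
    vni: int
    mac: str
    next_hop: str  # IP address of the advertising VTEP

def bgp_advertise(route, fabric):
    """Stand-in for BGP: every other VTEP installs the advertised route."""
    for vtep in fabric:
        if vtep.ip != route.next_hop:
            vtep.mac_table[(route.vni, route.mac)] = route.next_hop

MAC_A = "00:00:5e:00:53:0a"  # host behind VTEP 10.0.0.1 (documentation MAC)
VNI = 10100

# Flood and learn: VTEP 10.0.0.2 floods its first frame toward MAC_A.
fabric = [Vtep("10.0.0.1"), Vtep("10.0.0.2"), Vtep("10.0.0.3")]
print(fabric[1].send(VNI, MAC_A, fabric))  # ['10.0.0.1', '10.0.0.3']
# Only after MAC_A's host sends a frame back does 10.0.0.2 learn its location.
fabric[1].learn_from_data_plane(VNI, MAC_A, "10.0.0.1")
print(fabric[1].send(VNI, MAC_A, fabric))  # ['10.0.0.1']

# EVPN: 10.0.0.1 advertises MAC_A via BGP before any traffic flows.
fabric = [Vtep("10.0.0.1"), Vtep("10.0.0.2"), Vtep("10.0.0.3")]
bgp_advertise(EvpnMacRoute(VNI, MAC_A, "10.0.0.1"), fabric)
print(fabric[1].send(VNI, MAC_A, fabric), fabric[1].floods_sent)  # ['10.0.0.1'] 0
```

In the EVPN case the first frame goes straight to the right VTEP with no flooding, which is the behavior the bullets above describe. Broadcast and multicast traffic still has to be replicated, so EVPN reduces flooding rather than eliminating it.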

If you are convinced that EVPN and VXLAN are the way forward for your enterprise to deliver a modern private cloud, I invite you to explore Juniper’s architecture guide for a detailed dive into deploying such a fabric.