At Juniper Networks, we’re big believers in disaggregating hardware from software, and even in disaggregating software itself. Needless to say, we’ve followed the evolution of Microsoft’s Software for Open Networking in the Cloud (SONiC) with great interest. Microsoft originally designed SONiC to meet its own requirements as one of the world’s largest cloud providers: it wanted to build and manage a high-performance, highly available, secure cloud network using the same disaggregated network operating system (NOS) regardless of the underlying hardware. SONiC proved that a NOS could meet the fabric demands of cloud data centers, and Microsoft then made it available to the broader networking community as an open-source, cloud-optimized NOS, along with the SONiC Switch Abstraction Interface (SAI).

We think this kind of software disaggregation is simply sound engineering, which is why disaggregation plays a key role in Juniper’s strategies for cloud, multicloud and distributed edge cloud solutions. It’s also why we continue to innovate with SONiC, combining its strengths with our own networking expertise through native integration with Juniper hardware. We see this as another way to further our commitment to disaggregation and to give our cloud and service provider customers open programmability, choice, automation and a broad network ecosystem.

SONiC has come a long way in just a few years, but we aim to take it further, beyond fixed-system IP fabric deployments. Our goal is to make SONiC ubiquitous throughout the data center, WAN core and edge. We’ve now taken a major step toward that goal by supporting platforms with multiple packet forwarding engines (PFEs) in SONiC. By bringing SONiC to multi-PFE chassis, we can provide a simpler, better-performing network solution for the most demanding cloud and service provider environments, without sacrificing the flexibility of an open, disaggregated NOS.

Evolving SONiC to Support Multi-PFE Platforms

Multi-PFE SONiC platforms such as the Juniper PTX10008 offer many advantages. Systems with multiple ASICs provide superior switching performance and huge FIB scale, often handling 2 million or more FIB entries. They offer greater WAN port density with minimal cabling complexity, and their rack space and power budgets are known in advance. Multi-PFE platforms may support high-speed (100G and 400G) Ethernet interfaces, as well as advanced features such as line-rate MACsec, MPLS and hot-swappable field-replaceable units (FRUs).

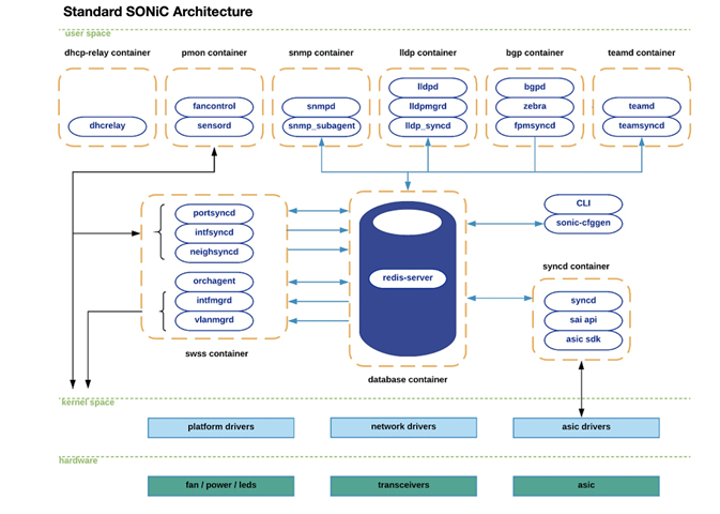

Of course, shifting from a single-PFE to a multi-PFE chassis introduces some changes. Let’s look at this evolution, starting with the standard SONiC architecture shown in Figure 1. SONiC is typically deployed on a fixed-form-factor platform with a single networking ASIC, such as the Broadcom Tomahawk2. The system runs Debian Linux, with several Docker containers providing the SONiC infrastructure and routing services. The “syncd” process programs the networking ASIC through the SAI, and in this standard model, SAI invokes an SDK provided by the ASIC vendor. (Juniper’s QFX5200 and QFX5210 platforms, for example, use this standard SONiC architecture.)
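To make the layering concrete, here is a minimal Python sketch of the call path in the standard model: a syncd-like loop hands routes to a SAI-style facade, and a single vendor backend turns them into (simulated) SDK calls. Every class and method name here (`SaiSwitch`, `VendorSdkBackend`, `create_route_entry`, `syncd_apply`) is a hypothetical stand-in. The real SAI is a C API, and the real syncd consumes objects from SONiC’s ASIC_DB rather than receiving direct function calls.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RouteEntry:
    """A simplified route: destination prefix and next-hop IP (illustrative only)."""
    prefix: str
    next_hop: str


class SaiBackend(ABC):
    """Hypothetical stand-in for the vendor code that sits beneath SAI."""

    @abstractmethod
    def program_route(self, route: RouteEntry) -> None:
        ...


@dataclass
class VendorSdkBackend(SaiBackend):
    """Models a single-ASIC switch: one vendor SDK, one forwarding table."""
    asic_name: str
    fib: dict = field(default_factory=dict)

    def program_route(self, route: RouteEntry) -> None:
        # On real hardware this would be a call into the ASIC vendor's SDK.
        self.fib[route.prefix] = route.next_hop


class SaiSwitch:
    """Hypothetical SAI-like facade: callers never see which backend is in use."""

    def __init__(self, backend: SaiBackend) -> None:
        self._backend = backend

    def create_route_entry(self, prefix: str, next_hop: str) -> None:
        self._backend.program_route(RouteEntry(prefix, next_hop))


def syncd_apply(switch: SaiSwitch, routes: dict) -> None:
    """Sketch of syncd's role: push each desired route through the SAI facade."""
    for prefix, next_hop in routes.items():
        switch.create_route_entry(prefix, next_hop)


asic = VendorSdkBackend("tomahawk2")
syncd_apply(SaiSwitch(asic), {"10.0.0.0/24": "192.0.2.1"})
print(asic.fib)  # {'10.0.0.0/24': '192.0.2.1'}
```

The point of the facade is that only the backend class knows about the hardware; swapping the backend leaves everything above SAI untouched.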

So, what happens when we apply the standard SONiC architecture to a multi-PFE chassis? First, the optics and cables that would typically be used to connect multiple SONiC switches get collapsed onto copper traces between line cards in a chassis. This provides power and heat savings, as well as cleaner connections by limiting the cabling required. We also now get the benefit of shared power and cooling. So far, so good.

Data center spine platforms typically use multiple packet forwarding engines, each with one or more networking ASICs, to maximize parallelism and switching throughput. There are presently two approaches to running SONiC on such multi-PFE architectures. The first treats each line card as a discrete routing device, interconnected with protocols familiar to network engineers such as OSPF, IS-IS and BGP, much like a discrete fabric. With this approach, the overall system is as complex to manage as multiple separate devices, and removing a line card effectively removes an entire SONiC node from the network. Worse, in WAN and data center designs built on chassis systems, routing protocol state grows not just with the number of chassis deployed but also with the number of line cards installed in each one. Because every line card is its own node, networks that rely on IGPs such as IS-IS and OSPF face an explosion of nodes per area, and as SONiC gains features over time, this may affect the scalability and stability of those networks.
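A quick back-of-the-envelope calculation shows how fast this grows. The Python sketch below counts IGP nodes when every line card runs as its own router versus when each chassis is a single node, using a deliberately simplified link-state model in which every node stores one LSP/LSA per node in the area. The 20 chassis with 8 line cards each are arbitrary illustrative inputs, not a reference design.

```python
def igp_nodes(chassis: int, line_cards_per_chassis: int, per_card_nodes: bool) -> int:
    """IGP nodes contributed by a group of chassis.

    per_card_nodes=True models each line card as a discrete SONiC router;
    False models the whole chassis as a single node.
    """
    return chassis * line_cards_per_chassis if per_card_nodes else chassis


def lsdb_entries_total(nodes: int) -> int:
    """Simplified link-state cost: each node stores one LSP/LSA per node in the area,
    so the total number of stored entries across the area grows as nodes squared."""
    return nodes * nodes


for label, per_card in (("one node per line card", True), ("one node per chassis", False)):
    n = igp_nodes(20, 8, per_card)
    print(f"{label}: {n} nodes, {lsdb_entries_total(n)} LSDB entries area-wide")
# one node per line card: 160 nodes, 25600 LSDB entries area-wide
# one node per chassis: 20 nodes, 400 LSDB entries area-wide
```

Multiplying the node count by 8 multiplies this simplified area-wide database size by 64, which is why per-line-card nodes scale poorly as chassis are added.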

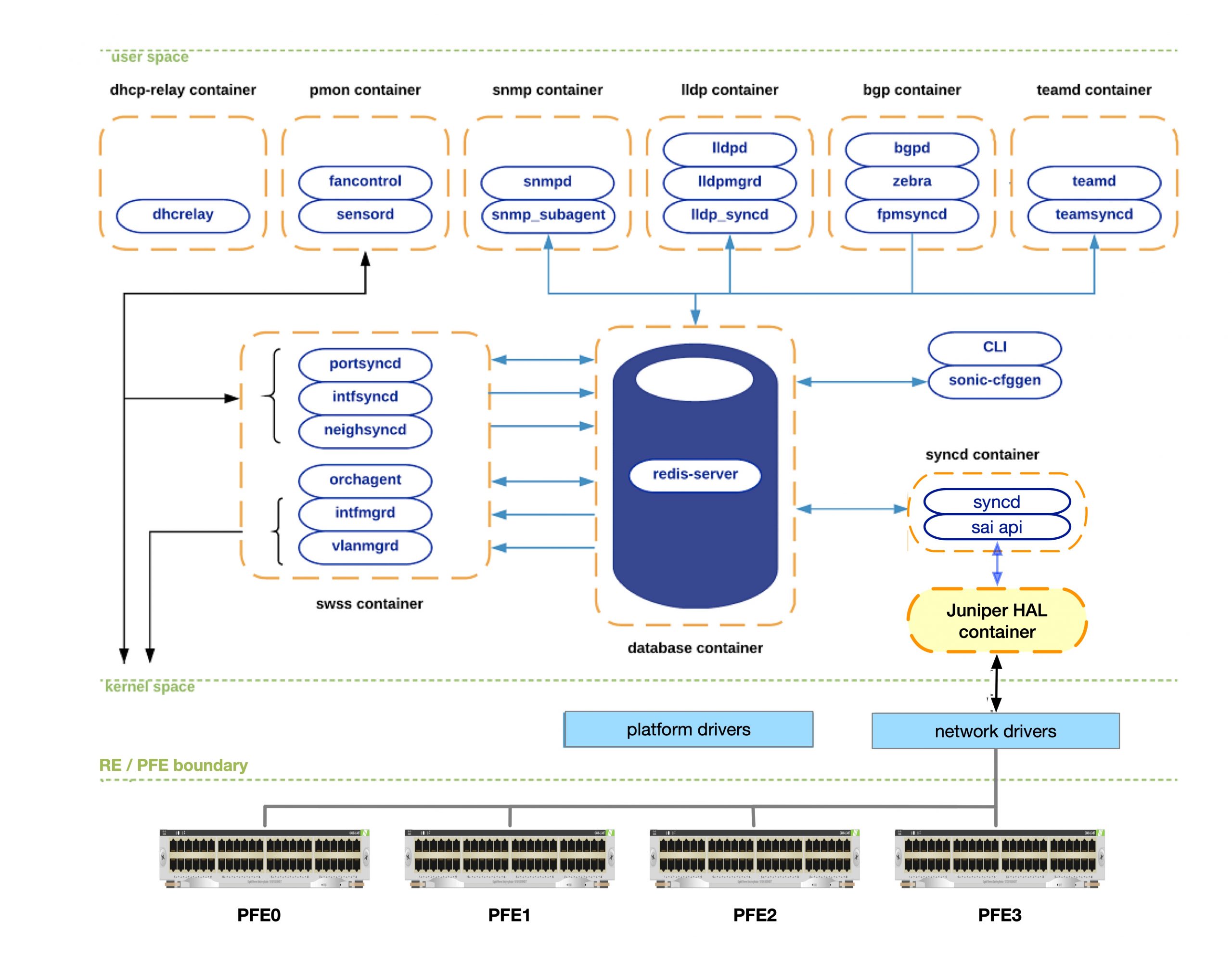

To Juniper, these problems looked like an opportunity. So we created a solution that brings all the benefits of SONiC to WAN core and data center networks: multi-PFE SONiC. You can see it in action on the PTX10008, Juniper’s newest 400G modular chassis, which runs multi-PFE SONiC. Figure 2 illustrates this architecture.

Comparing the standard architecture with Juniper’s multi-PFE architecture, note first that the user space portions are almost identical, with one exception: in the standard model, SAI invokes a vendor-provided ASIC SDK, while in multi-PFE SONiC, SAI uses the Juniper hardware abstraction layer (HAL), highlighted in yellow. A second difference is that the networking ASICs are integral parts of flexible PIC concentrator (FPC) line cards, which attach to a hardware fabric. A third difference is that the system is now effectively a cluster within a chassis: SONiC runs on one Linux node, the Routing Engine (RE), and each line card is also a Linux node. As a result, removing a line card only removes its ports, not a SONiC node.
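The following Python sketch models the cluster-in-a-chassis idea under simplifying assumptions: a single SONiC control plane sees one flat set of front-panel ports, and a hypothetical HAL layer maps each port to the FPC that hosts it and fans route updates out to every FPC. The class names, the consecutive `Ethernet<N>` port numbering and the ports-per-FPC count are illustrative assumptions, not Juniper’s HAL API.

```python
from dataclasses import dataclass, field


@dataclass
class Fpc:
    """One line card (FPC) with its own forwarding table (illustrative model)."""
    slot: int
    fib: dict = field(default_factory=dict)


class HypotheticalHal:
    """Stand-in for a HAL beneath SAI that spreads one SONiC view across many FPCs."""

    def __init__(self, slots: int, ports_per_fpc: int) -> None:
        self.fpcs = {slot: Fpc(slot) for slot in range(slots)}
        # Map SONiC-style port names to the FPC slot that owns them.
        self.port_to_slot = {
            f"Ethernet{slot * ports_per_fpc + i}": slot
            for slot in range(slots)
            for i in range(ports_per_fpc)
        }

    def program_route(self, prefix: str, next_hop: str) -> None:
        # Assumption: every FPC gets the route so any ingress port can forward.
        for fpc in self.fpcs.values():
            fpc.fib[prefix] = next_hop

    def remove_fpc(self, slot: int) -> list:
        """Pull a line card: its ports disappear, but the single SONiC node stays up."""
        del self.fpcs[slot]
        lost = [port for port, s in self.port_to_slot.items() if s == slot]
        for port in lost:
            del self.port_to_slot[port]
        return lost


hal = HypotheticalHal(slots=2, ports_per_fpc=2)
hal.program_route("10.0.0.0/24", "192.0.2.1")
print(hal.remove_fpc(1))         # ['Ethernet2', 'Ethernet3']
print(sorted(hal.port_to_slot))  # ['Ethernet0', 'Ethernet1']
print(hal.fpcs[0].fib)           # {'10.0.0.0/24': '192.0.2.1'}
```

Contrast this with the per-line-card approach, where pulling a card would withdraw a whole routing node and trigger protocol reconvergence.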

Together, these features provide the simplicity, manageability, resiliency, performance and scale required in data center spine and WAN core networks, deployments that traditional disaggregated SONiC implementations do not serve well.

Hardware Architecture Makes a Difference

When running SONiC, cell-based fabrics such as the one in the PTX10008 chop packets into cells and spray them very efficiently across the fabric cards (spine nodes). Compare this to the flow-based Ethernet ECMP mechanism in the standard deployment, where each flow is hashed onto a single link; depending on flow sizes and how the flows happen to hash, fabric link utilization can end up unequal. A cell-based design lets operators run the system at a higher overall utilization without worrying about dropping traffic on a single overutilized link in the backplane or fabric.
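To see why spraying helps, here is a small Python simulation under simplified assumptions: a handful of flows of uneven size are placed on fabric links either by hashing each whole flow onto one link (flow-based ECMP) or by cutting all traffic into equal cells dealt round-robin across every link (cell spraying). The flow sizes, the four links and the use of CRC32 as the hash are arbitrary illustrative choices, not how any particular ASIC hashes.

```python
import zlib


def ecmp_load(flows: dict, links: int) -> list:
    """Flow-based ECMP: each flow's entire volume lands on one hashed link."""
    load = [0.0] * links
    for flow_id, volume in flows.items():
        link = zlib.crc32(flow_id.encode()) % links
        load[link] += volume
    return load


def sprayed_load(flows: dict, links: int, cell_size: float = 1.0) -> list:
    """Cell spraying: cut traffic into fixed-size cells and deal them round-robin."""
    load = [0.0] * links
    next_link = 0
    for volume in flows.values():
        remaining = volume
        while remaining > 0:
            cell = min(cell_size, remaining)
            load[next_link] += cell
            next_link = (next_link + 1) % links
            remaining -= cell
    return load


def imbalance(load: list) -> float:
    """Busiest link divided by the average link (1.0 means perfectly even)."""
    return max(load) / (sum(load) / len(load))


flows = {"flowA": 40, "flowB": 30, "flowC": 10, "flowD": 10, "flowE": 5, "flowF": 5}
print(imbalance(sprayed_load(flows, 4)))  # 1.0
print(imbalance(ecmp_load(flows, 4)))     # at least 1.6: flowA alone (40) exceeds the 25-unit average
```

Whatever the hash, ECMP can never split flowA, so some link carries at least 1.6 times the average load; spraying evens the links out. Real cell fabrics must also reassemble and reorder cells at egress, which this sketch ignores.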

Ethernet-based fabric systems are typically designed to account for this imbalance, and as a result, most of them require monitoring that exposes critical utilization counters. Watching these counters takes operational vigilance: operators must know which thresholds matter, make sure those thresholds are monitored proactively (not just after an outage), and alarm on them correctly within their monitoring infrastructure. (Don’t worry, we’ve made this task easier, too.)
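A minimal Python sketch of that kind of vigilance: derive per-link utilization from two byte-counter samples and raise warning or critical alarms against thresholds. The link names, the counter source and the 70%/90% thresholds are hypothetical placeholders, and counter wraparound is ignored for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alarm:
    link: str
    severity: str
    utilization: float


def utilization(prev_bytes: int, curr_bytes: int, interval_s: float, capacity_bps: float) -> float:
    """Link utilization (0.0 to 1.0) from two byte-counter samples interval_s apart.

    Assumes the counter did not wrap between samples.
    """
    return (curr_bytes - prev_bytes) * 8 / (interval_s * capacity_bps)


def check_links(samples: dict, interval_s: float, capacity_bps: float,
                warn: float = 0.70, crit: float = 0.90) -> list:
    """samples maps link name -> (previous byte count, current byte count).

    The thresholds are illustrative defaults, not recommendations.
    """
    alarms = []
    for link, (prev, curr) in sorted(samples.items()):
        util = utilization(prev, curr, interval_s, capacity_bps)
        if util >= crit:
            alarms.append(Alarm(link, "critical", util))
        elif util >= warn:
            alarms.append(Alarm(link, "warning", util))
    return alarms


samples = {  # hypothetical 100G fabric links sampled 10 seconds apart
    "fab0": (0, 118_750_000_000),  # 95% utilized
    "fab1": (0, 100_000_000_000),  # 80% utilized
    "fab2": (0, 37_500_000_000),   # 30% utilized
}
alarms = check_links(samples, interval_s=10, capacity_bps=100e9)
print([(a.link, a.severity) for a in alarms])  # [('fab0', 'critical'), ('fab1', 'warning')]
```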

Monitoring a Multi-PFE SONiC Platform

System abstraction is a key SONiC advantage. Because SAI hides the low-level platform details, SONiC attempts to make the platforms it’s running on appear homogeneous, even though the underlying hardware may differ greatly (as we have seen when comparing the standard and multi-PFE SONiC architectures). This homogeneity, which is usually a SONiC strength, can sometimes be a problem, as hiding low-level platform details can make system monitoring and troubleshooting much more difficult.

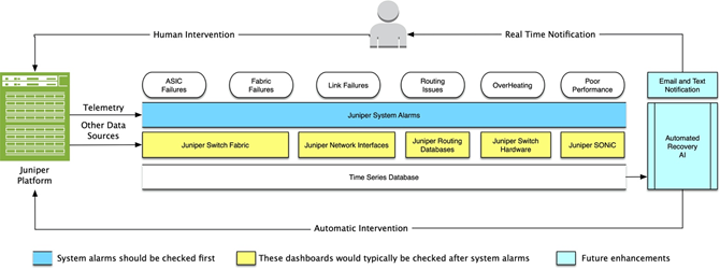

To remedy this monitoring issue, we have not only added new information to SONiC’s Redis databases (available through SONiC telemetry) but also created new SONiC CLIs that reveal some of this previously hidden information. In addition, Juniper provides an integrated, graphical real-time system monitor on all multi-PFE SONiC platforms.
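As a rough illustration of consuming such data, the Python sketch below walks a SONiC Redis database using redis-py-style `scan_iter(match=...)` and `hgetall(name)` calls and groups the hashes by table. On a default SONiC image, STATE_DB is Redis database 6 and uses `|` as its key separator, but the `FABRIC_LINK_TABLE` name and its fields are hypothetical placeholders; the tables Juniper actually adds are not named here. A small in-memory fake stands in for a live connection so the sketch runs anywhere.

```python
from collections import defaultdict
from fnmatch import fnmatchcase


def read_tables(client, pattern: str = "*", separator: str = "|") -> dict:
    """Group Redis hashes into {table: {key: fields}}.

    client is anything exposing redis-py's scan_iter(match=...) and hgetall(name),
    e.g. redis.Redis(db=6, decode_responses=True) for STATE_DB (str keys required).
    """
    tables = defaultdict(dict)
    for full_key in client.scan_iter(match=pattern):
        table, _, key = full_key.partition(separator)
        tables[table][key] = client.hgetall(full_key)
    return dict(tables)


class FakeRedis:
    """In-memory stand-in so the sketch runs without a switch."""

    def __init__(self, data: dict) -> None:
        self._data = data

    def scan_iter(self, match: str = "*"):
        return (k for k in self._data if fnmatchcase(k, match))

    def hgetall(self, name: str) -> dict:
        return dict(self._data.get(name, {}))


fake = FakeRedis({
    "PORT_TABLE|Ethernet0": {"example_field": "x"},  # illustrative fields
    "FABRIC_LINK_TABLE|fab0": {"status": "up"},      # hypothetical table name
})
print(read_tables(fake, "FABRIC_LINK_TABLE|*"))
# {'FABRIC_LINK_TABLE': {'fab0': {'status': 'up'}}}
```

`scan_iter` is used instead of `KEYS` because it iterates incrementally and avoids blocking a busy Redis instance.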

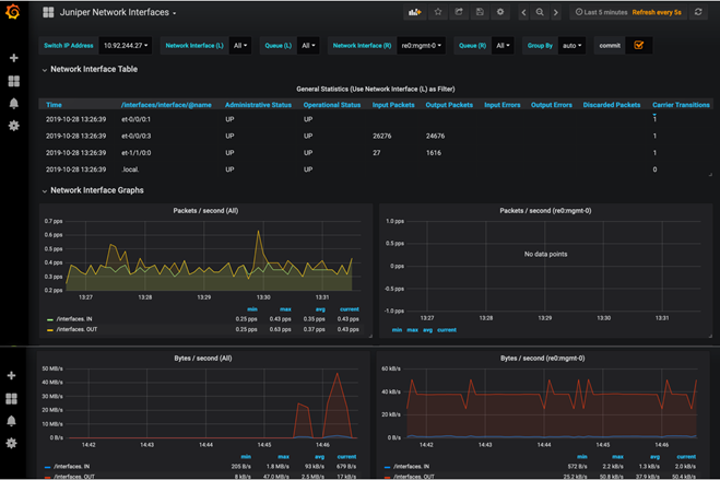

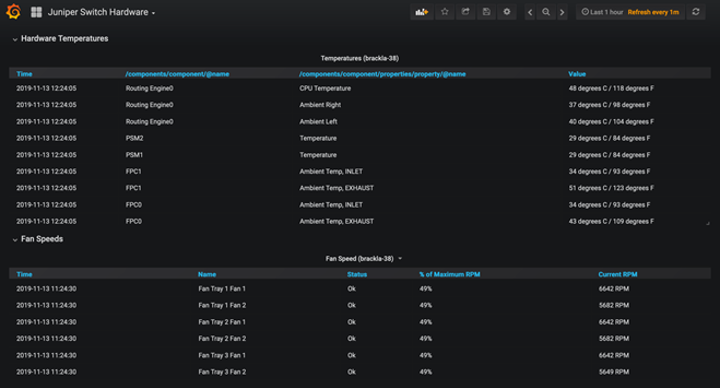

The concept behind the real-time system monitor is straightforward: using only a web browser, the system administrator should be able to assess system health thoroughly and identify problem areas within seconds. The real-time system monitor shows system alarms, hardware temperatures, fan speeds, power consumption, CPU and RAM utilization, network activity, fabric status, RIB and FIB entries and SONiC port mappings. Figure 3 shows the real-time system monitor architecture, and Figures 4 and 5 are sample screenshots from the interface.

Extending and Building on SONiC

Building multi-PFE chassis support is just one of the ways we’re taking SONiC further. Juniper has also added support for MACsec and MPLS, both of which are required to expand SONiC deployment across the infrastructure. For MACsec, we’re taking advantage of the recently introduced SAI MACsec API. Not only have we ported an open-source WPA supplicant into SONiC as the MACsec control plane, but we’ve also enhanced it with advanced features such as extended packet numbering (XPN) and support for additional cipher suites. These features are critical for today’s high-speed interfaces.
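Some quick arithmetic shows why XPN matters at these speeds. Standard MACsec uses a 32-bit packet number (PN), while XPN extends it to 64 bits, and a secure association must be rekeyed before its packet numbers run out. The Python sketch below estimates time to exhaustion at line rate, assuming back-to-back minimum-size frames (64 bytes plus 20 bytes of preamble, SFD and inter-frame gap) and ignoring MACsec’s own per-frame overhead, so real figures will be somewhat longer.

```python
MIN_FRAME_BITS = (64 + 20) * 8  # minimum Ethernet frame plus preamble/SFD and inter-frame gap
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def packets_per_second(link_bps: float, frame_bits: int = MIN_FRAME_BITS) -> float:
    """Worst-case packet rate: back-to-back minimum-size frames at line rate."""
    return link_bps / frame_bits


def seconds_to_exhaust(pn_bits: int, link_bps: float) -> float:
    """Time until a secure association consumes every available packet number."""
    return (2 ** pn_bits) / packets_per_second(link_bps)


for gbps in (100, 400):
    rate = gbps * 1e9
    print(f"{gbps}G: 32-bit PN lasts {seconds_to_exhaust(32, rate):.1f} s, "
          f"64-bit XPN lasts {seconds_to_exhaust(64, rate) / SECONDS_PER_YEAR:.0f} years")
# 100G: 32-bit PN lasts 28.9 s, 64-bit XPN lasts 3928 years
# 400G: 32-bit PN lasts 7.2 s, 64-bit XPN lasts 982 years
```

Under these worst-case assumptions, a 400G link without XPN would need a new key every few seconds, which is impractical for the control plane.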

Ultimately, as exciting as the current version of SONiC is, it focuses primarily on switching and IP connectivity, and its lack of MPLS leaves a big hole when it comes to WAN support. So we’ve added complete software support for MPLS, from configuration down to the SAI. In addition, with our cRPD cloud-native routing stack running in SONiC, all types of MPLS routes can be programmed, including routes with ECMP.
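For readers less familiar with MPLS forwarding, here is a minimal Python sketch of what an MPLS route with ECMP amounts to in the data plane: an incoming top label maps to a group of next hops, each with its own label operation (swap or pop), and a per-flow hash picks one member so packets of a flow stay in order. The table layout, interface names and CRC32-based hash are illustrative assumptions, not the SONiC or cRPD data model.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class NextHop:
    interface: str
    action: str          # "swap" or "pop"
    out_label: int = 0   # only meaningful for "swap"


# Illustrative label FIB: incoming top label -> ECMP group of next hops.
LFIB = {
    100: [NextHop("Ethernet0", "swap", 200), NextHop("Ethernet4", "swap", 300)],
    101: [NextHop("Ethernet8", "pop")],
}


def forward(label_stack: list, flow_key: str):
    """Apply the top-label operation and return (egress interface, new label stack)."""
    group = LFIB[label_stack[0]]
    # Hash the flow so every packet of the same flow uses the same ECMP member.
    nh = group[zlib.crc32(flow_key.encode()) % len(group)]
    rest = label_stack[1:]
    if nh.action == "swap":
        return nh.interface, [nh.out_label] + rest
    if nh.action == "pop":
        return nh.interface, rest
    raise ValueError(f"unsupported action {nh.action!r}")


print(forward([101, 16], "flow-a"))  # ('Ethernet8', [16])
print(forward([100], "flow-a"))      # ('Ethernet0', [200]) or ('Ethernet4', [300]), fixed per flow
```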

Clearly, we at Juniper are big fans of SONiC and its potential to bring more choice to more parts of the network. By combining SONiC with our own innovations and expertise, we can help our customers extend open-source flexibility as far as they choose to take it.

Additional Information

Look for future blog posts on this subject, including an in-depth look at Juniper’s cloud-native routing stack available for SONiC-enabled hardware. We’ll explore how it provides a highly performant, feature-rich routing stack that is more manageable and programmable than the default open-source routing stack (FRRouting) typically bundled with SONiC.

In the meantime, check out Juniper’s Disaggregation Playlist on YouTube, which contains demonstrations of Multi-PFE SONiC, MPLS, and MACsec on SONiC, among others.

For more information on the Juniper PTX10008 Packet Transport Router, refer to this data sheet.

For more details on Juniper’s commitment to disaggregation and its benefits, please visit this blog.