A Kubernetes Container Network Interface (CNI) does not a cluster network make. It’s often said that a problem well stated is half solved, and it’s for this reason that when cluster architects and operators think of solving for networking as simply CNI, they often cut themselves off at the knees before they’ve even begun.

In launching CN2, Juniper Networks’ cloud- and Kubernetes-native SDN, we strove to emphasize this point and to show that treating cluster networking as just a CNI is a reductionist viewpoint, hence the “SDN” moniker, imperfectly interpreted as it may be. Some readers may know that pod interconnectivity is the main job of a CNI implementation. They may also know that services networking (typically implemented by kube-proxy) and NetworkPolicy access control are additional basics that are commonly lumped in with the CNI. But what does it mean to go beyond that definition? Here, we’ll dig into what that means with CN2, why it’s important and where it’s beneficial. By focusing on the most basic concept in CN2, its virtual networks, we’ll see how powerful its fundamentals are compared to today’s run-of-the-mill CNI.

Escaping 2D Kubernetes Networking

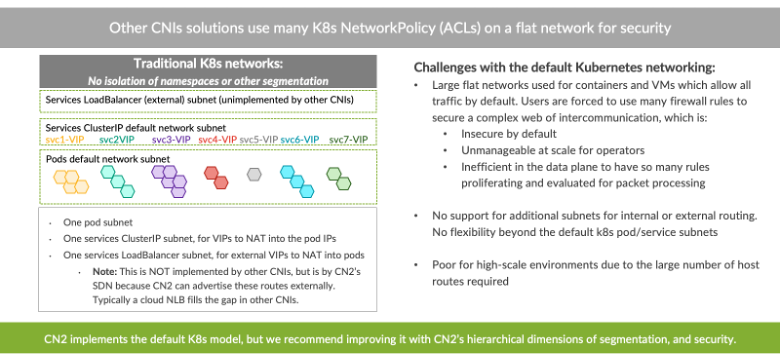

The essential challenge with Kubernetes networking is that it’s “flat,” so to speak. That means there is one subnet for all pods, one subnet for all intra-cluster services, and one subnet for externally exposed cluster services. This last type is called a LoadBalancer or Load Balancer Ingress (not to be confused with Kubernetes Ingresses). The mainstream open-source CNIs don’t implement it because doing so requires programming a load balancer or router of some type outside the cluster, like a cloud provider network load balancer. While CN2 does cover the load balancer functionality, the other two flat subnets are more interesting to examine.

Because CN2 builds on Contrail Networking, into which Juniper poured a decade of networking innovation, it offers much more flexibility through its virtual networking and its policies for security rules and network topologies. Virtual networks are CN2’s building blocks.

CN2 virtual networks are commonly associated with a subnet, although they can share subnets, too. Native IPAM is also included for subnets. Virtual networks are a vehicle for intrinsic network isolation, so subnets can overlap. CN2’s overlay encapsulation on the IP network substrate between the cluster nodes makes this possible, although overlays are optional, too, with certain caveats when omitting them.
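To make the overlapping-subnet point concrete, here is a minimal conceptual sketch in Python. It is not CN2’s API or data model: the `VirtualNetwork` class, its toy IPAM, and the network names and CIDRs are all hypothetical. It only illustrates the idea that an endpoint is identified by its virtual network plus its IP, so two isolated networks can reuse the same address range.

```python
import ipaddress

# Hypothetical model (not CN2's API): each virtual network owns its own
# address space, so an endpoint is identified by (network, IP), not IP alone.
class VirtualNetwork:
    def __init__(self, name, cidr):
        self.name = name
        self.subnet = ipaddress.ip_network(cidr)
        self._free = iter(self.subnet.hosts())  # usable host addresses
        self.endpoints = {}                     # IP address -> pod name

    def allocate(self, pod):
        """Toy IPAM: hand out the next free address in this network."""
        ip = next(self._free)
        self.endpoints[ip] = pod
        return ip

blue = VirtualNetwork("blue", "10.0.0.0/24")
red = VirtualNetwork("red", "10.0.0.0/24")  # same CIDR, separate network

blue_ip = blue.allocate("blue-pod")
red_ip = red.allocate("red-pod")

# Both pods hold the same address, yet each lookup stays inside its network.
print(blue_ip, red_ip, blue.subnet.overlaps(red.subnet))
print(blue.endpoints[blue_ip], red.endpoints[red_ip])
```

In a flat network the second allocation would be an address conflict; here it is harmless because nothing ever resolves an IP without first knowing which network it belongs to.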

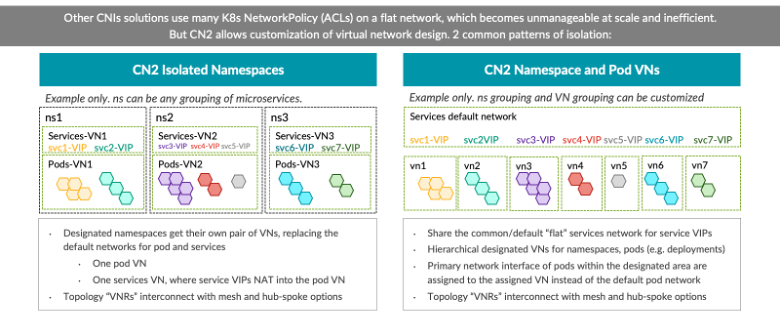

With this basic virtual networking building block, CN2 facilitates endless customization of virtual network design. One possibility is isolated Kubernetes namespaces, where designated namespaces get their own pair of virtual networks, one for pods and another for services. Another common design employs the default flat service network and subnet but puts every microservice, or only selected ones, into its own virtual-network microsegment, with per-namespace, per-deployment, or even per-pod flexibility achieved in practice with Kubernetes annotations.

Multi-dimensional Segmentation

Compare the elegance of a dedicated virtual network per microservice deployment or namespace to the mess of a single flat network, especially at scale, where Kubernetes NetworkPolicy security rules are the only tool for managing isolation and allowed interconnectivity. Layering security rules on top of virtual-network isolation provides more manageable multi-dimensional segmentation: broad-strokes network isolation, refined within those segments by finer-grained microsegmentation policies, such as denying pod-to-pod traffic within a network or across connected networks.
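The two layers can be sketched as a simple decision function. This is an illustrative Python model only, under stated assumptions: the `Pod` type, the `CONNECTED` and `DENY` sets, and all network and role names are hypothetical and do not represent CN2 resources or NetworkPolicy syntax.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pod:
    name: str
    network: str  # virtual network the pod is attached to
    role: str     # stand-in for a label selector

# Layer 1 (broad strokes): which virtual networks are connected at all.
CONNECTED = {frozenset({"frontend-vn", "backend-vn"})}

# Layer 2 (fine grain): deny rules as (source role, destination role).
DENY = {("batch", "db")}

def networks_connected(a: str, b: str) -> bool:
    return a == b or frozenset({a, b}) in CONNECTED

def allowed(src: Pod, dst: Pod) -> bool:
    # Network isolation is checked first; policy only refines what is left.
    if not networks_connected(src.network, dst.network):
        return False
    return (src.role, dst.role) not in DENY

web = Pod("web", "frontend-vn", "web")
db = Pod("db", "backend-vn", "db")
batch = Pod("batch", "backend-vn", "batch")
guest = Pod("guest", "tenant-b-vn", "web")

print(allowed(web, db))    # True: networks connected, no deny rule
print(allowed(batch, db))  # False: same network, denied by policy
print(allowed(guest, db))  # False: networks are not connected
```

The design point is that the policy set only has to describe exceptions inside already-connected segments, rather than encode every isolation boundary of a flat network.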

To interconnect virtual networks, CN2 supports topology patterns such as meshes and hub-and-spoke, which align with common communication patterns among application microservices. This approach is much simpler to visualize and configure, and, like everything in CN2, configuration is done as custom resources. As such, CN2 is provisioned through kubectl, K9s, or whatever as-code mechanisms operators use to manage the rest of Kubernetes.
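As a rough illustration of how the two topology patterns differ, the following Python sketch enumerates the network-to-network links each one implies. The function names and the example network names are hypothetical; in CN2 itself these topologies are expressed as custom resources, which this sketch does not attempt to reproduce.

```python
from itertools import combinations

def mesh(networks):
    """Full mesh: every virtual network is linked to every other one."""
    return {frozenset(pair) for pair in combinations(networks, 2)}

def hub_spoke(hub, spokes):
    """Hub-and-spoke: each spoke is linked to the hub, not to other spokes."""
    return {frozenset({hub, spoke}) for spoke in spokes}

apps = ["cart", "catalog", "payments"]

mesh_links = mesh(apps + ["shared-services"])
hub_links = hub_spoke("shared-services", apps)

print(len(mesh_links), len(hub_links))
print(frozenset({"cart", "catalog"}) in mesh_links)  # linked in a mesh
print(frozenset({"cart", "catalog"}) in hub_links)   # isolated as spokes
```

A mesh of n networks needs n(n-1)/2 links, while hub-and-spoke needs only one per spoke and keeps the spokes isolated from each other, which suits a shared service consumed by otherwise unrelated applications.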

With an understanding of CN2 virtual network and topology building blocks and why they offer simpler abstraction and management of interconnectivity policies, let’s look at where these are useful in the real world.

Solving Problems at Scale

First, security should come to mind because we’ve spoken at length about isolation. Clusters are increasingly multi-service, multi-application, multi-team and multi-tenant; in short, they are multi-purpose in many ways. Multi-dimensional segmentation helps to slice and dice a cluster into segments shaped to fit each of those purposes.

Second, it’s difficult to accept a flat pod and service network from a networking and security standpoint. Often, service or pod endpoints need to be routable from outside the cluster. It’s alarming to expose entire large subnets for the sake of a few endpoints. And it’s worse to manage the distributed routing of pod and service subnet IPs dynamically in a physical data center, dealing with its routing protocols down to the server nodes. Yet, these are the real difficulties that security and networking teams face when they use other CNIs—again, especially at scale.
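A quick back-of-the-envelope calculation shows the size of that exposure gap. The pod subnet and endpoint addresses below are made-up example values, not taken from any real cluster or from CN2 defaults.

```python
import ipaddress

# Assumed example values: a flat pod subnet and three pods that actually
# need to be reachable from outside the cluster.
pod_subnet = ipaddress.ip_network("10.244.0.0/16")
routable_endpoints = [
    ipaddress.ip_address(addr)
    for addr in ("10.244.3.17", "10.244.8.5", "10.244.20.40")
]
assert all(ep in pod_subnet for ep in routable_endpoints)

# Option A: make the whole flat subnet routable.
whole_subnet = pod_subnet.num_addresses

# Option B: make only the needed endpoints routable, as /32 host routes.
host_routes = [ipaddress.ip_network(f"{ep}/32") for ep in routable_endpoints]
only_needed = sum(route.num_addresses for route in host_routes)

print(f"whole subnet: {whole_subnet} addresses reachable")
print(f"host routes:  {only_needed} addresses reachable")
```

With these example numbers, exposing the subnet makes 65,536 addresses reachable in order to serve three endpoints.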

CN2 provides overlay virtual networking that is agnostic to the underlay, along with centralized, open-standards BGP signaling to cluster gateways, such as routers. This makes a meaningful difference in the cloud due to per-node IP exhaustion, but in bare-metal or even virtualized private data center environments, CN2 is massively simpler for networking integration than other CNIs. It vastly simplifies and secures the ability to route into the cluster and even does so more efficiently. For example, a NodePort service, which lands traffic on an arbitrary node and forces the cluster compute to do the work of redirecting ingress packets, is really a contrived and thus obsolete concept in a CN2-powered cluster (to be clear, CN2 supports NodePort too, but it isn’t recommended).

Third, CN2 can federate virtual networks via gateways to provide routing reachability directly to a chosen pod or service (which is very useful for a Kubernetes Ingress service), and it can also federate virtual networks between multiple CN2 instances, which simplifies multi-cluster networking. CN2 makes that network federation easy. Moreover, CN2 offers yet another option: it can act as the CNI and SDN for multiple clusters simultaneously (up to 16), pulling off some unique cluster-spanning acrobatics to which no other CNI can lay claim, namely stretching virtual networks across clusters and obviating the need for federation.

Beyond the Amuse Bouche

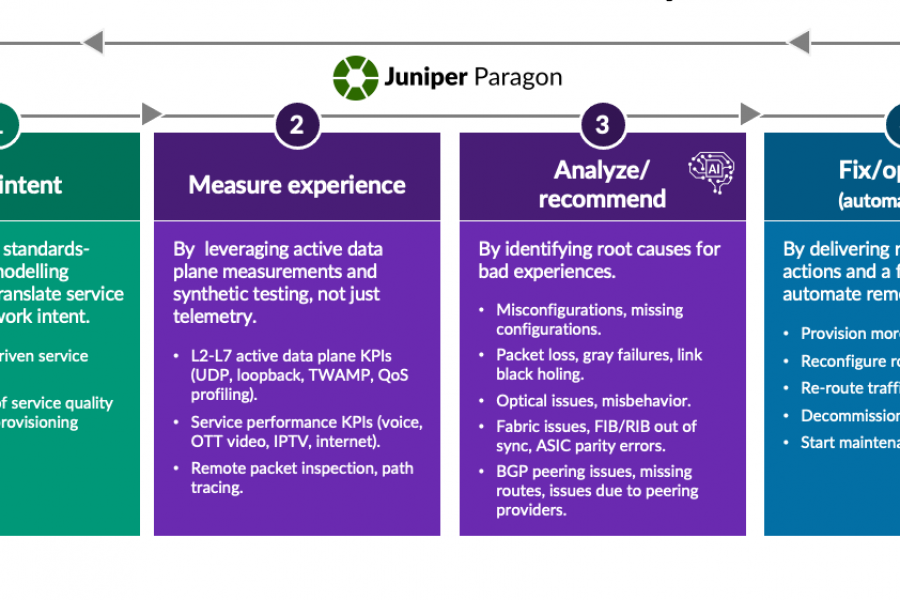

As we’ve outlined in this blog, CN2 has more to offer in basic networking flexibility than one’s biggest Wüsthof knife block – but we’ve only laid the foundational concepts here. CN2 has more to offer in terms of telemetry and flow analytics, troubleshooting and assurance, and more advanced networking and security features, from multi-interface, multi-network pods to network-aware pod scheduling to SR-IOV data-center underlay network automation.

Let’s leave that for another time, perhaps at KubeCon. Join us at Booth S50 in Detroit, MI on October 26 – 28. You can also check out Juniper’s CN2 free trial to get your hands dirty.