Do you remember the early days of shopping online?

I’m not talking about the crippling fear that you might send your credit card number into a digital abyss and end up with nothing at your doorstep. I’m talking about the fact that everyone seemed perfectly fine with shipments sometimes arriving more than a week after ordering. It was a novelty just to have something shipped straight to your home.

These days, retailers have cut that down to two-day, and even two-hour, delivery. We now expect delivery to be immediate, automated and accurate, and any delay means the “Where’s My Stuff?” button on Amazon.com gets some extra love. Retailers have invested billions to keep consumers happy.

The same story is repeating itself in the service provider/telecommunications market, where expectations for service delivery are outpacing the capabilities of current infrastructure. Without further investment, consumer and business expectations that every service will be delivered at high speed, 24/7 and without error are simply unrealistic.

Something has to change. And it’s all about living life on the edge.

With 5G deployments on the horizon, service providers are seizing the opportunity to transform their entire networks toward distributed clouds that address the need for localized services, lower-latency applications and bandwidth optimization.

The edge in particular has been a focus of distributed cloud architectures, providing compute and storage at physical locations such as base stations and central offices. With thousands of sites in their regions, service providers have invested billions in buildings, equipment and infrastructure. While proximity to users should give service providers a huge competitive advantage in delivering rich, low-latency applications, this high-value asset has so far remained largely untapped.

My recent conversations with service provider CTOs around the world validated this: they have identified the “edge cloud” as a means of deploying advanced services ahead of 5G deployments. These CTOs said that, in addition to hosting service provider VNFs on edge cloud infrastructure, they are looking to monetize these platforms by opening them up to enterprise and OTT customers for rich, latency-sensitive and interactive applications such as IoT, connected cars, interactive games and VR/AR.

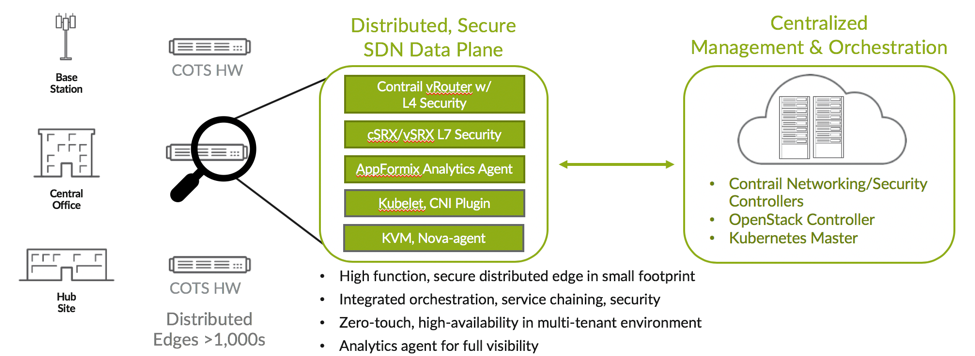

As such, the “edge cloud” should be considered a key part of the broader service provider transformation toward distributed clouds. Much like hyperscale cloud infrastructure, I believe distributed clouds will leverage OpenStack and Kubernetes combined with highly scalable SDN platforms, with the same high level of integrated automation, virtualization, programmability and security. But all of this must run on a very light footprint that fits within the scarce space and power envelope of edge locations. Distributed clouds must also intelligently orchestrate and automate the provisioning and operation of virtual, physical and container network functions. And, to meet service provider revenue needs, distributed clouds must support third-party VNFs and open APIs for interoperability.

To that end, here are Juniper Networks’ five steps to deploying the edge cloud.

1) Very small footprint: the number one priority is to optimize for the space and power constraints common in edge deployments without sacrificing the rich functionality of a multi-tenant secure cloud. It’s vital that an edge cloud balance compute power, service delivery, orchestration, intelligence and security within a lightweight environment.

2) Multi-tenancy: scarce edge compute resources will be shared by both infrastructure applications and high-value customer services. In these dense multi-tenant environments, strong isolation and effective security controls are essential. To ensure service continuity, detecting and remediating hotspots is crucial to prevent a “noisy neighbor” from canceling out the benefits of deploying at the edge.

3) Orchestration: the challenge of running services across highly distributed infrastructure is the sheer effort of managing each site. With a single-pane-of-glass orchestration interface, service providers can define services and policies once and apply them everywhere. This also allows all physical and logical elements (servers, switches, VMs, virtual networks and containers) to be monitored simultaneously.

4) Security: for all its benefits, the edge cloud substantially increases the attack surface, and that surface sits close to the Internet edge. It will be imperative to establish a firm security perimeter with integrated visibility, telemetry and network policy enforcement. This should include adaptive firewall policies and tag-based enforcement of security policies across the entire software stack, including virtual machines and containers.

5) Open/Interoperability: with multiple orchestration stacks and a focus on flexibility and reliability, edge cloud will require a commitment to open source projects such as Tungsten Fabric and the Linux Foundation’s Akraino Edge Stack. Similarly, the inherent nature of the edge will require multi-vendor interoperability through open APIs that ensure stability and high performance.
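To make the “tag-based” enforcement in step 4 a little more concrete, here is a minimal Python sketch. It assumes a generic model in which every VM or container carries key/value tags and rules are written against those tags rather than against IP addresses. The `Workload` and `Rule` classes, the tag names and the first-match, default-deny semantics are hypothetical illustrations, not Contrail Security’s actual data model or API.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    kind: str   # "vm" or "container"; the policy deliberately ignores this
    tags: dict  # hypothetical tag names, e.g. {"tier": "web", "site": "co-01"}


@dataclass
class Rule:
    src: dict    # tags the source workload must carry
    dst: dict    # tags the destination workload must carry
    port: int
    action: str  # "pass" or "deny"


def matches(workload: Workload, required: dict) -> bool:
    """True when the workload carries every required tag with the same value."""
    return all(workload.tags.get(k) == v for k, v in required.items())


def evaluate(rules: list, src: Workload, dst: Workload, port: int) -> str:
    """Return the action of the first matching rule; unmatched traffic is denied."""
    for rule in rules:
        if rule.port == port and matches(src, rule.src) and matches(dst, rule.dst):
            return rule.action
    return "deny"


# One rule, written once against tags, covers VMs and containers at any site.
rules = [Rule(src={"tier": "web"}, dst={"tier": "db"}, port=5432, action="pass")]
web_vm = Workload("web-1", "vm", {"tier": "web", "site": "co-01"})
web_pod = Workload("web-2", "container", {"tier": "web", "site": "co-02"})
db_vm = Workload("db-1", "vm", {"tier": "db", "site": "co-01"})

print(evaluate(rules, web_vm, db_vm, 5432))   # pass
print(evaluate(rules, web_pod, db_vm, 5432))  # pass
print(evaluate(rules, db_vm, web_vm, 5432))   # deny
```

Because the rule refers to tags rather than addresses or workload types, a new container at a new edge site picks up the same policy as soon as it is tagged, which is the “define once, apply everywhere” property the steps above call for.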

Edge cloud is not just a technical exercise for network architects interested in the nuanced approaches for OpenStack and Kubernetes (as cool as they are).

Gartner states that by 2022, “30% of communications service providers that have deployed 5G will also have deployed edge computing services”[1] and that “50% of enterprise data will be created and processed outside a traditional centralized data center or cloud”[2]. These two statistics alone show why virtualizing the edge infrastructure and operating it on an edge cloud is going to be fundamental to how 5G gets rolled out.

This is why we’re announcing the availability of Juniper’s Contrail Edge Cloud.

Contrail Edge Cloud is a software-defined, virtualized solution with a very small footprint optimized for space- and power-constrained edge deployments, without sacrificing the rich functionality of a multi-tenant secure cloud.

Contrail Edge Cloud is an agile orchestrator for the edge that leverages Contrail Networking, OpenStack, Kubernetes, cSRX/vSRX and Contrail Security, as well as Contrail Insights for analytics. It features several industry-leading innovations that no other production-grade edge solution currently offers, such as the SDN capabilities of Contrail Networking and Contrail Security augmented by Kubernetes to provide advanced container networking, security and service chaining.

With improved service delivery at the edge, service providers can deploy dynamic, low-latency microservices closer to their customers, delivering the right amount of “stickiness” for retail subscribers and expanding new business services for their enterprise customers. Lastly, Contrail Edge Cloud delivers integrated security and analytics to help keep the entire network safe and reliable.

Contrail Edge Cloud is a highly innovative offering for service providers who want faster, more agile, automated service delivery. With this solution, service providers of all types will be able to unleash the enormous potential of the edge as they transform their networks into a secure, automated distributed cloud.

[1] Gartner Report “Market Guide for CSP Edge Computing Solutions”, published March 2018.