The network is changing. More organizations are embracing cloud and edge computing models to make applications more robust and to deliver the performance necessary in an experience-first world. Today’s applications have stringent performance and latency requirements. They are also containerized, distributed across data centers and decoupled from the underlying infrastructure, which results in a massive increase in east-west communications. As a result, organizations need efficient ways to deliver applications at scale with the necessary levels of performance and security. The network plays a key part in this new world.

Cloud-Native Networking

A data center network fabric comprises a set of top-of-rack (ToR)/leaf and spine switches that connect to servers through network interface cards (NICs). The switches typically implement Layer 2 and Layer 3 forwarding functions and provide value-added features such as overlay networking (EVPN-VXLAN). However, Layer 4-7 services, which support important application, security and management functions, have been outside the realm of these physical switches; they are typically implemented in separate devices (hardware or software-based) within the network.

The rise of virtualization and disaggregation and the move to containerized microservices have resulted in new traffic patterns within and across data center hosts. An increasing portion of traffic is intra-host: inter-VM and inter-container packets that never reach the external link of the NIC or the ToR/Leaf switches. However, this traffic is still subject to the same Layer 2-3 forwarding rules and Layer 4-7 service rules as inter-host (cross-fabric) traffic.
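The distinction between intra-host and cross-fabric traffic can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Endpoint` and `Flow` types, the host names and the `ALLOWED_PORTS` policy are hypothetical and do not come from any particular product. The point it shows is that the path a flow takes changes, while the policy check it must pass does not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str  # hypothetical VM or container name
    host: str  # hypothetical physical server hosting the endpoint


@dataclass(frozen=True)
class Flow:
    src: Endpoint
    dst: Endpoint
    dst_port: int


# Hypothetical L4 policy: destination ports that are permitted.
ALLOWED_PORTS = {443, 8080}


def is_intra_host(flow: Flow) -> bool:
    """A flow is intra-host when both endpoints live on the same server."""
    return flow.src.host == flow.dst.host


def forward(flow: Flow) -> tuple:
    """Return (path, verdict); the same policy applies to both paths."""
    path = "intra-host" if is_intra_host(flow) else "cross-fabric"
    verdict = "allow" if flow.dst_port in ALLOWED_PORTS else "drop"
    return path, verdict


web = Endpoint("web", "server-1")
cache = Endpoint("cache", "server-1")
db = Endpoint("db", "server-2")

print(forward(Flow(web, cache, 8080)))  # never leaves server-1
print(forward(Flow(web, db, 5432)))     # crosses the fabric
```

In this toy model the intra-host flow is evaluated against exactly the same rule set as the cross-fabric one, even though it never touches a leaf switch.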

These functions are traditionally implemented through x86-based networking – e.g. a software load balancer that runs as a VM or a containerized function on a server’s x86 CPU cores. Packets traverse these network functions as part of the intra-host, inter-VM or inter-container traffic management.

With the advent of Kubernetes-based workloads, traffic patterns have become quite complicated. NAT functionality on hosts, hair-pinning of traffic and load balancing across a Kubernetes cluster are illustrative examples. Securing pod-to-pod traffic through mutual Transport Layer Security (mTLS) is another wrinkle in this new world. In addition, when container pods are hosted within node VMs, overlay encapsulation and related management can create new networking complexity and demands. Lastly, firewalling functionality to protect service-to-service communication is increasingly a requirement.
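To make the host-level NAT and load-balancing example concrete, here is a minimal Python sketch of destination NAT for a service virtual IP. It is a simplified model under stated assumptions, not a description of how any Kubernetes component is implemented: the `SERVICES` table, the addresses and the hash-based backend selection are all hypothetical.

```python
import hashlib

# Hypothetical service table: (virtual IP, port) -> backend pod addresses.
SERVICES = {
    ("10.96.0.10", 80): [("10.244.1.5", 8080), ("10.244.2.7", 8080)],
}


def translate(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> tuple:
    """Return the (ip, port) the packet should actually be sent to.

    Traffic addressed to a known service VIP is rewritten (DNAT) to one
    backend; all other traffic is returned unchanged.
    """
    backends = SERVICES.get((dst_ip, dst_port))
    if backends is None:
        return (dst_ip, dst_port)  # not a service VIP, no translation

    # Hash the flow identifiers so every packet of a flow picks the
    # same backend (an assumed selection scheme for this sketch).
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(backends)
    return backends[index]


# A client pod connects to the service VIP; the host rewrites the destination.
print(translate("10.244.1.9", 40000, "10.96.0.10", 80))
```

Every packet on the host passes through a lookup like this one, which is part of why these functions consume CPU cycles when they run in software.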

In addition to realizing network policy, we now assume the network should take responsibility for L2-L7 functionality by extending its reach into the server to run networking services such as a load balancer, L7 proxy or firewall.

Enter the SmartNIC

That’s where the DPU and SmartNIC come in. The DPU (data processing unit) can handle these L2-L7 functions, allowing the server CPU cores to focus on application processing and not be encumbered with data center networking, storage and security tasks. Gartner predicts that by 2023, one in three NICs shipped will be a SmartNIC (or function accelerator card [FAC]).

Powered by DPUs, these SmartNICs are effectively platforms that provide services at the “network edge”, i.e. before the traffic hits the server. One example of a DPU is the NVIDIA BlueField-2, which combines Arm cores and additional security accelerators with an integrated ConnectX-6 Dx networking ASIC (from the NVIDIA ConnectX family).

Network functions and firewalls, such as Juniper Networks’ Virtual SRX and the Containerized SRX, can be accelerated by DPUs. Such functions can run on the server’s x86 cores or the DPU’s Arm cores and exploit the acceleration capabilities of the DPU ASIC for both networking and security functionality.

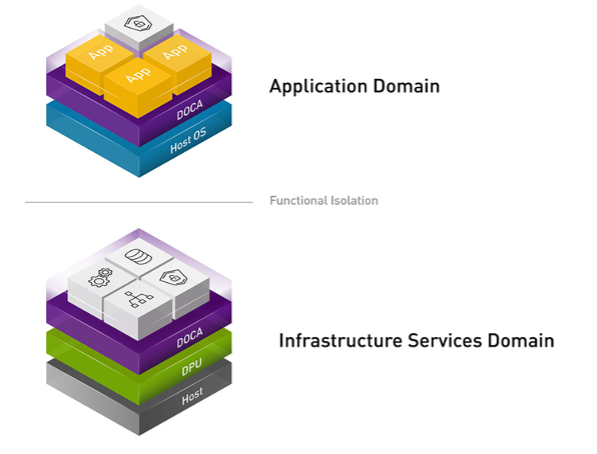

To exploit these acceleration capabilities, the offloaded network functions and local network and security services must communicate with the ASIC through an API, such as the one provided by NVIDIA’s Data Center Infrastructure-on-a-Chip Architecture (DOCA) SDK. With the DOCA SDK as the foundation of accelerated network and security applications and the DOCA runtime used to support such applications, we can realize the goal of a secure, accelerated network edge with the DPU-based SmartNIC. See Figure 1 above for an illustrated example.

Extending networking (L2-L7) to the server through DPU-powered SmartNICs will create several exciting possibilities for networking and security. It will make the network more agile while delivering higher performance and security for newer cloud-native applications. The data center and the network within it are indeed changing, and the DPU is enabling this transformation.